Profile

Publications

Automatic region-growing system for the segmentation of large point clouds

This article describes a complete unsupervised system for the segmentation of massive 3D point clouds. Our system supplies the missing components needed to go from 99% to 100% automation in the construction industry. It scales up to billions of 3D points and targets a generic low-level grouping of planar regions usable by a wide range of applications. Furthermore, we introduce a hierarchical multi-level segment definition to cope with potential variations in high-level object definitions. The approach first leverages planar predominance in scenes through a normal-based region growing. Then, for usability and simplicity, we designed a heuristic that determines three RANSAC-inspired parameters without user supervision. These are the distance threshold for the region growing, the threshold for the minimum number of points needed to form a valid planar region, and the decision criterion for adding points to a region. Our experiments are conducted on 3D scans of complex buildings to test the robustness of the “one-click” method in varying scenarios. Labelled and instantiated point clouds from different sensors and platforms (depth sensor, terrestrial laser scanner, hand-held laser scanner, mobile mapping system), in different environments (indoor, outdoor, buildings) and with different objects of interest (AEC-related, BIM-related, navigation-related) are provided as a new extensive test-bench. The current implementation processes ten million points per minute on a single-threaded CPU configuration. Moreover, the resulting segments are tested for the high-level task of semantic segmentation over 14 classes, achieving an F1-score of 90+ averaged over all datasets while reducing the training phase to a fraction of that of state-of-the-art point-based deep learning methods. We provide this baseline along with six new open-access datasets with 300+ million hand-labelled and instantiated 3D points at: https://www.graphics.rwth-aachen.de/project/45/.

@article{POUX2022104250,

title = {Automatic region-growing system for the segmentation of large point clouds},

journal = {Automation in Construction},

volume = {138},

pages = {104250},

year = {2022},

issn = {0926-5805},

doi = {10.1016/j.autcon.2022.104250},

url = {https://www.sciencedirect.com/science/article/pii/S0926580522001236},

author = {F. Poux and C. Mattes and Z. Selman and L. Kobbelt},

keywords = {3D point cloud, Segmentation, Region-growing, RANSAC, Unsupervised clustering}

}
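To make the normal-based region growing described in the abstract above more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the function name `region_growing`, the parameter names `radius`, `max_angle_deg` and `min_points`, and the brute-force neighbour search are all assumptions made for illustration. In particular, the sketch reads the "distance threshold" as a neighbour search radius and the "decision criterion" as a limit on the angle between normals, and it takes the three parameters by hand, whereas the paper determines them automatically.

```python
import math
from collections import deque


def region_growing(points, normals, radius, max_angle_deg, min_points):
    """Group points into roughly planar regions (illustrative sketch only).

    points, normals: lists of (x, y, z) tuples; normals are assumed unit length.
    radius: neighbour search distance (assumed meaning of the distance threshold).
    max_angle_deg: largest normal deviation allowed when adding a point.
    min_points: regions smaller than this are rejected and labelled -1.
    """
    cos_limit = math.cos(math.radians(max_angle_deg))
    labels = [None] * len(points)  # None means "not visited yet"
    next_label = 0

    def neighbours(i):
        # Brute-force O(n) search; a k-d tree or octree would replace this at scale.
        return [j for j in range(len(points))
                if j != i and math.dist(points[i], points[j]) <= radius]

    for seed in range(len(points)):
        if labels[seed] is not None:
            continue
        region = [seed]
        labels[seed] = next_label
        queue = deque([seed])
        while queue:
            current = queue.popleft()
            for j in neighbours(current):
                if labels[j] is not None:
                    continue
                # abs() makes the test independent of normal orientation.
                dot = sum(a * b for a, b in zip(normals[current], normals[j]))
                if abs(dot) >= cos_limit:
                    labels[j] = next_label
                    region.append(j)
                    queue.append(j)
        if len(region) < min_points:
            for j in region:
                labels[j] = -1  # too small to count as a planar region
        else:
            next_label += 1
    return labels


# Tiny synthetic scene: a 3x3 floor patch, a 3x3 wall patch, one stray point.
floor = [(float(x), float(y), 0.0) for x in range(3) for y in range(3)]
wall = [(3.0, float(y), float(z)) for y in range(3) for z in range(1, 4)]
scene_points = floor + wall + [(10.0, 10.0, 10.0)]
scene_normals = [(0.0, 0.0, 1.0)] * 9 + [(1.0, 0.0, 0.0)] * 9 + [(0.0, 1.0, 0.0)]
scene_labels = region_growing(scene_points, scene_normals,
                              radius=1.1, max_angle_deg=10.0, min_points=4)
```

In this toy scene the floor and the wall each end up in their own region, and the stray point is rejected because its region has fewer than `min_points` members. Processing billions of points, as the paper reports, would need a spatial index and out-of-core handling that this sketch leaves out.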

High-Fidelity Point-Based Rendering of Large-Scale 3D Scan Datasets

Digitalization of 3D objects and scenes using modern depth sensors and high-resolution RGB cameras enables the preservation of human cultural artifacts at an unprecedented level of detail. Interactive visualization of these large datasets, however, is challenging without degradation in visual fidelity. A common solution is to fit the dataset into available video memory by downsampling and compression. The achievable reproduction accuracy is thereby limited for interactive scenarios, such as immersive exploration in Virtual Reality (VR). This degradation in visual realism ultimately hinders the effective communication of human cultural knowledge. This article presents a method to render 3D scan datasets with minimal loss of visual fidelity. A point-based rendering approach visualizes scan data as a dense splat cloud. For improved surface approximation of thin and sparsely sampled objects, we propose oriented 3D ellipsoids as rendering primitives. To render massive texture datasets, we present a virtual texturing system that dynamically loads required image data. It is paired with a single-pass page prediction method that minimizes visible texturing artifacts. Our system renders a challenging dataset on the order of 70 million points with a texture size of 1.2 terabytes consistently at 90 frames per second in stereoscopic VR.

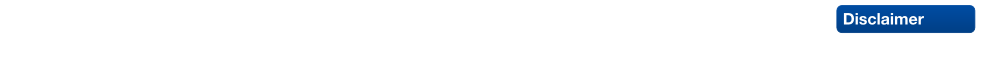
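The abstract above mentions a virtual texturing system that loads required image data on demand. The general idea of keeping only a bounded set of texture pages resident can be sketched as follows. This is a generic least-recently-used (LRU) page cache, chosen here for illustration; the abstract does not say which eviction policy the system uses, and the class name `TexturePageCache`, the `load_page` callback and the `(row, column)` page identifiers are all hypothetical.

```python
from collections import OrderedDict


class TexturePageCache:
    """Keep at most `capacity` texture pages resident, evicting the least recently used."""

    def __init__(self, capacity, load_page):
        self.capacity = capacity
        self.load_page = load_page  # hypothetical callback: page_id -> page data
        self.pages = OrderedDict()  # ordered from least to most recently used
        self.misses = 0

    def request(self, page_id):
        if page_id in self.pages:
            # Cache hit: mark the page as most recently used.
            self.pages.move_to_end(page_id)
            return self.pages[page_id]
        # Cache miss: load the page, then evict the oldest one if over capacity.
        self.misses += 1
        data = self.load_page(page_id)
        self.pages[page_id] = data
        if len(self.pages) > self.capacity:
            self.pages.popitem(last=False)
        return data


# Usage: a two-page cache with a stand-in loader that fabricates page contents.
cache = TexturePageCache(capacity=2, load_page=lambda pid: f"texels-{pid}")
for pid in [(0, 0), (0, 1), (0, 0), (1, 1)]:
    cache.request(pid)
resident_pages = list(cache.pages)
```

After these four requests, page `(0, 1)` has been evicted because `(0, 0)` was touched more recently. A real renderer would additionally predict which pages the next frame needs, which is the role the abstract assigns to its single-pass page prediction method.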

Unsupervised Segmentation of Indoor 3D Point Cloud: Application to Object-based Classification

Point cloud data of indoor scenes is primarily composed of planar-dominant elements. Automatic shape segmentation is thus valuable to avoid labour-intensive labelling. This paper provides a fully unsupervised region growing segmentation approach for efficient clustering of massive 3D point clouds. Our contribution targets a low-level grouping beneficial to object-based classification. We argue that using relevant segments for object-based classification has the potential to improve recognition accuracy, reduce computing time, and lower the manual labelling time needed. However, fully unsupervised approaches are rare due to a lack of proper generalisation of user-defined parameters. We propose a self-learning heuristic process to define optimal parameters, and we validate our method on a large and richly annotated dataset (S3DIS), yielding an 88.1% average F1-score for object-based classification. The method automatically segments indoor point clouds with no prior knowledge at commercially viable performance and is the foundation for efficient indoor 3D modelling in cluttered point clouds.

@Article{poux2020b,

author = {Poux, F. and Mattes, C. and Kobbelt, L.},

title = {UNSUPERVISED SEGMENTATION OF INDOOR 3D POINT CLOUD: APPLICATION TO OBJECT-BASED CLASSIFICATION},

journal = {ISPRS - International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences},

volume = {XLIV-4/W1-2020},

year = {2020},

pages = {111--118},

url = {https://www.int-arch-photogramm-remote-sens-spatial-inf-sci.net/XLIV-4-W1-2020/111/2020/},

doi = {10.5194/isprs-archives-XLIV-4-W1-2020-111-2020}

}

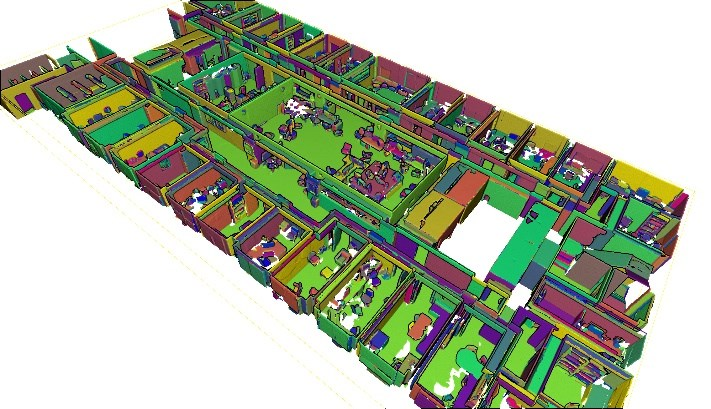
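For readers unfamiliar with the metric quoted in the abstract above, an average F1-score can be computed as sketched below. The sketch assumes an unweighted mean of per-class F1 scores (often called macro-F1); the abstract does not state exactly how its 88.1% figure is averaged. The function name `macro_f1` and the toy labels are illustrative only and are not taken from the paper or from S3DIS.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores over all classes seen in either list."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == c and p == c)  # true positives
        fp = sum(1 for t, p in pairs if t != c and p == c)  # false positives
        fn = sum(1 for t, p in pairs if t == c and p != c)  # false negatives
        # F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall.
        denominator = 2 * tp + fp + fn
        scores.append(2 * tp / denominator if denominator else 0.0)
    return sum(scores) / len(scores)


# Toy example with two classes and one misclassified segment.
truth = ["wall", "wall", "floor", "floor"]
predicted = ["wall", "floor", "floor", "floor"]
score = macro_f1(truth, predicted)
```

In the toy example, "wall" scores 2/3 and "floor" scores 4/5, so the macro average is 11/15, roughly 0.73.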

Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism

Reality capture allows the physical reality of cultural heritage sites to be reconstructed with high accuracy. The resulting 3D models are often used for various applications such as promotional content creation, virtual tours, and immersive experiences. In this paper, we study new ways to interact with these high-quality 3D reconstructions in a real-world scenario. We propose a user-centric product design to create a virtual reality (VR) application specifically intended for multi-modal purposes. It is applied to the castle of Jehay (Belgium), which is under renovation, to permit multi-user digital immersive experiences. The article proposes a high-level view of multi-disciplinary processes, from a needs analysis to the 3D reality capture workflow and the creation of a VR environment incorporated into an immersive application. We provide several relevant VR parameters for the scene optimization, the locomotion system, and the multi-user environment definition that were tested in a heritage tourism context.

@article{poux2020a,

title={Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism},

volume={12},

ISSN={2072-4292},

url={http://dx.doi.org/10.3390/rs12162583},

DOI={10.3390/rs12162583},

number={16},

journal={Remote Sensing},

publisher={MDPI AG},

author={Poux, Florent and Valembois, Quentin and Mattes, Christian and Kobbelt, Leif and Billen, Roland},

year={2020},

month={Aug},

pages={2583}

}