Publications

MobileVideoTiles: Video Display on Multiple Mobile Devices

Modern mobile phones can capture and process high-quality videos, which makes them popular tools for creating and watching video content. However, when a group watches a video together, a single mobile display is inconvenient because of its small form factor. One idea is to combine multiple mobile displays into a larger interactive surface for sharing visual content. So far, however, a practical framework supporting synchronous video playback on multiple mobile displays has been missing. We present the design of “MobileVideoTiles”, a mobile application that enables users to watch local or online videos on a big virtual screen composed of multiple mobile displays. We focus on improving the video quality and usability of the tiled virtual screen. The major technical contributions include mobile peer-to-peer video streaming, playback synchronization, and accessibility of video resources. The prototype application has been downloaded several thousand times since its release and has received very positive feedback from users.
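
The abstract names playback synchronization as a core contribution without describing the mechanism. A common way to align playback across devices is to estimate each follower's clock offset relative to one leader device with an NTP-style request/response exchange, then derive the media position from a start time announced on the leader's clock. The Python sketch below assumes exactly that scheme; the leader/follower roles and every function name are hypothetical and not taken from MobileVideoTiles.

```python
from typing import Iterable, Tuple

# Hypothetical sketch: NTP-style clock alignment between a leader and a follower device.
# One exchange yields four timestamps:
#   t0 = follower sends request    (follower clock)
#   t1 = leader receives request   (leader clock)
#   t2 = leader sends reply        (leader clock)
#   t3 = follower receives reply   (follower clock)

def estimate_offset(t0: float, t1: float, t2: float, t3: float) -> Tuple[float, float]:
    """Return (offset, round_trip_delay), where offset = leader_clock - follower_clock.

    The offset estimate is exact only if the network delay is symmetric.
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2.0
    round_trip = (t3 - t0) - (t2 - t1)
    return offset, round_trip

def best_offset(exchanges: Iterable[Tuple[float, float, float, float]]) -> float:
    """Use the exchange with the smallest round trip; it is least distorted by queuing."""
    estimates = [estimate_offset(*ex) for ex in exchanges]
    offset, _ = min(estimates, key=lambda est: est[1])
    return offset

def target_position(follower_now: float, offset: float, leader_start: float,
                    start_position: float = 0.0, rate: float = 1.0) -> float:
    """Media position (seconds) this follower should be showing right now.

    leader_start is the leader-clock time at which playback of start_position began.
    """
    leader_now = follower_now + offset
    return start_position + (leader_now - leader_start) * rate
```

A follower would periodically compare `target_position(...)` with its player's actual position and seek, or slightly adjust its playback rate, when the difference exceeds a small threshold; that correction policy is likewise an assumption rather than the published design.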

ACTUI: Using Commodity Mobile Devices to Build Active Tangible User Interfaces

We present the prototype design for a novel user interface that extends the concept of tangible user interfaces from mostly specialized hardware components and studio deployments to commodity mobile devices in daily life. Our prototype enables mobile devices to be components of a tangible interface in which each device serves both as a touch-sensing display and as a tangible item for interaction. The only necessary modification is the attachment of a conductive 2D touch pattern to each device. Compared to existing approaches, our Active Commodity Tangible User Interfaces (ACTUI) can display graphical output directly on the devices' built-in displays. This opens the way to many innovative applications in which combining local and global active display areas can significantly enhance the flexibility and effectiveness of the interaction. We explore two example application scenarios that demonstrate the potential of ACTUI.
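
The abstract does not say how a device is recognized from its conductive touch pattern. Assuming each device carries a unique arrangement of contact points that the underlying touch surface reports as simultaneous touches, one simple identification scheme compares sorted pairwise distances, which do not change when the device is moved or rotated. The sketch below illustrates that idea only; the registry, the tolerance and all function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Optional, Sequence, Tuple

Point = Tuple[float, float]

def signature(points: Sequence[Point]) -> List[float]:
    """Sorted pairwise distances: invariant to translation and rotation of the pattern."""
    return sorted(math.dist(a, b) for a, b in combinations(points, 2))

def identify(points: Sequence[Point], registry: Dict[str, List[float]],
             tol: float = 2.0) -> Optional[str]:
    """Return the id of the registered pattern closest to the observed touches, or None.

    tol is in the same units as the touch coordinates.
    """
    sig = signature(points)
    if not sig:
        return None  # fewer than two touches cannot be matched
    best_id, best_err = None, math.inf
    for device_id, ref in registry.items():
        if len(ref) != len(sig):
            continue  # different number of contact points
        err = max(abs(a - b) for a, b in zip(sig, ref))
        if err < best_err:
            best_id, best_err = device_id, err
    return best_id if best_err <= tol else None

def centroid(points: Sequence[Point]) -> Point:
    """Position of the tangible device on the surface, taken as the pattern's centroid."""
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))
```

For example, with `registry = {"phone-A": signature([(0, 0), (30, 0), (0, 40)])}`, the call `identify([(100, 200), (100, 230), (60, 200)], registry)` returns `"phone-A"`, because the observed touches form the same 30/40/50 triangle rotated by 90 degrees and shifted. Note that mirror-image patterns share a signature, so a real pattern set would need to avoid them.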

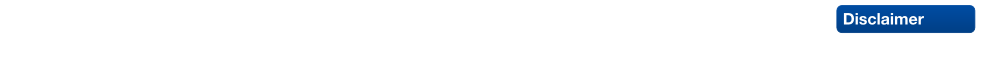

Influence of Temporal Delay and Display Update Rate in an Augmented Reality Application Scenario

In mobile augmented reality (AR) applications, highly complex computing tasks such as position tracking and 3D rendering compete for limited processing resources. This leads to unavoidable system latency in the form of temporal delay and reduced display update rates. In this paper, we present a user study on the influence of these system parameters in an AR point'n'click scenario. Our experiment was conducted in a lab environment to collect quantitative data (user performance as well as user-perceived ease of use). We show that temporal delay and update rate both affect user performance and experience, but that users are much more sensitive to longer temporal delay than to lower update rates. Moreover, we found that the effects of temporal delay and update rate are not independent: with longer temporal delay, changes in update rate tend to have less impact on ease of use. Furthermore, in some cases user performance can actually increase when the update rate is reduced to make it compatible with the latency. Our findings indicate that the development of mobile AR applications should put more emphasis on delay reduction than on update rate improvement, and that increasing the update rate does not necessarily improve user performance and experience if the temporal delay is significantly longer than the update interval.
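
The closing recommendation can be made concrete with a deliberately simple model that is our assumption, not the analysis used in the paper: every frame shows a state sampled `delay_ms` earlier and stays on screen for one update interval, so the displayed content is on average `delay + interval / 2` old. Under that model, the benefit of doubling the update rate shrinks as the delay grows. The model does not capture the paper's observation that lowering the update rate can sometimes help.

```python
def mean_content_age_ms(delay_ms: float, update_rate_hz: float) -> float:
    """Average age of the displayed content under the simple model described above."""
    interval_ms = 1000.0 / update_rate_hz
    return delay_ms + interval_ms / 2.0

def relative_gain(delay_ms: float, old_hz: float, new_hz: float) -> float:
    """Fractional reduction of mean content age when the update rate changes."""
    before = mean_content_age_ms(delay_ms, old_hz)
    after = mean_content_age_ms(delay_ms, new_hz)
    return (before - after) / before

# Doubling the update rate from 15 Hz to 30 Hz:
print(round(relative_gain(50, 15, 30), 3))   # 0.2   (50 ms delay: content 20% fresher)
print(round(relative_gain(500, 15, 30), 3))  # 0.031 (500 ms delay: only about 3%)
```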

@inproceedings{Li:2015:ITD:2836041.2836070,
  author = {Li, Ming and Arning, Katrin and Vervier, Luisa and Ziefle, Martina and Kobbelt, Leif},
  title = {Influence of Temporal Delay and Display Update Rate in an Augmented Reality Application Scenario},
  booktitle = {Proceedings of the 14th International Conference on Mobile and Ubiquitous Multimedia},
  series = {MUM '15},
  year = {2015},
  isbn = {978-1-4503-3605-5},
  location = {Linz, Austria},
  pages = {278--286},
  numpages = {9},
  url = {http://doi.acm.org/10.1145/2836041.2836070},
  doi = {10.1145/2836041.2836070},
  acmid = {2836070},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {display update rate, ease of use, latency, mobile augmented reality, perception tolerance, point'n'click, temporal delay, user study},
}

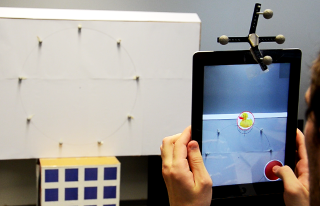

Evaluation of a Mobile Projector based Indoor Navigation Interface

In recent years, interest in pedestrian indoor navigation solutions and their potential applications has increased significantly. Whereas the majority of mobile indoor navigation aids visualize navigational information on mobile screens, the present study investigates the effectiveness of a mobile projector as a navigation aid that projects navigational information directly into the environment. A benchmark evaluation of the mobile projector-based indoor navigation interface was carried out, investigating combinations of navigation device (mobile projector vs. mobile screen) and navigation information (map vs. arrow) as well as the impact of users' spatial abilities. Results showed that the mobile screen was superior as a navigation aid and the map as a navigation information type. Users with low spatial abilities in particular benefited from this combination in both navigation performance and acceptance. Potential application scenarios and design implications for novel indoor navigation interfaces are derived from our findings.

ProFi: Design and Evaluation of a Product Finder in a Supermarket Scenario

This paper presents the design and evaluation of ProFi, a PROduct FInding assistant for a supermarket scenario. We explore the idea of micro-navigation in supermarkets and aim to enhance visual search in front of a shelf. To assess the concept, a prototype combining visual recognition techniques with an Augmented Reality (AR) interface is built. Two AR patterns (circle and spotlight) are designed to highlight target products. The prototype is formally evaluated in a controlled environment, and quantitative and qualitative data are collected to evaluate usability and user preference. The results show that ProFi significantly improves users’ product-finding performance, especially with the circle pattern, and that ProFi is well accepted by users.

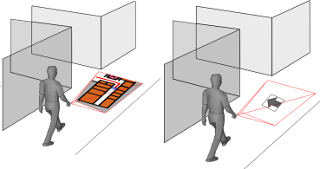

Dynamic Tiling Display: Building an Interactive Display Surface using Multiple Mobile Devices

Table display surfaces, like Microsoft PixelSense, can display multimedia content to a group of users simultaneously, but they are expensive and lack mobility. Mobile devices, in contrast, are readily available, but their limited screen size and resolution make them unsuitable for sharing multimedia data interactively. In this paper, we present the "Dynamic Tiling Display", an interactive display surface built from mobile devices. Our framework uses the integrated front-facing cameras of the mobile devices to estimate the relative poses of multiple mobile screens arbitrarily placed on a table. Using this framework, users can create a large virtual display on which multiple users can explore multimedia data interactively through separate windows (mobile screens). The major technical challenge is the calibration of the individual displays, which is solved by visual object recognition using the front-facing camera inputs.
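
Once calibration has produced a pose for every screen, each device still has to work out which part of the shared virtual display it should draw. The sketch below assumes the front-camera step (not shown) yields a 2D rigid pose per screen, namely the position of its top-left corner and its rotation in a common table frame measured in millimetres; `ScreenPose` and both functions are hypothetical names. Converting millimetres to pixels would additionally require each screen's pixel density.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class ScreenPose:
    x_mm: float       # top-left corner of the screen in the shared table frame
    y_mm: float
    theta: float      # rotation of the screen relative to the table frame, radians
    width_mm: float
    height_mm: float

def screen_corners_on_canvas(pose: ScreenPose) -> List[Tuple[float, float]]:
    """Corners of the screen in canvas (table) coordinates: p = t + R(theta) * u."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    local = [(0.0, 0.0), (pose.width_mm, 0.0),
             (pose.width_mm, pose.height_mm), (0.0, pose.height_mm)]
    return [(pose.x_mm + c * u - s * v, pose.y_mm + s * u + c * v) for u, v in local]

def canvas_to_screen(pose: ScreenPose, px: float, py: float) -> Tuple[float, float]:
    """Inverse rigid transform: u = R(-theta) * (p - t), in screen millimetres."""
    dx, dy = px - pose.x_mm, py - pose.y_mm
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (c * dx + s * dy, -s * dx + c * dy)
```

A device could take the bounding box of `screen_corners_on_canvas(pose)` to decide which canvas tiles to fetch, then use `canvas_to_screen` to place content inside its own window.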

Best Paper Award at MUM '12

Insights into user experiences and acceptance of mobile indoor navigation devices

Location-based services, which can be applied in navigation systems, are a key application in mobile and ubiquitous computing. Combined with indoor localization techniques, pico projectors can be used for navigation purposes to augment the environment with navigation information. In the present empirical study (n = 24), we explore users’ perceptions, workload and navigation performance when navigating with a mobile projector compared to a mobile screen as an indoor navigation interface. To capture user perceptions and to predict acceptance using structural equation modeling, we assessed perceived disorientation, privacy concerns, trust, ease of use, usefulness, and sources of visibility problems. Moreover, we analyzed the impact of user factors (spatial abilities, technical self-efficacy, familiarity) on acceptance. The structural models exhibited adequate predictive and psychometric properties. Based on real user experience, they clearly revealed a) similarities and device-specific differences in navigation device acceptance, b) the role of specific user experiences (visibility, trust, and disorientation) during navigation device usage, and c) the underlying relationships between determinants of user acceptance. Practical implications of the results and future research questions are discussed.

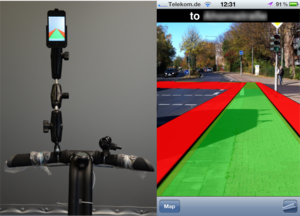

The Design of a Segway AR-Tactile Navigation System

A Segway is often used to transport a user across mid-range distances in urban environments. It has more degrees of freedom than a car or bicycle and is faster than walking. However, navigation systems designed specifically for the Segway have not yet been researched. Existing navigation systems are tailored to car drivers or pedestrians, and using them on a Segway can increase the driver’s cognitive workload and create safety risks. In this paper, we present a Segway AR-Tactile navigation system, in which we visualize the route through an Augmented Reality interface displayed on a mobile phone. Turning instructions are presented to the driver via vibro-tactile actuators attached to the handlebar, with multiple vibro-tactile patterns encoding different navigation instructions. We evaluate the system both in real traffic and in an artificial environment. Our results show that the AR interface significantly reduces users’ subjective workload, and that the vibro-tactile patterns are perceived correctly and greatly improve driving performance.
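
The abstract does not specify the vibro-tactile patterns themselves. As an illustration only, the sketch below derives a signed turn angle at the next route waypoint and maps it to a hypothetical handlebar cue: the actuator side indicates the direction and the pulse count distinguishes normal from sharp turns. Coordinates are assumed to be planar (x east, y north), and the thresholds are made-up defaults, not values from the study.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]

def turn_angle_deg(prev: Point, node: Point, nxt: Point) -> float:
    """Signed angle between incoming and outgoing route segments.

    Positive means a left turn, negative a right turn (x east, y north).
    """
    ax, ay = node[0] - prev[0], node[1] - prev[1]
    bx, by = nxt[0] - node[0], nxt[1] - node[1]
    cross = ax * by - ay * bx
    dot = ax * bx + ay * by
    return math.degrees(math.atan2(cross, dot))

def tactile_pattern(angle_deg: float, straight_tol: float = 20.0,
                    sharp: float = 100.0) -> Optional[Tuple[str, int]]:
    """Hypothetical cue: (actuator side, number of pulses), or None to keep straight."""
    if abs(angle_deg) < straight_tol:
        return None
    side = "left" if angle_deg > 0 else "right"
    pulses = 2 if abs(angle_deg) >= sharp else 1
    return (side, pulses)
```

For a route heading north that turns west at the next waypoint, `turn_angle_deg((0, 0), (0, 10), (-10, 10))` is about 90 degrees and `tactile_pattern` yields `("left", 1)`.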

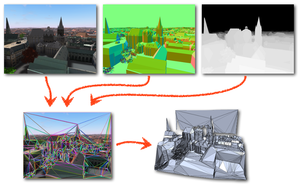

Pseudo-Immersive Real-Time Display of 3D Scenes on Mobile Devices

Displaying complex 3D scenes in real time on mobile devices is difficult due to insufficient data throughput and relatively weak graphics performance. Hence, we propose a client-server system in which the complex scene is processed on a server and the resulting data is streamed to the mobile device. To cope with low transmission bitrates, the server sends new data only at a rate of about 2 Hz. However, instead of sending plain framebuffers, the server decomposes the geometry represented by the current view's depth profile into a small set of textured polygons. This processing does not require knowledge of the geometry in the scene, so the output of a time-of-flight camera can be handled as well. The 2.5D representation of the current frame allows the mobile device to render plausibly distorted views of the scene at high frame rates as long as the viewing direction does not change too much before the next frame arrives from the server. To further enhance the visual experience, we use the mobile device's built-in camera or gyroscope to detect the spatial relation between the user's face and the device, so that the camera view can be changed accordingly. This produces a pseudo-immersive visual effect. Besides designing the overall system with a render server, a 3D display client, and real-time face/pose detection, our main technical contribution is a highly efficient algorithm that decomposes a frame buffer with per-pixel depth and normal information into a small set of planar regions, which can be textured with the current frame. This representation is simple enough for real-time display on today's mobile devices.
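
The decomposition algorithm itself is not detailed in the abstract. One building block such a decomposition could use is a test of whether a candidate pixel region is planar. For a pinhole camera, the inverse depth 1/z of points on a 3D plane is an affine function of the pixel coordinates (u, v), so the sketch fits 1/z = a·u + b·v + c by least squares and checks the residuals. It uses depth only (ignoring the per-pixel normals the paper mentions) and is not the paper's algorithm; the names and tolerance are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # (u, v, depth) for one pixel

def _det3(m: List[List[float]]) -> float:
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def _solve3(m: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a 3x3 linear system with Cramer's rule."""
    d = _det3(m)
    if abs(d) < 1e-12:
        raise ValueError("degenerate region (e.g. all pixels on one line)")
    result = []
    for col in range(3):
        mc = [row[:] for row in m]
        for r in range(3):
            mc[r][col] = rhs[r]
        result.append(_det3(mc) / d)
    return result

def fit_inverse_depth_plane(samples: Sequence[Sample]) -> Tuple[float, float, float]:
    """Least-squares fit of 1/depth = a*u + b*v + c via the normal equations."""
    ata = [[0.0] * 3 for _ in range(3)]
    atb = [0.0] * 3
    for u, v, z in samples:
        row = (u, v, 1.0)
        w = 1.0 / z
        for i in range(3):
            atb[i] += row[i] * w
            for j in range(3):
                ata[i][j] += row[i] * row[j]
    a, b, c = _solve3(ata, atb)
    return a, b, c

def is_planar(samples: Sequence[Sample], coeffs: Tuple[float, float, float],
              tol: float) -> bool:
    """True if every pixel's inverse depth lies within tol of the fitted plane."""
    a, b, c = coeffs
    return all(abs(a * u + b * v + c - 1.0 / z) <= tol for u, v, z in samples)
```

A region-growing pass could repeatedly add neighbouring pixels to a region while `is_planar` still holds, then texture each accepted region with the current frame.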

Ad-Hoc Multi-Displays for Mobile Interactive Applications

We present a framework that combines different mobile devices into one multi-display, so that visual content can be shown on a larger area consisting, e.g., of several mobile phones placed arbitrarily on a table. Our system allows the user to perform multi-touch interaction metaphors, even across different devices, and it guarantees proper synchronization of the individual displays with low latency. Hence, from the user’s perspective, the heterogeneous collection of mobile devices acts like one single display and input device. From the system perspective, the major technical and algorithmic challenges lie in the co-calibration of the individual displays and in the low-latency synchronization and communication of user events. For the calibration, we estimate the relative positioning of the displays by visual object recognition and an optional manual calibration step.
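
To make several devices behave like one input surface, touches reported in each device's local screen coordinates have to be mapped into a shared frame before gestures spanning devices can be recognized. The sketch assumes the calibration step has already given each device a 2D pose (origin and rotation on the table, in millimetres) and computes a two-finger pinch whose fingers land on different devices. The pose format and function names are hypothetical, and event transport and synchronization are not shown.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x, y, theta): device origin and rotation in table coordinates
Point = Tuple[float, float]

def to_global(pose: Pose, touch: Point) -> Point:
    """Map a touch from a device's local screen frame into the shared table frame."""
    x, y, theta = pose
    u, v = touch
    c, s = math.cos(theta), math.sin(theta)
    return (x + c * u - s * v, y + s * u + c * v)

def pinch_scale(pose_a: Pose, start_a: Point, now_a: Point,
                pose_b: Pose, start_b: Point, now_b: Point) -> float:
    """Zoom factor of a pinch with one finger on device A and one on device B."""
    d_start = math.dist(to_global(pose_a, start_a), to_global(pose_b, start_b))
    d_now = math.dist(to_global(pose_a, now_a), to_global(pose_b, now_b))
    return d_now / d_start
```

With device B lying 100 mm to the right of A and rotated by 180 degrees, `pinch_scale((0, 0, 0), (40, 10), (30, 10), (100, 0, math.pi), (40, -10), (30, -10))` evaluates to approximately 2.0: the fingers start 20 mm apart in the shared frame and end 40 mm apart.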