Prof. Dr. Leif Kobbelt

Publications

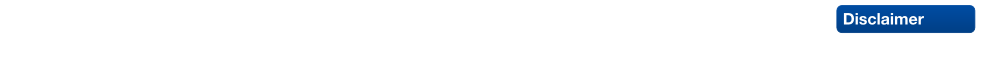

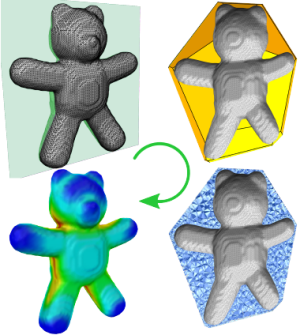

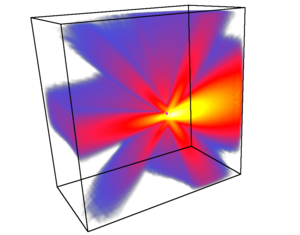

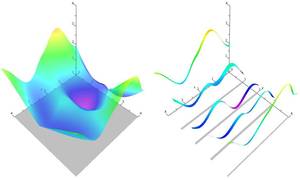

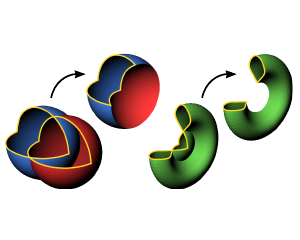

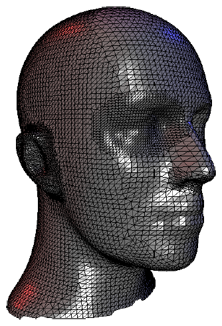

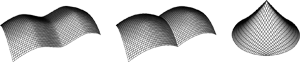

Neural Implicit Shape Editing Using Boundary Sensitivity

Neural fields are receiving increased attention as a geometric representation due to their ability to compactly store detailed and smooth shapes and easily undergo topological changes. Compared to classic geometry representations, however, neural representations do not allow the user to exert intuitive control over the shape. Motivated by this, we leverage boundary sensitivity to express how perturbations in parameters move the shape boundary. This allows us to interpret the effect of each learnable parameter and study achievable deformations. With this, we perform geometric editing: finding a parameter update that best approximates a globally prescribed deformation. Prescribing the deformation only locally allows the rest of the shape to change according to some prior, such as semantics or deformation rigidity. Our method is agnostic to the model and its training and updates the NN in-place. Furthermore, we show how boundary sensitivity helps to optimize and constrain objectives (such as surface area and volume), which are difficult to compute without first converting to another representation, such as a mesh.
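
As a rough illustration of what boundary sensitivity expresses, the following sketch states the standard first-order level-set relation. It assumes the shape boundary is the zero level set of a network $f(x;\theta)$; the symbols $f$, $\theta$, $\delta\theta$, $\delta x_n$ and $\delta\bar{x}_n$ are notation chosen here for illustration and need not match the paper's.

```latex
% Boundary: \partial\Omega = \{ x : f(x;\theta) = 0 \}.
% A perturbed point must stay on the perturbed boundary, so to first order
\nabla_x f(x;\theta)\cdot\delta x + \nabla_\theta f(x;\theta)\cdot\delta\theta = 0 .
% Only the normal component of \delta x changes the shape. With
% n = \nabla_x f / \lVert\nabla_x f\rVert and \delta x = \delta x_n\, n :
\delta x_n = -\,\frac{\nabla_\theta f(x;\theta)\cdot\delta\theta}{\lVert\nabla_x f(x;\theta)\rVert} .
% Editing toward a prescribed normal displacement \delta\bar{x}_n can then be
% posed as a least-squares problem over the boundary:
\min_{\delta\theta}\ \int_{\partial\Omega}
  \bigl(\delta x_n(x;\delta\theta) - \delta\bar{x}_n(x)\bigr)^2 \,\mathrm{d}x .
```

Because $\delta x_n$ is linear in $\delta\theta$, the least-squares problem above is linear in this first-order setting; any regularization or prior on the unconstrained part of the shape would enter as additional terms.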

@misc{berzins2023neural,

title={Neural Implicit Shape Editing using Boundary Sensitivity},

author={Arturs Berzins and Moritz Ibing and Leif Kobbelt},

year={2023},

eprint={2304.12951},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

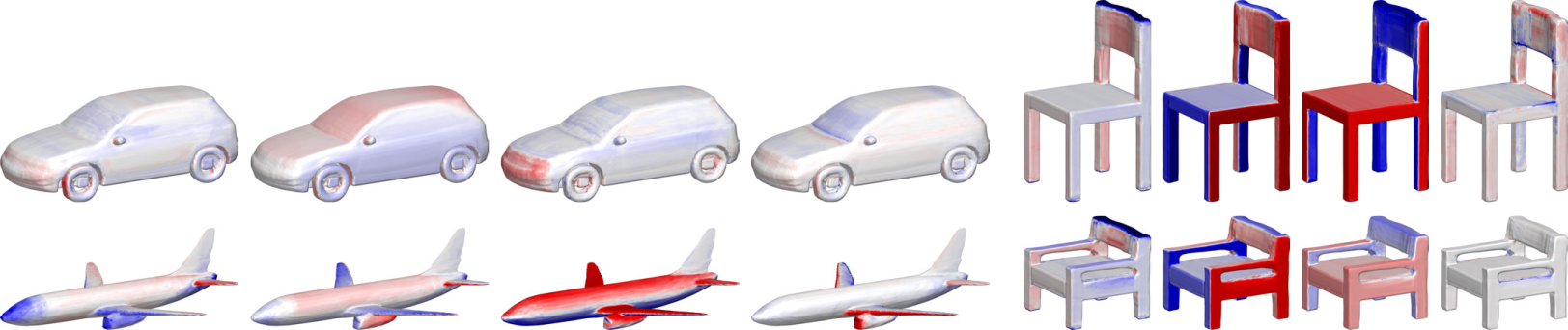

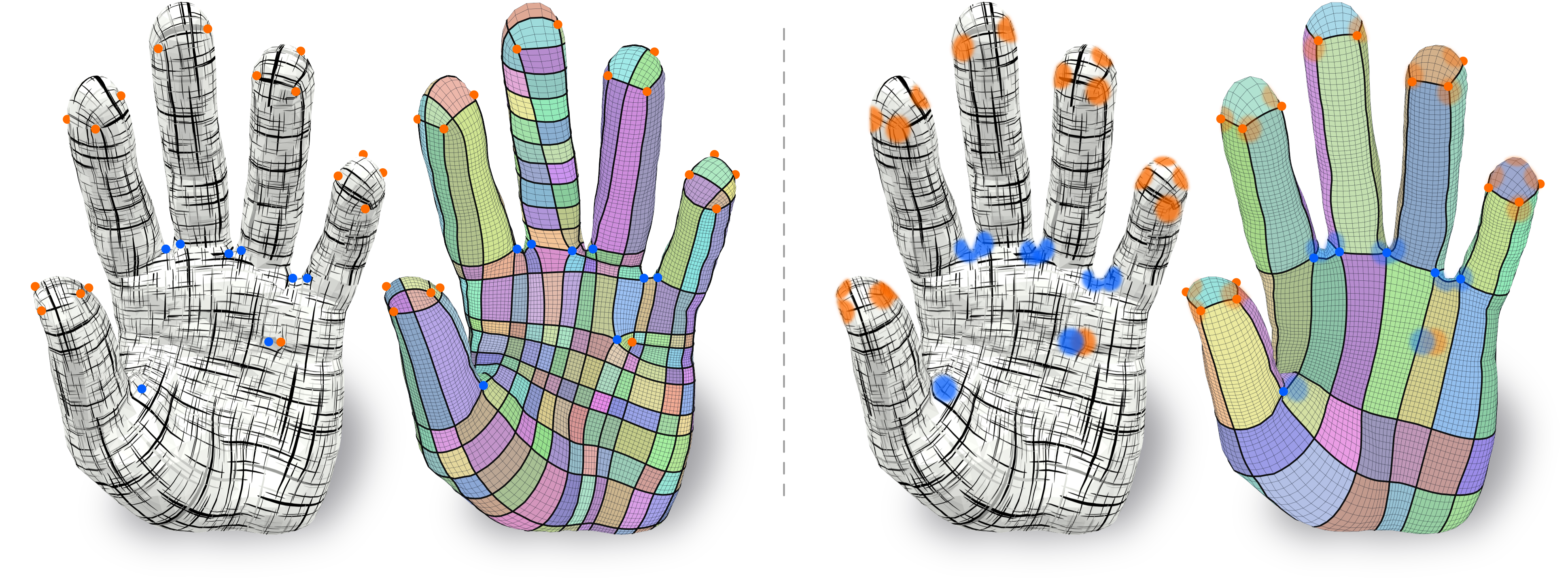

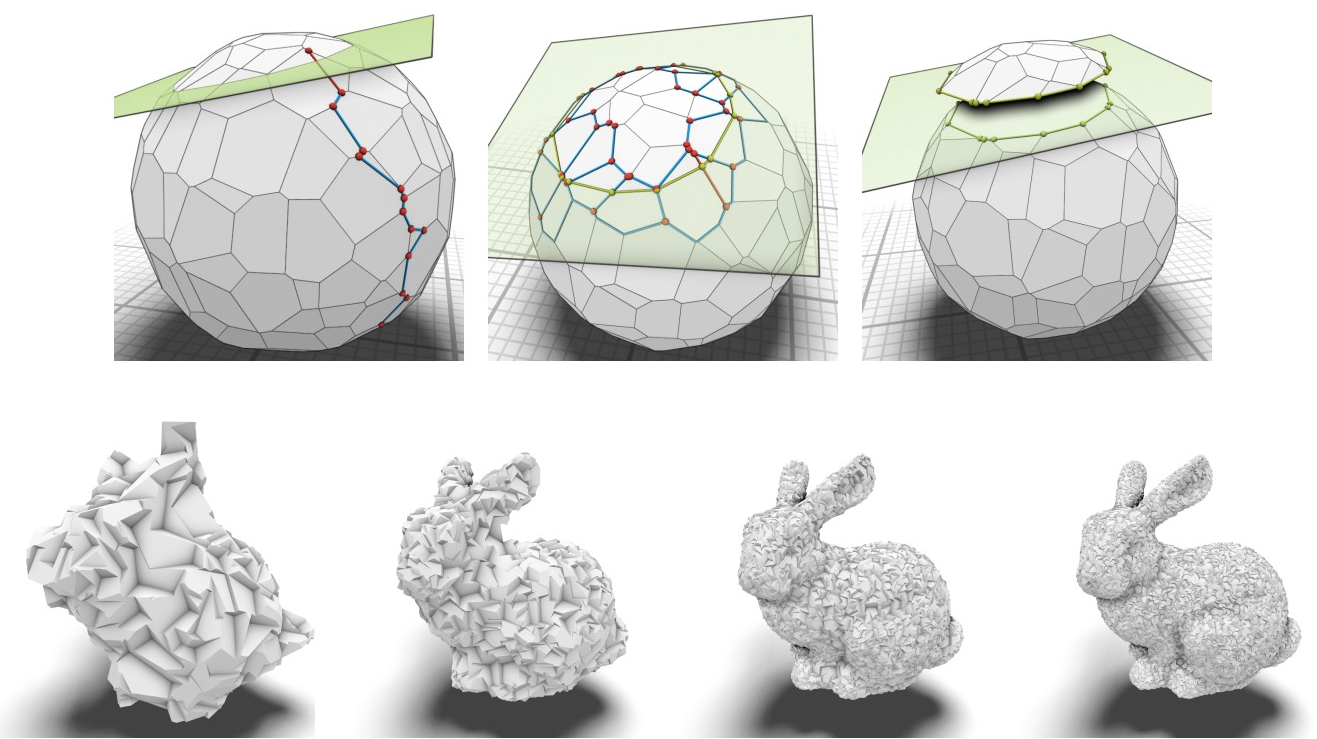

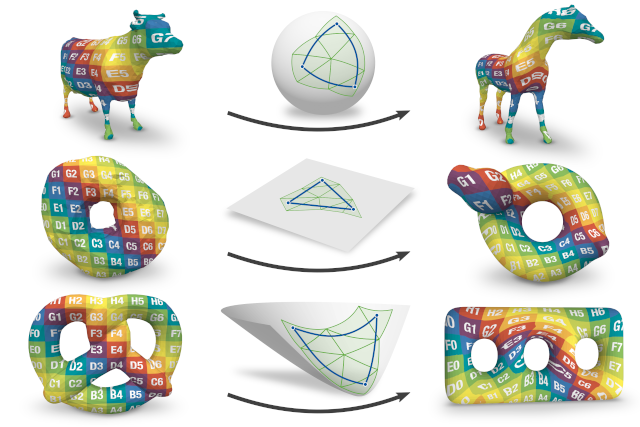

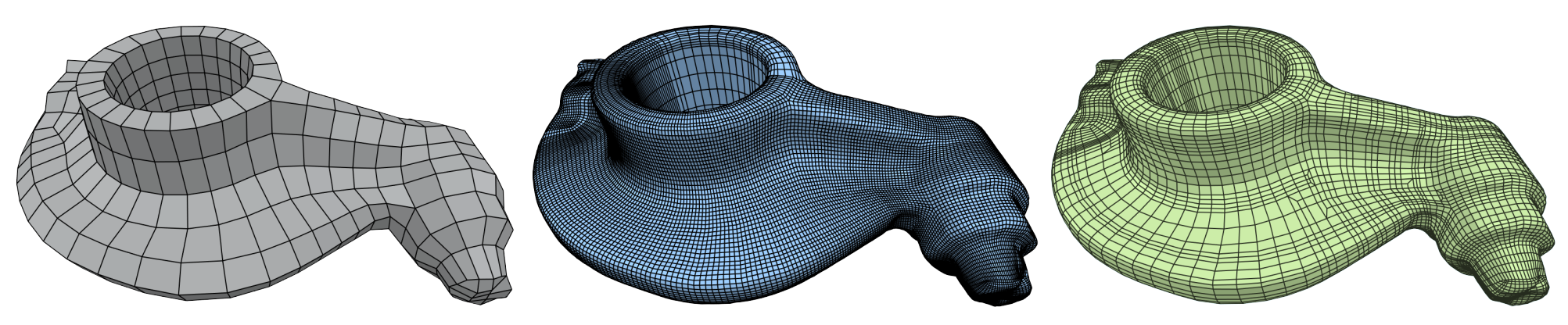

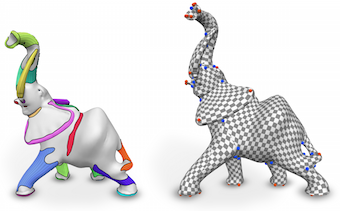

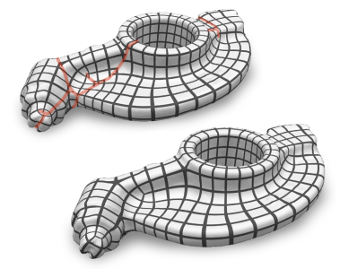

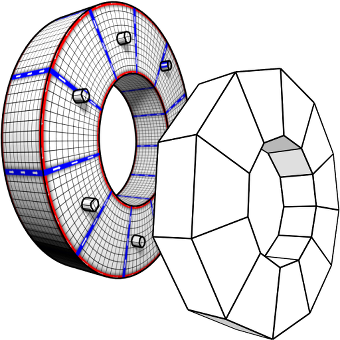

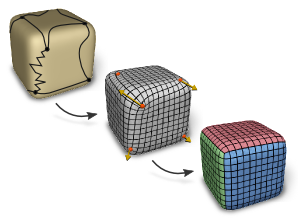

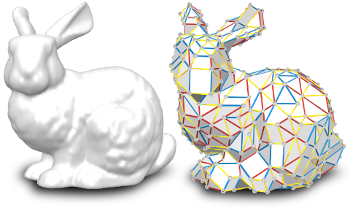

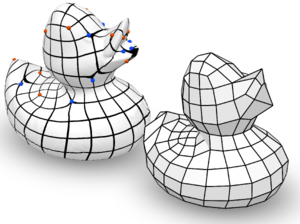

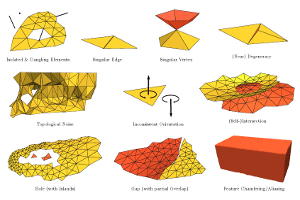

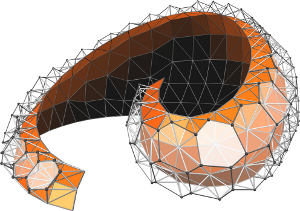

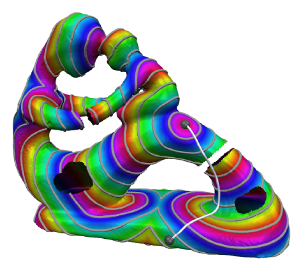

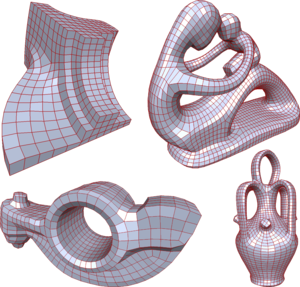

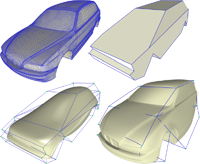

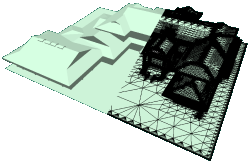

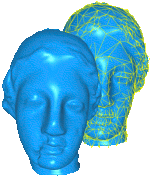

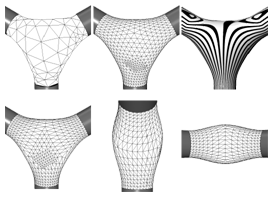

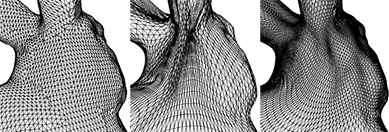

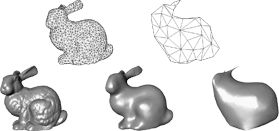

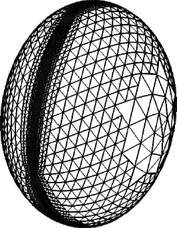

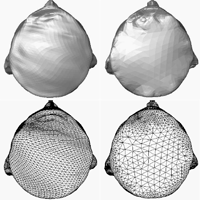

Surface Maps via Adaptive Triangulations

We present a new method to compute continuous and bijective maps (surface homeomorphisms) between two or more genus-0 triangle meshes. In contrast to previous approaches, we decouple the resolution at which a map is represented from the resolution of the input meshes. We discretize maps via common triangulations that approximate the input meshes while remaining in bijective correspondence to them. Both the geometry and the connectivity of these triangulations are optimized with respect to a single objective function that simultaneously controls mapping distortion, triangulation quality, and approximation error. A discrete-continuous optimization algorithm performs both energy-based remeshing as well as global second-order optimization of vertex positions, parametrized via the sphere. With this, we combine the disciplines of compatible remeshing and surface map optimization in a unified formulation and make a contribution in both fields. While existing compatible remeshing algorithms often operate on a fixed pre-computed surface map, we can now globally update this correspondence during remeshing. On the other hand, bijective surface-to-surface map optimization previously required computing costly overlay meshes that are inherently tied to the input mesh resolution. We achieve significant complexity reduction by instead assessing distortion between the approximating triangulations. This new map representation is inherently more robust than previous overlay-based approaches, is less intricate to implement, and naturally supports mapping between more than two surfaces. Moreover, it enables adaptive multi-resolution schemes that, e.g., first align corresponding surface regions at coarse resolutions before refining the map where needed. We demonstrate significant speedups and increased flexibility over state-of-the-art mapping algorithms at similar map quality, and also provide a reference implementation of the method.

@article{schmidt2023surface,

title={Surface Maps via Adaptive Triangulations},

author={Schmidt, Patrick and Pieper, D\"orte and Kobbelt, Leif},

year={2023},

journal={Computer Graphics Forum},

volume={42},

number={2},

}

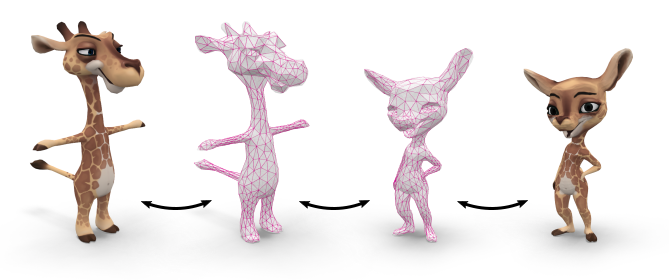

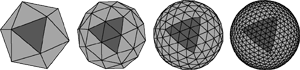

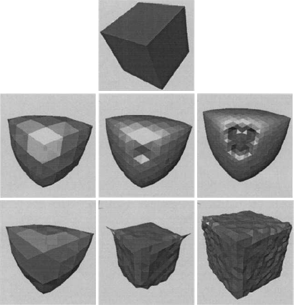

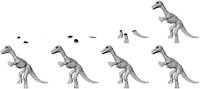

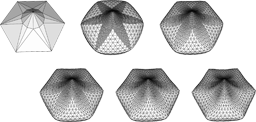

Octree Transformer: Autoregressive 3D Shape Generation on Hierarchically Structured Sequences

Autoregressive models have proven to be very powerful in NLP text generation tasks and lately have gained popularity for image generation as well. However, they have seen limited use for the synthesis of 3D shapes so far. This is mainly due to the lack of a straightforward way to linearize 3D data as well as to scaling problems with the length of the resulting sequences when describing complex shapes. In this work we address both of these problems. We use octrees as a compact hierarchical shape representation that can be sequentialized by traversal ordering. Moreover, we introduce an adaptive compression scheme that significantly reduces sequence lengths and thus enables their effective generation with a transformer, while still allowing fully autoregressive sampling and parallel training. We demonstrate the performance of our model by performing superresolution and comparing against the state of the art in shape generation.
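
To illustrate how an octree can be turned into a token sequence by traversal ordering, here is a minimal Python sketch. It assumes a cubic boolean voxel grid whose side length is a power of two, a breadth-first traversal, and three hypothetical token values (`EMPTY`, `MIXED`, `FULL`); the paper's actual token vocabulary, traversal order and compression scheme are not reproduced here.

```python
from collections import deque

# Hypothetical token values, chosen for this sketch only.
EMPTY, MIXED, FULL = 0, 1, 2


def classify(grid, x, y, z, size):
    """Return EMPTY, FULL or MIXED for the cubic block starting at (x, y, z)."""
    seen = set()
    for i in range(x, x + size):
        for j in range(y, y + size):
            for k in range(z, z + size):
                seen.add(bool(grid[i][j][k]))
                if len(seen) == 2:  # both occupied and free voxels found
                    return MIXED
    return FULL if True in seen else EMPTY


def linearize(grid):
    """Breadth-first token sequence of the octree over a cubic 2^k grid."""
    tokens = []
    queue = deque([(0, 0, 0, len(grid))])
    while queue:
        x, y, z, size = queue.popleft()
        token = classify(grid, x, y, z, size)
        tokens.append(token)
        if token == MIXED:  # only mixed nodes are subdivided further
            half = size // 2
            for dx in (0, half):
                for dy in (0, half):
                    for dz in (0, half):
                        queue.append((x + dx, y + dy, z + dz, half))
    return tokens


# A 2x2x2 grid with a single occupied voxel at the origin.
grid = [[[False, False], [False, False]], [[False, False], [False, False]]]
grid[0][0][0] = True
sequence = linearize(grid)  # root is MIXED, followed by its eight children
```

Since homogeneous blocks are never subdivided, large empty or full regions cost a single token, which is what makes the hierarchical sequence compact compared to a flat voxel listing.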

@inproceedings{ibing_octree,

author = {Moritz Ibing and

Gregor Kobsik and

Leif Kobbelt},

title = {Octree Transformer: Autoregressive 3D Shape Generation on Hierarchically Structured Sequences},

booktitle = {{IEEE/CVF} Conference on Computer Vision and Pattern Recognition Workshops,

{CVPR} Workshops 2023},

publisher = {{IEEE}},

year = {2023},

}

Localized Latent Updates for Fine-Tuning Vision-Language Models

Although massive pre-trained vision-language models like CLIP show impressive generalization capabilities for many tasks, it often remains necessary to fine-tune them for improved performance on specific datasets. When doing so, it is desirable that updating the model is fast and that the model does not lose its capabilities on data outside of the dataset, as is often the case with classical fine-tuning approaches. In this work we suggest a lightweight adapter that only updates the model's predictions close to seen datapoints. We demonstrate the effectiveness and speed of this relatively simple approach in the context of few-shot learning, where our results both on classes seen and unseen during training are comparable with or improve on the state of the art.

@inproceedings{ibing_localized,

author = {Moritz Ibing and

Isaak Lim and

Leif Kobbelt},

title = {Localized Latent Updates for Fine-Tuning Vision-Language Models},

booktitle = {{IEEE/CVF} Conference on Computer Vision and Pattern Recognition Workshops,

{CVPR} Workshops 2023},

publisher = {{IEEE}},

year = {2023},

}

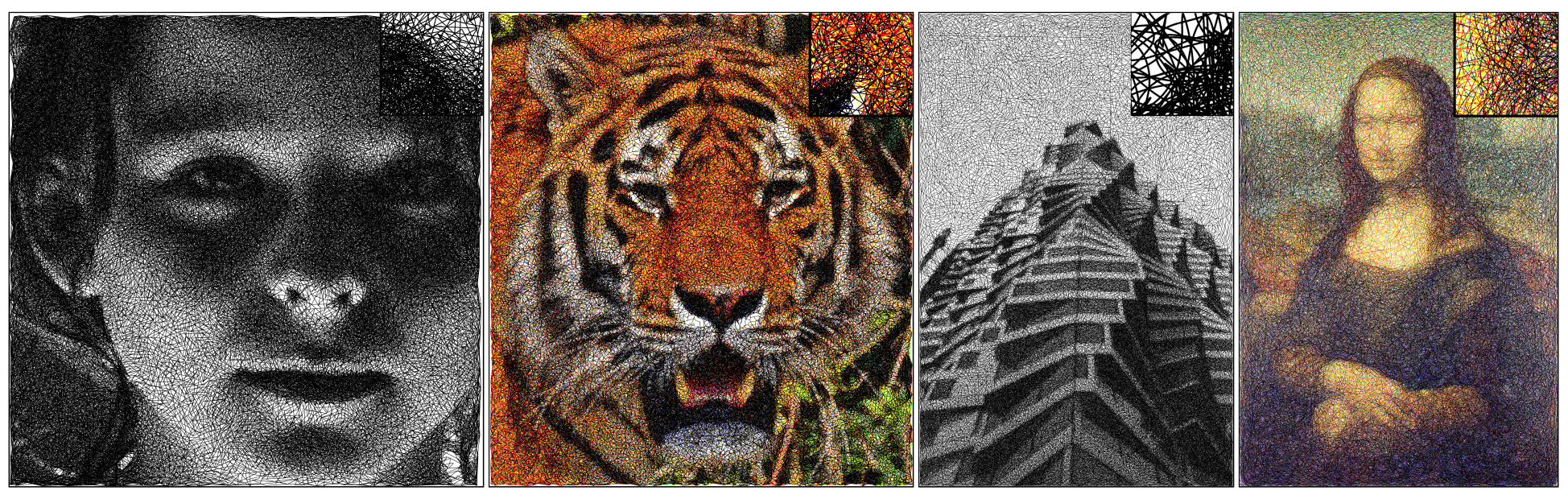

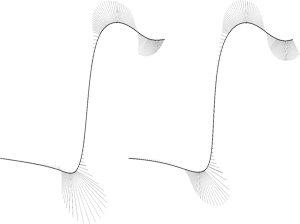

Greedy Image Approximation for Artwork Generation via Contiguous Bézier Segments

The automatic creation of digital art has a long history in computer graphics. In this work, we focus on approximating input images to mimic artwork by the artist Kumi Yamashita, as well as the popular scribble art style. Both have in common that the artists create the works by using a single, contiguous thread (Yamashita) or stroke (scribble) that is placed seemingly at random when viewed at close range, but perceived as a tone-mapped picture when viewed from a distance. Our approach takes a rasterized image as input and creates a single, connected path by iteratively sampling a set of candidate segments that extend the current path and greedily selecting the best one. The candidates are sampled according to art style specific constraints, i.e. conforming to continuity constraints in the mathematical sense for the scribble art style. To model the perceptual discrepancy between close and far viewing distances, we minimize the difference between the input image and the image created by rasterizing our path after applying the contrast sensitivity function, which models how human vision blurs images when viewed from a distance. Our approach generalizes to colored images by using one path per color. We evaluate our approach on a wide range of input images and show that it is able to achieve good results for both art styles in grayscale and color.

@inproceedings{nehringwirxel2023greedy,

title={Greedy Image Approximation for Artwork Generation via Contiguous B{\'{e}}zier Segments},

author={Nehring-Wirxel, Julius and Lim, Isaak and Kobbelt, Leif},

booktitle={28th International Symposium on Vision, Modeling, and Visualization, VMV 2023},

year={2023}

}

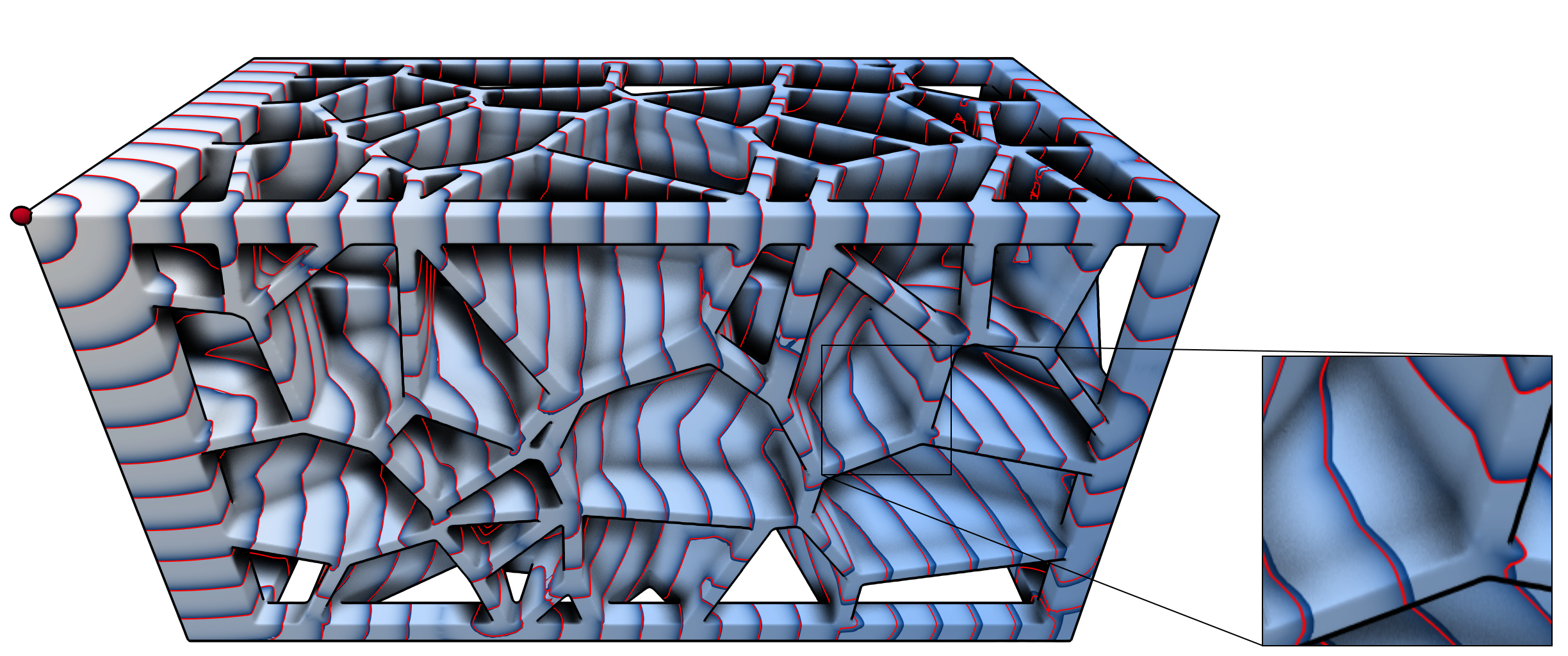

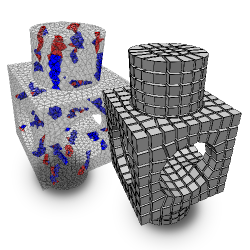

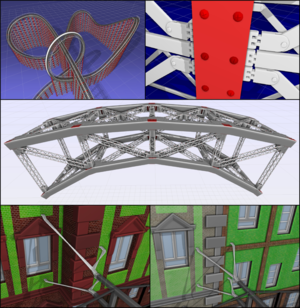

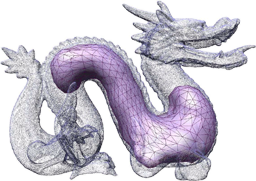

EMBER: Exact Mesh Booleans via Efficient & Robust Local Arrangements

Boolean operators are an essential tool in a wide range of geometry processing and CAD/CAM tasks. We present a novel method, EMBER, to compute Boolean operations on polygon meshes which is exact, reliable, and highly performant at the same time. Exactness is guaranteed by using a plane-based representation for the input meshes along with recently introduced homogeneous integer coordinates. Reliability and robustness emerge from a formulation of the algorithm via generalized winding numbers and mesh arrangements. High performance is achieved by avoiding the (pre-)construction of a global acceleration structure. Instead, our algorithm performs an adaptive recursive subdivision of the scene’s bounding box while generating and tracking all required data on the fly. By leveraging a number of early-out termination criteria, we can avoid the generation and inspection of regions that do not contribute to the output. With a careful implementation and a work-stealing multi-threading architecture, we are able to compute Boolean operations between meshes with millions of triangles at interactive rates. We run an extensive evaluation on the Thingi10K dataset to demonstrate that our method outperforms state-of-the-art algorithms, even inexact ones like QuickCSG, by orders of magnitude.

If you are interested in a binary implementation including various additional features, please contact the authors. Contact: trettner@shapedcode.com

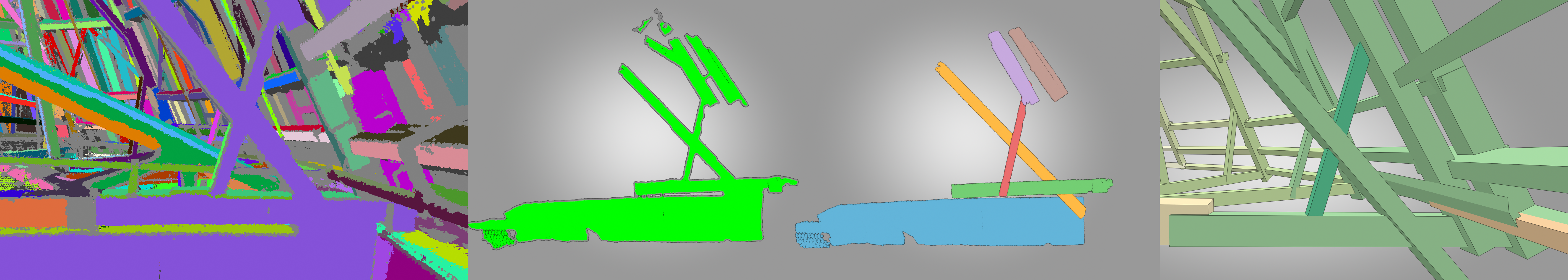

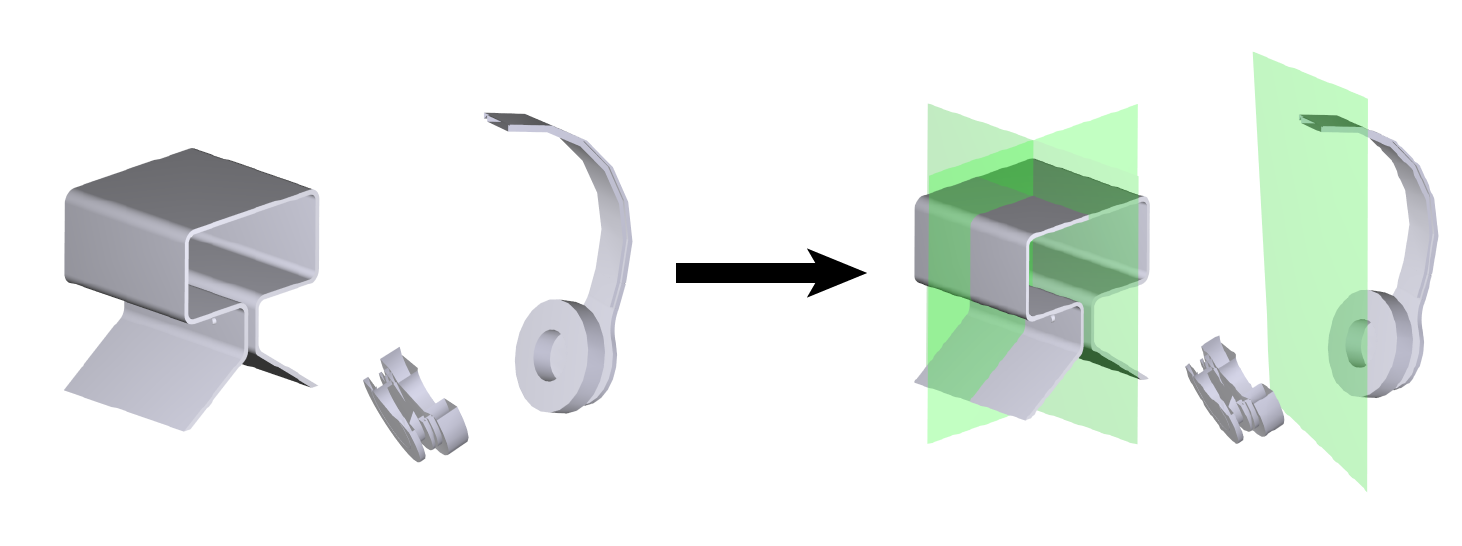

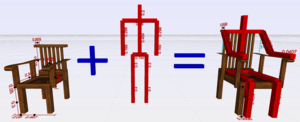

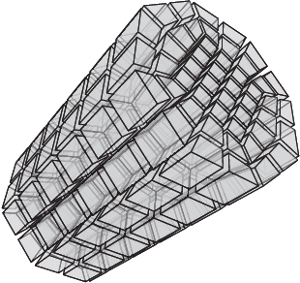

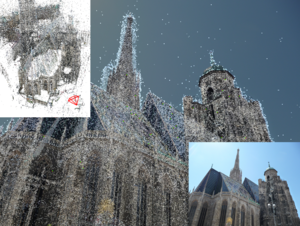

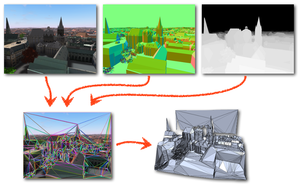

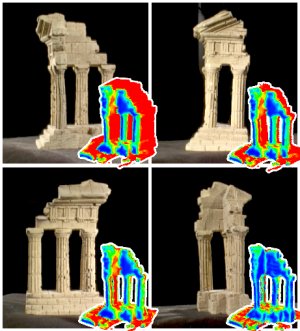

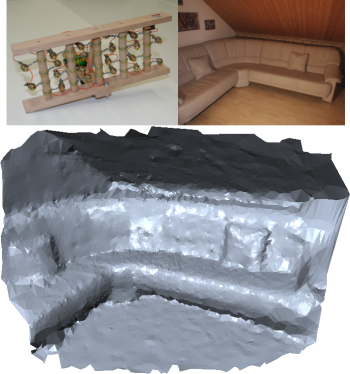

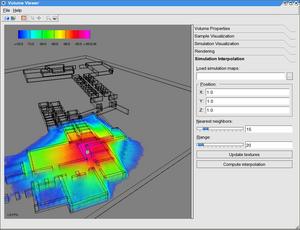

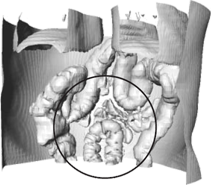

Scan2FEM: From Point Clouds to Structured 3D Models Suitable for Simulation

Preservation of cultural heritage is important to prevent singular objects or sites of cultural importance from decaying. One aspect of preservation is the creation of a digital twin. In case of a catastrophic event, this twin can be used to support repairs or reconstruction, in order to stay faithful to the original object or site. Certain activities that prolong such an object's lifetime may involve adding or replacing structural support elements to prevent a collapse. We propose an automatic method that is capable of transforming a point cloud into a geometric representation that is suitable for structural analysis. We robustly find cuboids and their connections in a point cloud to approximate the wooden beam structure contained inside. We export the necessary information to perform structural analysis, using the example of the timber attic of the UNESCO World Heritage Aachen Cathedral. We provide an evaluation of the resulting cuboids’ quality and show how a user can interactively refine the cuboids in order to improve the approximated model, and consequently the simulation results.

@inproceedings {10.2312:gch.20221215,

booktitle = {Eurographics Workshop on Graphics and Cultural Heritage},

editor = {Ponchio, Federico and Pintus, Ruggero},

title = {{Scan2FEM: From Point Clouds to Structured 3D Models Suitable for Simulation}},

author = {Selman, Zain and Musto, Juan and Kobbelt, Leif},

year = {2022},

publisher = {The Eurographics Association},

ISSN = {2312-6124},

ISBN = {978-3-03868-178-6},

DOI = {10.2312/gch.20221215}

}

TinyAD: Automatic Differentiation in Geometry Processing Made Simple

Non-linear optimization is essential to many areas of geometry processing research. However, when experimenting with different problem formulations or when prototyping new algorithms, a major practical obstacle is the need to figure out derivatives of objective functions, especially when second-order derivatives are required. Deriving and manually implementing gradients and Hessians is both time-consuming and error-prone. Automatic differentiation techniques address this problem, but can introduce a diverse set of obstacles themselves, e.g. limiting the set of supported language features, imposing restrictions on a program's control flow, incurring a significant run time overhead, or making it hard to exploit sparsity patterns common in geometry processing. We show that for many geometric problems, in particular on meshes, the simplest form of forward-mode automatic differentiation is not only the most flexible, but also actually the most efficient choice. We introduce TinyAD: a lightweight C++ library that automatically computes gradients and Hessians, in particular of sparse problems, by differentiating small (tiny) sub-problems. Its simplicity enables easy integration; no restrictions on, e.g., looping and branching are imposed. TinyAD provides the basic ingredients to quickly implement first and second order Newton-style solvers, allowing for flexible adjustment of both problem formulations and solver details. By showcasing compact implementations of methods from parametrization, deformation, and direction field design, we demonstrate how TinyAD lowers the barrier to exploring non-linear optimization techniques. This enables not only fast prototyping of new research ideas, but also improves replicability of existing algorithms in geometry processing. TinyAD is available to the community as an open source library.
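
The idea of forward-mode automatic differentiation on small sub-problems can be sketched in a few lines. The example below is written in Python for brevity and is not TinyAD's C++ API: the `Dual` class, the `variables` helper and the `edge_energy` function are hypothetical names introduced here, and only first derivatives are tracked (TinyAD additionally provides Hessians).

```python
class Dual:
    """A value together with its gradient, propagated via the chain rule."""

    def __init__(self, val, grad):
        self.val = val
        self.grad = list(grad)

    @staticmethod
    def _lift(other, n):
        # Treat plain numbers as constants with a zero gradient.
        return other if isinstance(other, Dual) else Dual(other, [0.0] * n)

    def __add__(self, other):
        o = Dual._lift(other, len(self.grad))
        return Dual(self.val + o.val, [a + b for a, b in zip(self.grad, o.grad)])

    __radd__ = __add__

    def __sub__(self, other):
        o = Dual._lift(other, len(self.grad))
        return Dual(self.val - o.val, [a - b for a, b in zip(self.grad, o.grad)])

    def __mul__(self, other):
        o = Dual._lift(other, len(self.grad))
        # Product rule: (uv)' = u'v + uv'
        return Dual(self.val * o.val,
                    [a * o.val + self.val * b for a, b in zip(self.grad, o.grad)])

    __rmul__ = __mul__


def variables(values):
    """Seed each input with a unit gradient in its own slot."""
    n = len(values)
    return [Dual(v, [1.0 if i == j else 0.0 for j in range(n)])
            for i, v in enumerate(values)]


def edge_energy(p, q):
    """Squared length of the 2D edge (p, q): one small per-element term."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    return dx * dx + dy * dy


# Differentiate the energy of one edge with respect to its four coordinates.
px, py, qx, qy = variables([0.0, 0.0, 3.0, 4.0])
e = edge_energy((px, py), (qx, qy))
# e.val is the energy, e.grad its gradient w.r.t. (px, py, qx, qy)
```

Each element only depends on a handful of variables, so its local gradient is short and dense; a sparse global gradient would be assembled by scattering these per-element results into the entries of the variables involved.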

- Best Paper Award (1st place) at SGP 2022

- Graphics Replicability Stamp

@article{schmidt2022tinyad,

title={{TinyAD}: Automatic Differentiation in Geometry Processing Made Simple},

author={Schmidt, Patrick and Born, Janis and Bommes, David and Campen, Marcel and Kobbelt, Leif},

year={2022},

journal={Computer Graphics Forum},

volume={41},

number={5},

}

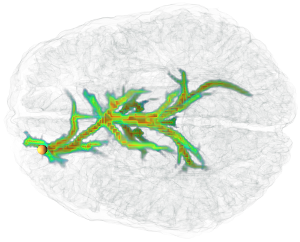

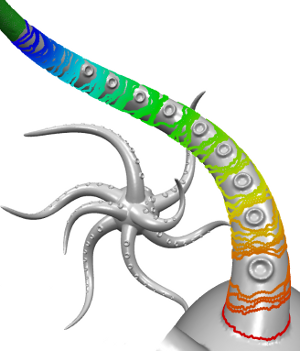

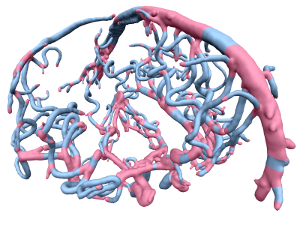

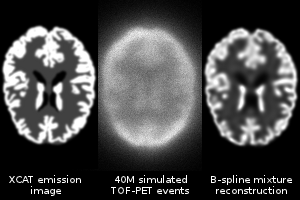

Pseudodynamic analysis of heart tube formation in the mouse reveals strong regional variability and early left–right asymmetry

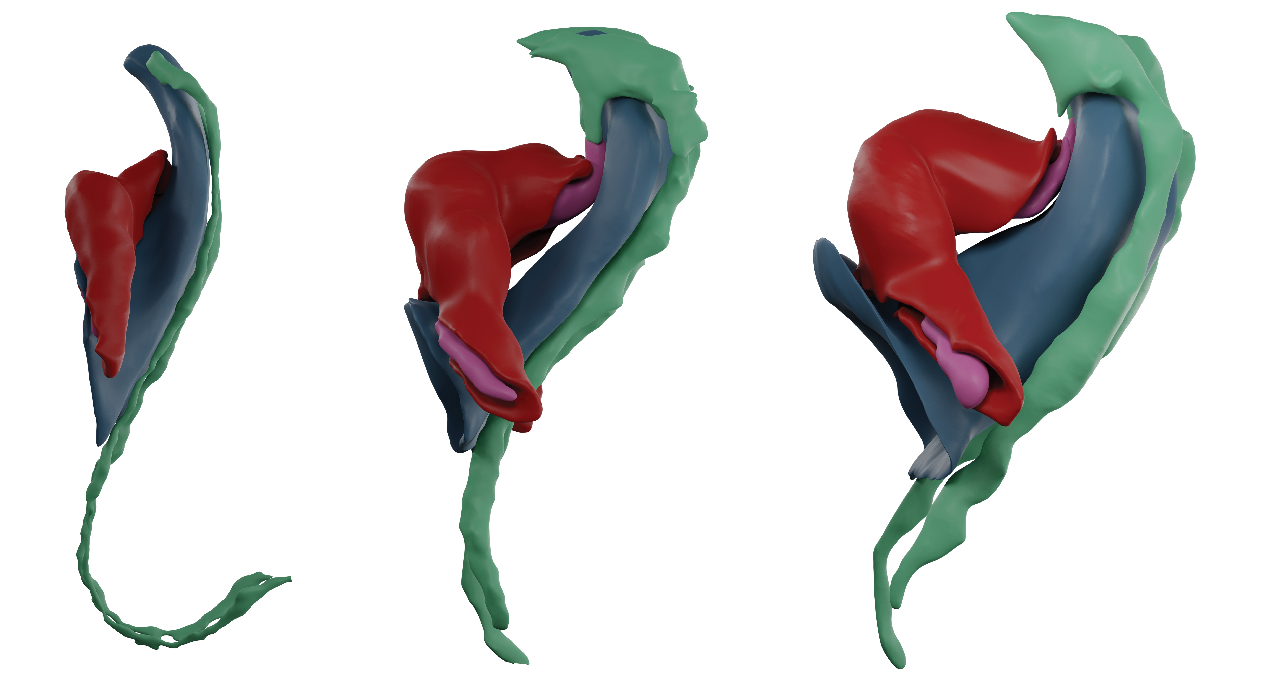

Understanding organ morphogenesis requires a precise geometrical description of the tissues involved in the process. The high morphological variability in mammalian embryos hinders the quantitative analysis of organogenesis. In particular, the study of early heart development in mammals remains a challenging problem due to imaging limitations and complexity. Here, we provide a complete morphological description of mammalian heart tube formation based on detailed imaging of a temporally dense collection of mouse embryonic hearts. We develop strategies for morphometric staging and quantification of local morphological variations between specimens. We identify hot spots of regionalized variability and identify Nodal-controlled left–right asymmetry of the inflow tracts as the earliest signs of organ left–right asymmetry in the mammalian embryo. Finally, we generate a three-dimensional+t digital model that allows co-representation of data from different sources and provides a framework for the computer modeling of heart tube formation.

@article{esteban2022pseudodynamic,

author = {Esteban, Isaac and Schmidt, Patrick and Desgrange, Audrey and Raiola, Morena and Temi{\~n}o, Susana and Meilhac, Sigol\`{e}ne M. and Kobbelt, Leif and Torres, Miguel},

title = {Pseudo-dynamic analysis of heart tube formation in the mouse reveals strong regional variability and early left-right asymmetry},

year = {2022},

journal = {Nature Cardiovascular Research},

volume = 1,

number = 5

}

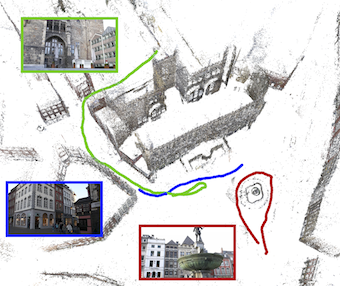

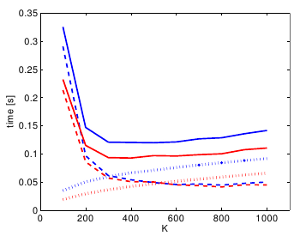

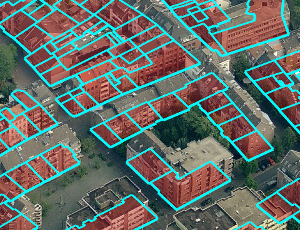

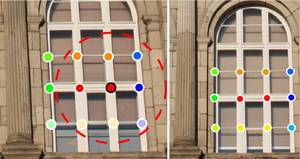

Interactive Segmentation of Textured Point Clouds

We present a method for the interactive segmentation of textured 3D point clouds. The problem is formulated as a minimum graph cut on a k-nearest neighbor graph and leverages the rich information contained in high-resolution photographs as the discriminative feature. We demonstrate that the achievable segmentation accuracy is significantly improved compared to using an average color per point as in prior work. The method is designed to work efficiently on large datasets and yields results at interactive rates. This way, an interactive workflow can be realized in an immersive virtual environment, which supports the segmentation task by improved depth perception and the use of tracked 3D input devices. Our method enables the fast and convenient creation of high-quality segmentations of textured point clouds.

@inproceedings {10.2312:vmv.20221200,

booktitle = {Vision, Modeling, and Visualization},

editor = {Bender, Jan and Botsch, Mario and Keim, Daniel A.},

title = {{Interactive Segmentation of Textured Point Clouds}},

author = {Schmitz, Patric and Suder, Sebastian and Schuster, Kersten and Kobbelt, Leif},

year = {2022},

publisher = {The Eurographics Association},

ISBN = {978-3-03868-189-2},

DOI = {10.2312/vmv.20221200}

}

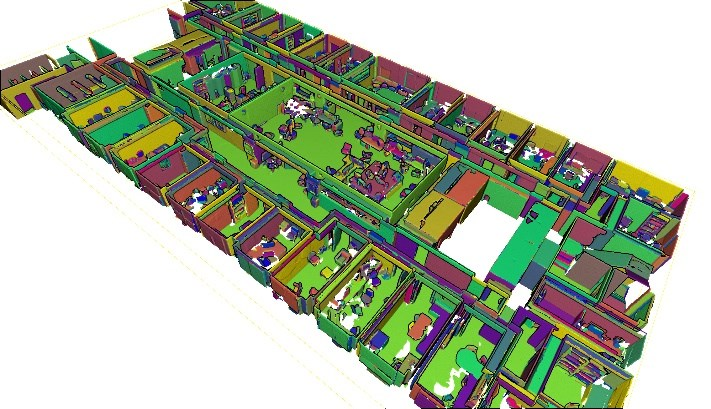

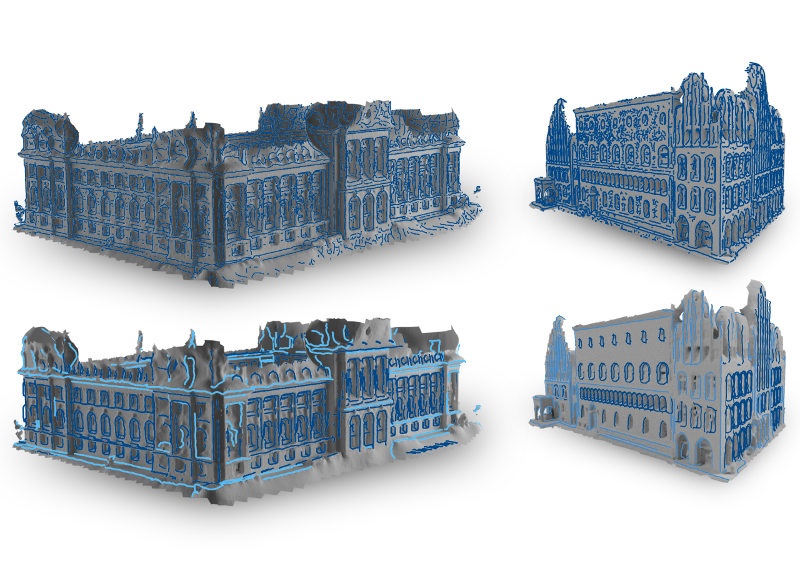

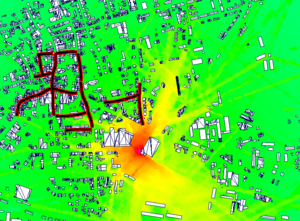

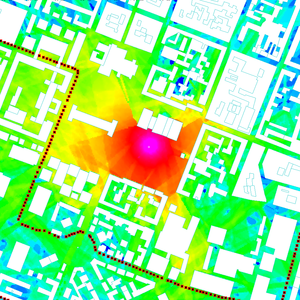

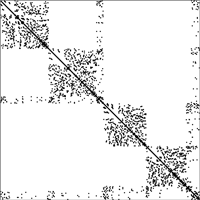

Automatic region-growing system for the segmentation of large point clouds

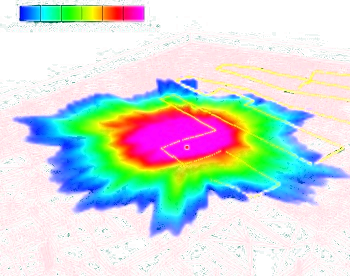

This article describes a complete unsupervised system for the segmentation of massive 3D point clouds. Our system provides the missing components that make it possible to go from 99% automation to 100% automation for the construction industry. It scales up to billions of 3D points and targets a generic low-level grouping of planar regions usable by a wide range of applications. Furthermore, we introduce a hierarchical multi-level segment definition to cope with potential variations in high-level object definitions. The approach first leverages planar predominance in scenes through a normal-based region growing. Then, for usability and simplicity, we designed an automatic heuristic that determines three RANSAC-inspired parameters without user supervision. These are the distance threshold for the region growing, the threshold for the minimum number of points needed to form a valid planar region, and the decision criterion for adding points to a region. Our experiments are conducted on 3D scans of complex buildings to test the robustness of the “one-click” method in varying scenarios. Labelled and instantiated point clouds from different sensors and platforms (depth sensor, terrestrial laser scanner, hand-held laser scanner, mobile mapping system), in different environments (indoor, outdoor, buildings) and with different objects of interest (AEC-related, BIM-related, navigation-related) are provided as a new extensive test-bench. The current implementation processes ten million points per minute in a single-threaded CPU configuration. Moreover, the resulting segments are tested for the high-level task of semantic segmentation over 14 classes, to achieve an F1-score of 90+ averaged over all datasets while reducing the training phase to a fraction of state-of-the-art point-based deep learning methods. We provide this baseline along with six new open-access datasets with 300+ million hand-labelled and instantiated 3D points at: https://www.graphics.rwth-aachen.de/project/45/.
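
A normal-based region growing with the three kinds of parameters mentioned above can be sketched as follows. This is a simplified illustration, not the article's system: the function name `grow_regions` is hypothetical, the parameters (`radius`, `max_angle_deg`, `min_points`) are set by hand rather than by the automatic heuristic, neighbors are found by brute force, and rejected points are not reconsidered for later regions.

```python
import math

UNVISITED, REJECTED = -1, -2


def grow_regions(points, normals, radius, max_angle_deg, min_points):
    """Label points by growing regions of nearby points with similar normals.

    radius        : distance threshold for considering two points neighbors
    max_angle_deg : decision criterion, maximum angle between unit normals
    min_points    : minimum size of a valid region
    Returns one label per point (region index, or REJECTED).
    """
    cos_limit = math.cos(math.radians(max_angle_deg))
    labels = [UNVISITED] * len(points)
    next_label = 0
    for seed in range(len(points)):
        if labels[seed] != UNVISITED:
            continue
        labels[seed] = next_label
        region, stack = [seed], [seed]
        while stack:
            i = stack.pop()
            for j in range(len(points)):
                if labels[j] != UNVISITED:
                    continue
                close = math.dist(points[i], points[j]) <= radius
                dot = sum(a * b for a, b in zip(normals[i], normals[j]))
                if close and abs(dot) >= cos_limit:  # sign of normal ignored
                    labels[j] = next_label
                    region.append(j)
                    stack.append(j)
        if len(region) >= min_points:
            next_label += 1
        else:  # too small to count as a planar region
            for i in region:
                labels[i] = REJECTED
    return labels


# Three floor points, two wall points, and one isolated point.
points = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (2, 0, 1), (2, 0, 2), (10, 10, 10)]
normals = [(0, 0, 1), (0, 0, 1), (0, 0, 1), (1, 0, 0), (1, 0, 0), (0, 0, 1)]
labels = grow_regions(points, normals, radius=1.5, max_angle_deg=10, min_points=2)
```

The brute-force neighbor search is quadratic in the number of points; at the scale described in the abstract a spatial index would be needed instead.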

@article{POUX2022104250,

title = {Automatic region-growing system for the segmentation of large point clouds},

journal = {Automation in Construction},

volume = {138},

pages = {104250},

year = {2022},

issn = {0926-5805},

doi = {https://doi.org/10.1016/j.autcon.2022.104250},

url = {https://www.sciencedirect.com/science/article/pii/S0926580522001236},

author = {F. Poux and C. Mattes and Z. Selman and L. Kobbelt},

keywords = {3D point cloud, Segmentation, Region-growing, RANSAC, Unsupervised clustering}

}

3D Shape Generation with Grid-based Implicit Functions

Previous approaches to generate shapes in a 3D setting train a GAN on the latent space of an autoencoder (AE). Even though this produces convincing results, it has two major shortcomings. As the GAN is limited to reproducing the dataset the AE was trained on, we cannot reuse a trained AE for novel data. Furthermore, it is difficult to add spatial supervision into the generation process, as the AE only gives us a global representation. To remedy these issues, we propose to train the GAN on grids (i.e. each cell covers a part of a shape). In this representation each cell is equipped with a latent vector provided by an AE. This localized representation enables more expressiveness (since the cell-based latent vectors can be combined in novel ways) as well as spatial control of the generation process (e.g. via bounding boxes). Our method outperforms the current state of the art on all established evaluation measures proposed for quantitatively evaluating the generative capabilities of GANs. We show limitations of these measures and propose the adaptation of a robust criterion from statistical analysis as an alternative.

@inproceedings {ibing20213Dshape,

title = {3D Shape Generation with Grid-based Implicit Functions},

author = {Ibing, Moritz and Lim, Isaak and Kobbelt, Leif},

booktitle = {IEEE Computer Vision and Pattern Recognition (CVPR)},

pages = {},

year = {2021}

}

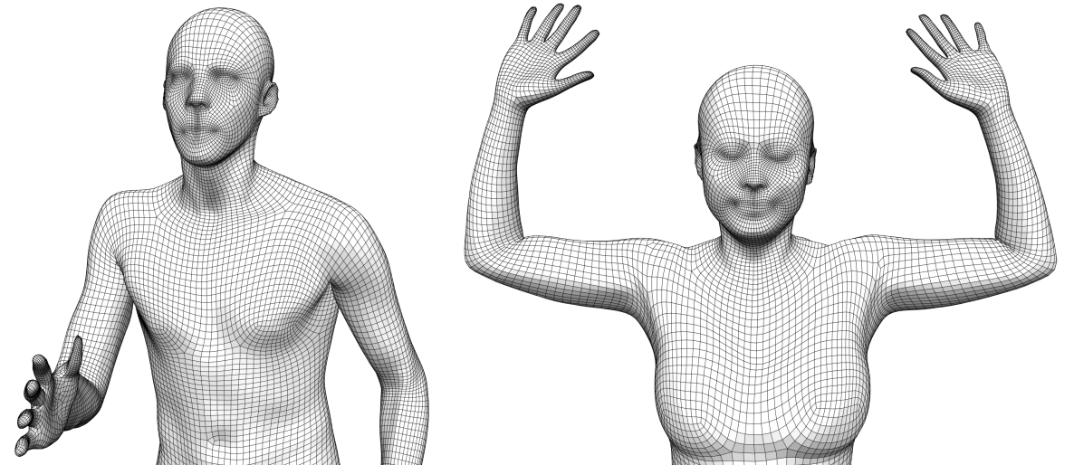

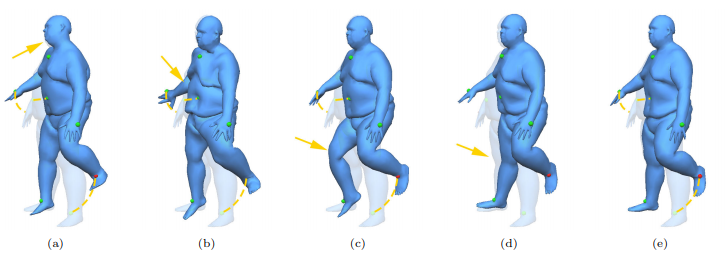

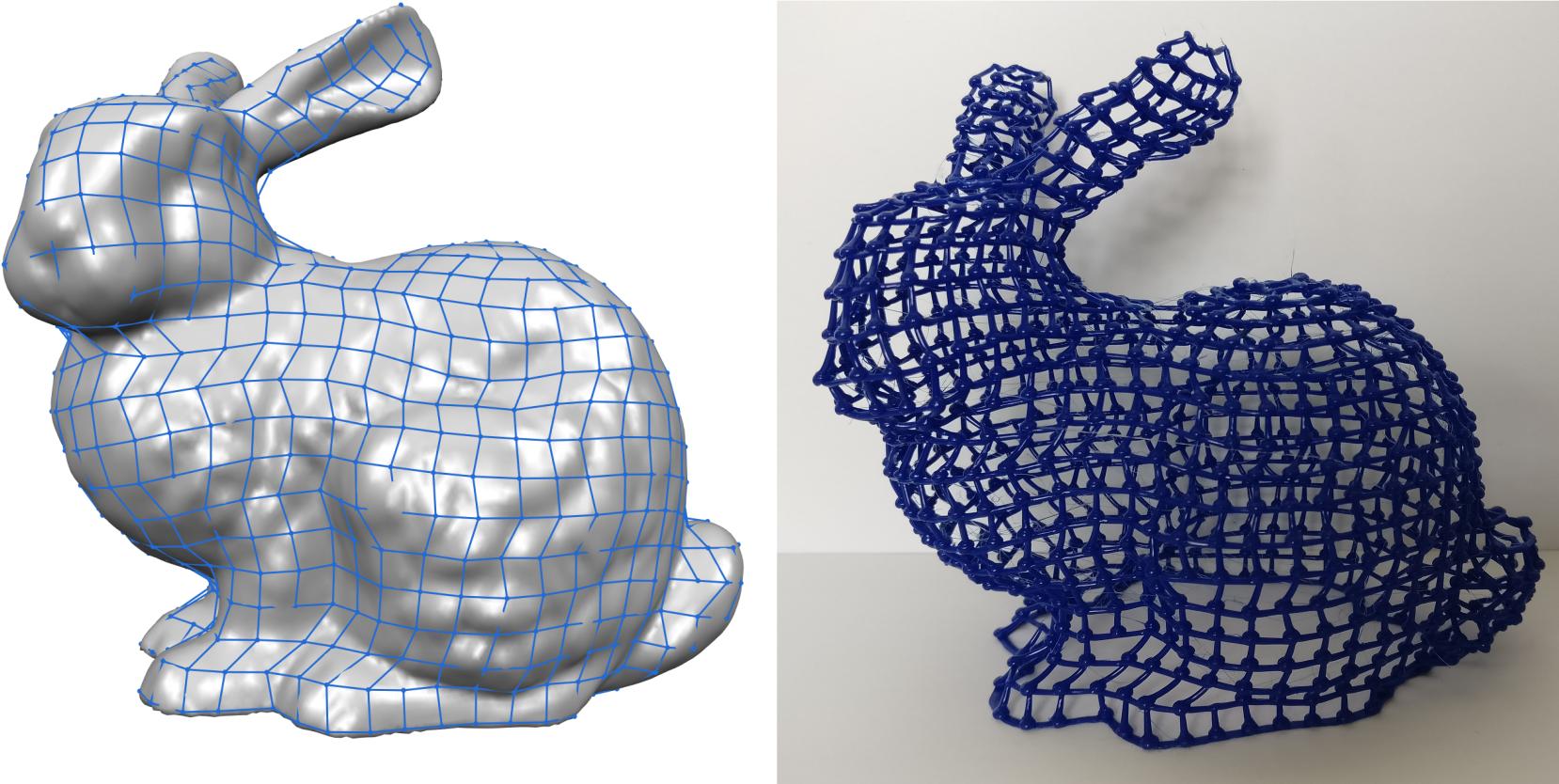

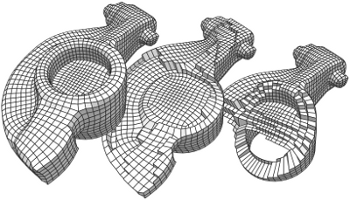

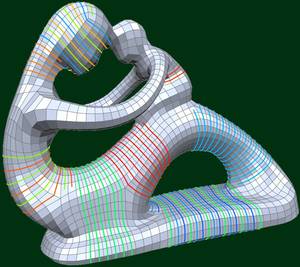

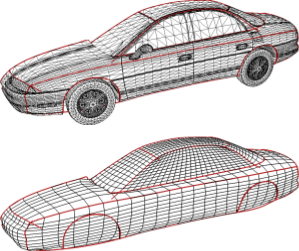

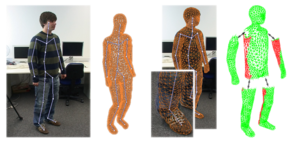

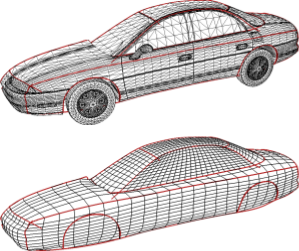

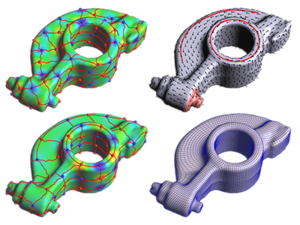

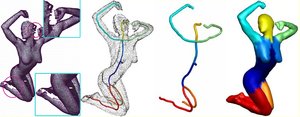

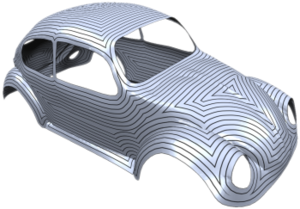

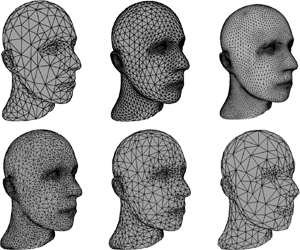

Learning Direction Fields for Quad Mesh Generation

State-of-the-art quadrangulation methods are able to reliably and robustly convert triangle meshes into quad meshes. Most of these methods rely on a dense direction field that is used to align a parametrization from which a quad mesh can be extracted. In this context, the aforementioned direction field is of particular importance, as it plays a key role in determining the structure of the generated quad mesh. If there are no user-provided directions available, the direction field is usually interpolated from a subset of principal curvature directions. To this end, a number of heuristics that aim to identify significant surface regions have been proposed. Unfortunately, the resulting fields often fail to capture the structure found in meshes created by human experts. This is due to the fact that experienced designers can leverage their domain knowledge in order to optimize a mesh for a specific application. In the context of physics simulation, for example, a designer might prefer an alignment and local refinement that facilitates a more accurate numerical simulation. Similarly, a character artist may prefer an alignment that makes the resulting mesh easier to animate. Crucially, this higher level domain knowledge cannot be easily extracted from local curvature information alone. Motivated by this issue, we propose a data-driven approach to the computation of direction fields that allows us to mimic the structure found in existing meshes, which could originate from human experts or other sources. More specifically, we make use of a neural network that aggregates global and local shape information in order to compute a direction field that can be used to guide a parametrization-based quad meshing method. Our approach is a first step towards addressing this challenging problem with a fully automatic learning-based method. We show that, compared to classical techniques, our data-driven approach combined with a robust model-driven method is able to produce results that more closely exhibit the ground truth structure of a synthetic dataset (i.e. a manually designed quad mesh template fitted to a variety of human body types in a set of different poses).

@article{dielen2021learning_direction_fields,

title={Learning Direction Fields for Quad Mesh Generation},

author={Dielen, Alexander and Lim, Isaak and Lyon, Max and Kobbelt, Leif},

year={2021},

journal={Computer Graphics Forum},

volume={40},

number={5},

}

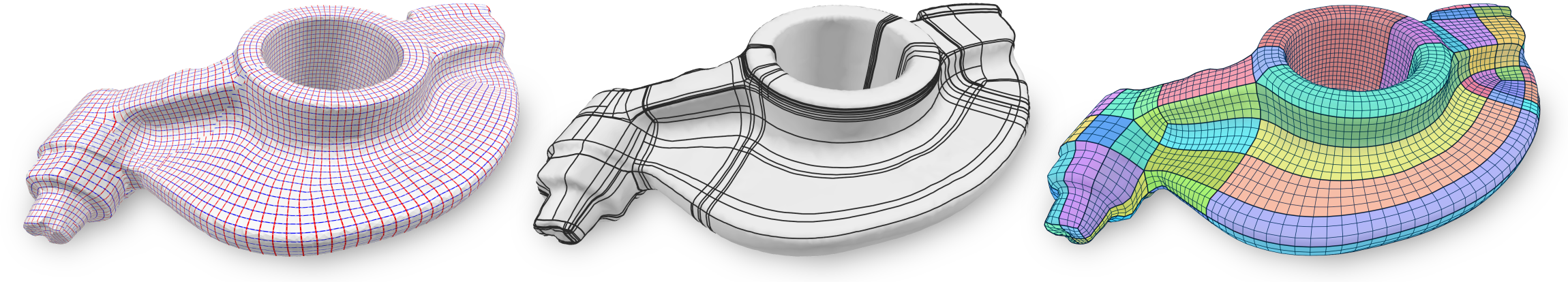

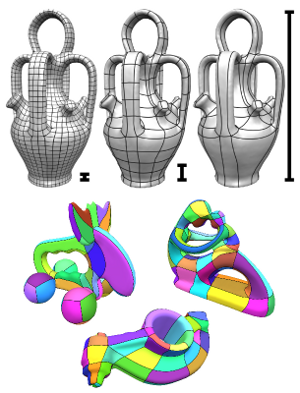

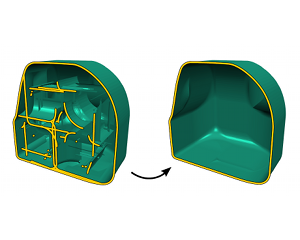

Simpler Quad Layouts using Relaxed Singularities

A common approach to automatic quad layout generation on surfaces is to, in a first stage, decide on the positioning of irregular layout vertices, followed by finding sensible layout edges connecting these vertices and partitioning the surface into quadrilateral patches in a second stage. While this two-step approach reduces the problem's complexity, this separation also limits the result quality. In the worst case, the set of layout vertices fixed in the first stage without consideration of the second may not even permit a valid quad layout. We propose an algorithm for the creation of quad layouts in which the initial layout vertices can be adjusted in the second stage. Whenever beneficial for layout quality or even validity, these vertices may be moved within a prescribed radius or even be removed. Our algorithm is based on a robust quantization strategy, turning a continuous T-mesh structure into a discrete layout. We show the effectiveness of our algorithm on a variety of inputs.

@article{lyon2021simplerlayouts,

title={Simpler Quad Layouts using Relaxed Singularities},

author={Lyon, Max and Campen, Marcel and Kobbelt, Leif},

year={2021},

journal={Computer Graphics Forum},

volume={40},

number={5},

}

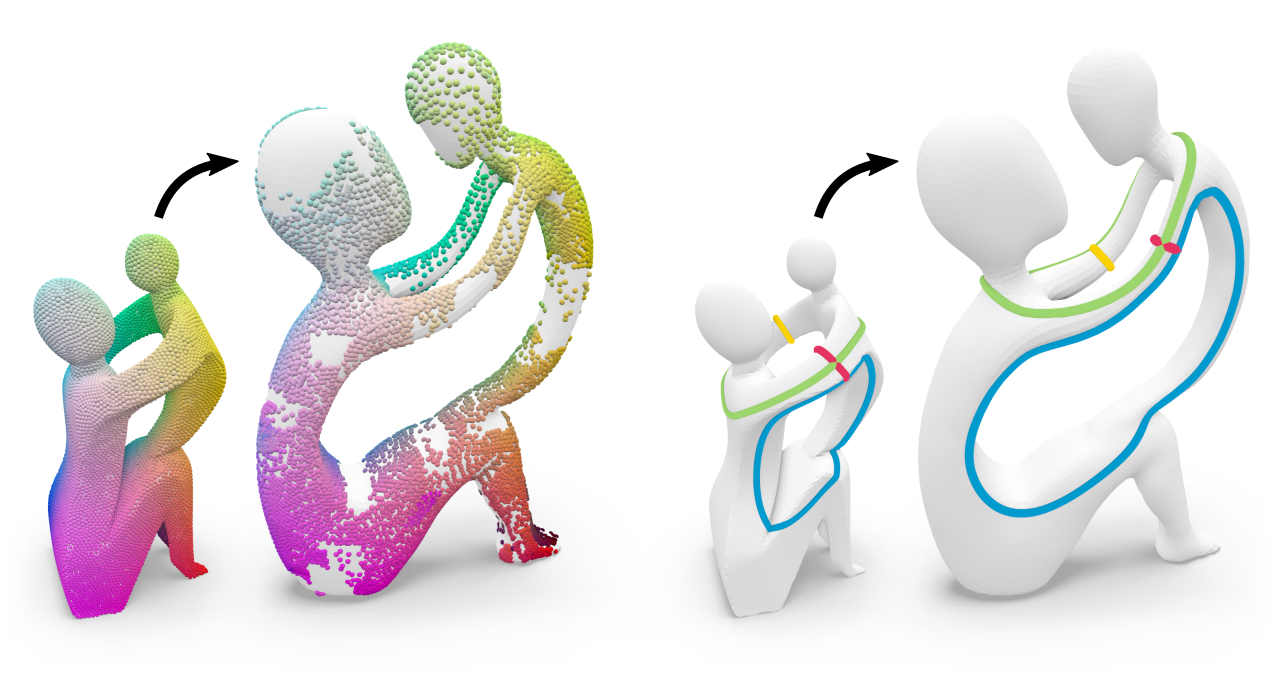

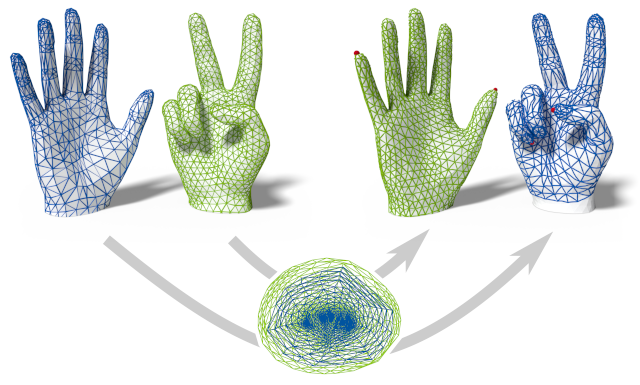

Surface Map Homology Inference

A homeomorphism between two surfaces not only defines a (continuous and bijective) geometric correspondence of points but also (by implication) an identification of topological features, i.e. handles and tunnels, and how the map twists around them. However, in practice, surface maps are often encoded via sparse correspondences or fuzzy representations that merely approximate a homeomorphism and are therefore inherently ambiguous about map topology. In this work, we show a way to infer topological information from an imperfect input map between two shapes. In particular, we compute a homology map, a linear map that transports homology classes of cycles from one surface to the other, subject to a global consistency constraint. Our inference robustly handles imperfect (e.g., partial, sparse, fuzzy, noisy, outlier-ridden, non-injective) input maps and is guaranteed to produce homology maps that are compatible with true homeomorphisms between the input shapes. Homology maps inferred by our method can be directly used to transfer homological information between shapes, or serve as a foundation for the construction of a proper homeomorphism guided by the input map, e.g., via compatible surface decomposition.

- Best Paper Award at SGP 2021

- Graphics Replicability Stamp

@article{born2021surface,

title={Surface Map Homology Inference},

author={Born, Janis and Schmidt, Patrick and Campen, Marcel and Kobbelt, Leif},

year={2021},

journal={Computer Graphics Forum},

volume={40},

number={5},

}

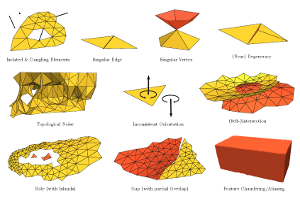

Geodesic Distance Computation via Virtual Source Propagation

We present a highly practical, efficient, and versatile approach for computing approximate geodesic distances. The method is designed to operate on triangle meshes and a set of point sources on the surface. We also show extensions for all kinds of geometric input including inconsistent triangle soups and point clouds, as well as other source types, such as lines. The algorithm is based on the propagation of virtual sources and hence easy to implement. We extensively evaluate our method on about 10000 meshes taken from the Thingi10k and the Tet Meshing in the Wild data sets. Our approach clearly outperforms previous approximate methods in terms of runtime efficiency and accuracy. Through careful implementation and cache optimization, we achieve runtimes comparable to other elementary mesh operations (e.g. smoothing, curvature estimation) such that geodesic distances become a "first-class citizen" in the toolbox of geometric operations. Our method can be parallelized and we observe up to 6× speed-up on the CPU and 20× on the GPU. We present a number of mesh processing tasks easily implemented on the basis of fast geodesic distances. The source code of our method will be provided as a C++ library under the MIT license.

Note: we are currently in the process of cleaning up and documenting the source code. A basic implementation can already be found in the supplemental material.

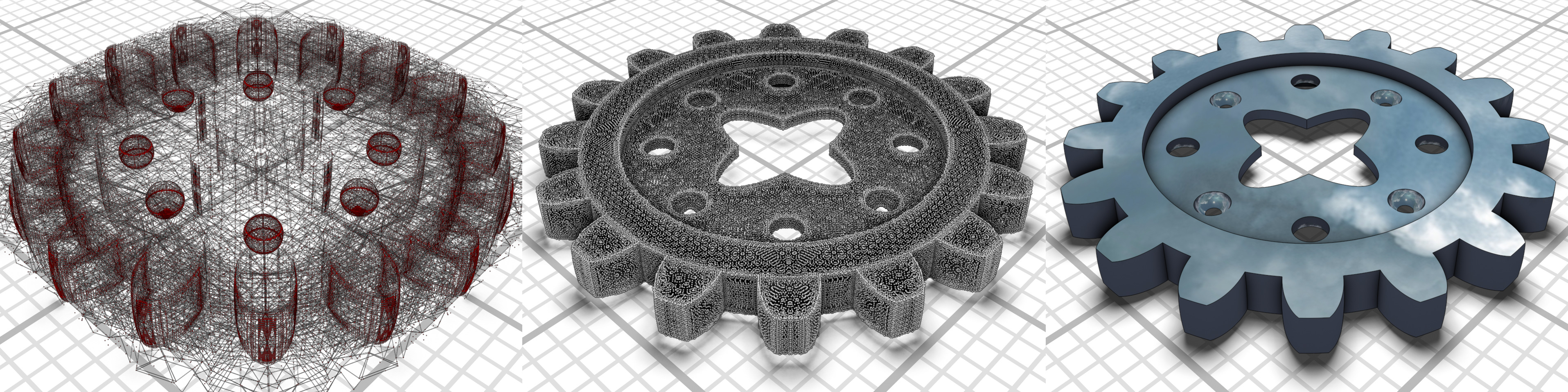

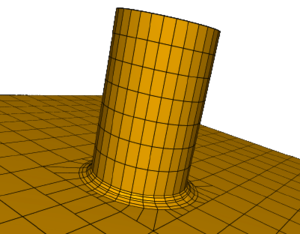

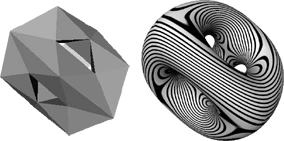

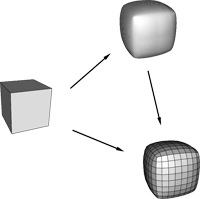

Sampling from Quadric-Based CSG Surfaces

We present an efficient method to create samples directly on surfaces defined by constructive solid geometry (CSG) trees or graphs. The generated samples can be used for visualization or as an approximation to the actual surface with strong guarantees. We chose to use quadric surfaces as CSG primitives as they can model classical primitives such as planes, cubes, spheres, cylinders, and ellipsoids, but also certain saddle surfaces. More importantly, they are closed under affine transformations, a desirable property for a modeling system. We also propose a rendering method that performs local quadric ray-tracing and clipping to achieve pixel-perfect accuracy and hole-free rendering.

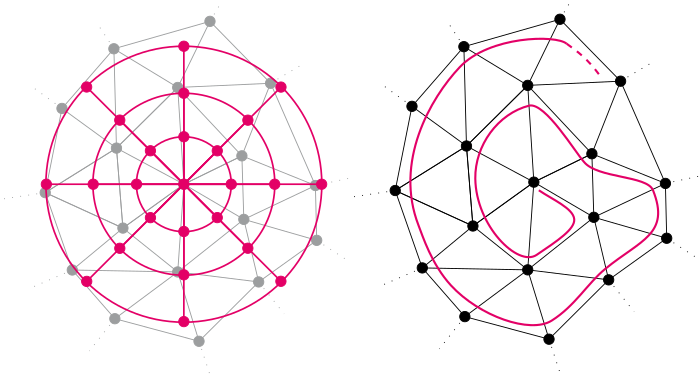

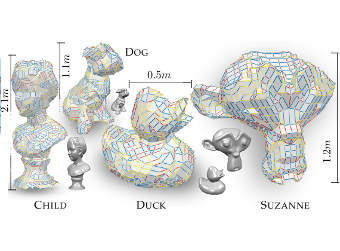

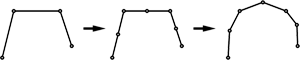

Layout Embedding via Combinatorial Optimization

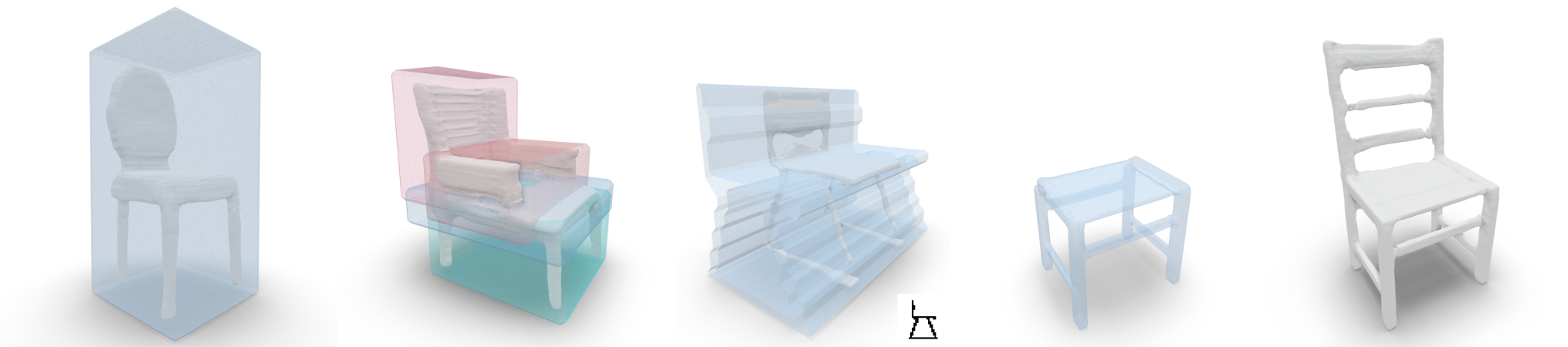

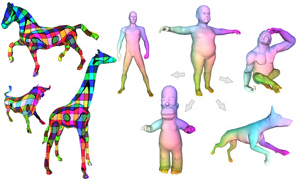

We consider the problem of injectively embedding a given graph connectivity (a layout) into a target surface. Starting from prescribed positions of layout vertices, the task is to embed all layout edges as intersection-free paths on the surface. Besides merely geometric choices (the shape of paths) this problem is especially challenging due to its topological degrees of freedom (how to route paths around layout vertices). The problem is typically addressed through a sequence of shortest path insertions, ordered by a greedy heuristic. Such insertion sequences are not guaranteed to be optimal: Early path insertions can potentially force later paths into unexpected homotopy classes. We show how common greedy methods can easily produce embeddings of dramatically bad quality, rendering such methods unsuitable for automatic processing pipelines. Instead, we strive to find the optimal order of insertions, i.e. the one that minimizes the total path length of the embedding. We demonstrate that, despite the vast combinatorial solution space, this problem can be effectively solved on simply-connected domains via a custom-tailored branch-and-bound strategy. This enables directly using the resulting embeddings in downstream applications which cannot recover from initializations in a wrong homotopy class. We demonstrate the robustness of our method on a shape dataset by embedding a common template layout per category, and show applications in quad meshing and inter-surface mapping.

@article{born2021layout,

title={Layout Embedding via Combinatorial Optimization},

author={Born, Janis and Schmidt, Patrick and Kobbelt, Leif},

year={2021},

journal={Computer Graphics Forum},

volume={40},

number={2},

}

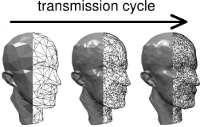

Compression and Rendering of Textured Point Clouds via Sparse Coding

Splat-based rendering techniques produce highly realistic renderings from 3D scan data without prior mesh generation. Mapping high-resolution photographs to the splat primitives enables detailed reproduction of surface appearance. However, in many cases these massive datasets do not fit into GPU memory. In this paper, we present a compression and rendering method that is designed for large textured point cloud datasets. Our goal is to achieve compression ratios that outperform generic texture compression algorithms, while still retaining the ability to efficiently render without prior decompression. To achieve this, we resample the input textures by projecting them onto the splats and create a fixed-size representation that can be approximated by a sparse dictionary coding scheme. Each splat has a variable number of codeword indices and associated weights, which define the final texture as a linear combination during rendering. For further reduction of the memory footprint, we compress geometric attributes by careful clustering and quantization of local neighborhoods. Our approach reduces the memory requirements of textured point clouds by one order of magnitude, while retaining the possibility to efficiently render the compressed data.
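As a rough illustration of the decoding step, the texture of one splat can be reconstructed as a weighted sum of dictionary atoms. The data layout below (flat lists of texels, a tiny three-atom dictionary) is an assumption for the example and does not reflect the paper's actual storage format.

```python
def decode_splat_texture(dictionary, indices, weights):
    """Reconstruct a fixed-size splat texture from a sparse code.

    dictionary : list of atoms, each a flat list of texel values
    indices    : codeword indices stored for this splat
    weights    : one weight per stored index
    """
    texel_count = len(dictionary[0])
    texture = [0.0] * texel_count
    for index, weight in zip(indices, weights):
        atom = dictionary[index]
        for t in range(texel_count):
            texture[t] += weight * atom[t]
    return texture

# Hypothetical dictionary with three atoms of four texels each.
dictionary = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]
texture = decode_splat_texture(dictionary, indices=[0, 2], weights=[0.5, 0.25])
```

Because the number of indices per splat is variable, smooth splats can be stored with fewer codewords than detailed ones.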

Quad Layouts via Constrained T-Mesh Quantization

We present a robust and fast method for the creation of conforming quad layouts on surfaces. Our algorithm is based on the quantization of a T-mesh, i.e. an assignment of integer lengths to the sides of a non-conforming rectangular partition of the surface. This representation has the benefit of being able to encode an infinite number of layout connectivity options in a finite manner, which guarantees that a valid layout can always be found. We carefully construct the T-mesh from a given seamless parametrization such that the algorithm can provide guarantees on the results' quality. In particular, the user can specify a bound on the angular deviation of layout edges from prescribed directions. We solve an integer linear program (ILP) to find a coarse quad layout adhering to that maximal deviation. Our algorithm is guaranteed to yield a conforming quad layout free of T-junctions together with bounded angle distortion. Our results show that the presented method is fast, reliable, and achieves high quality layouts.

@article{Lyon:2021:Quad,

title = {Quad Layouts via Constrained T-Mesh Quantization},

author = {Lyon, Max and Campen, Marcel and Kobbelt, Leif},

journal = {Computer Graphics Forum},

volume = {40},

number = {2},

year = {2021}

}

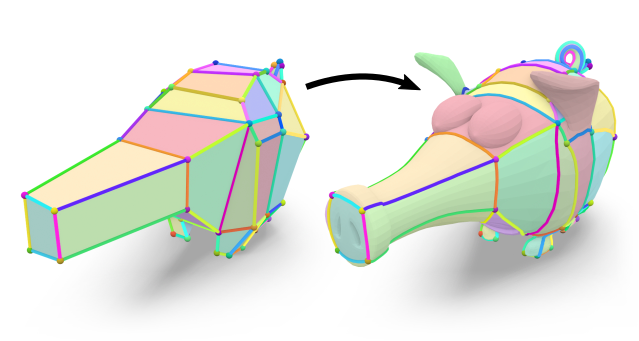

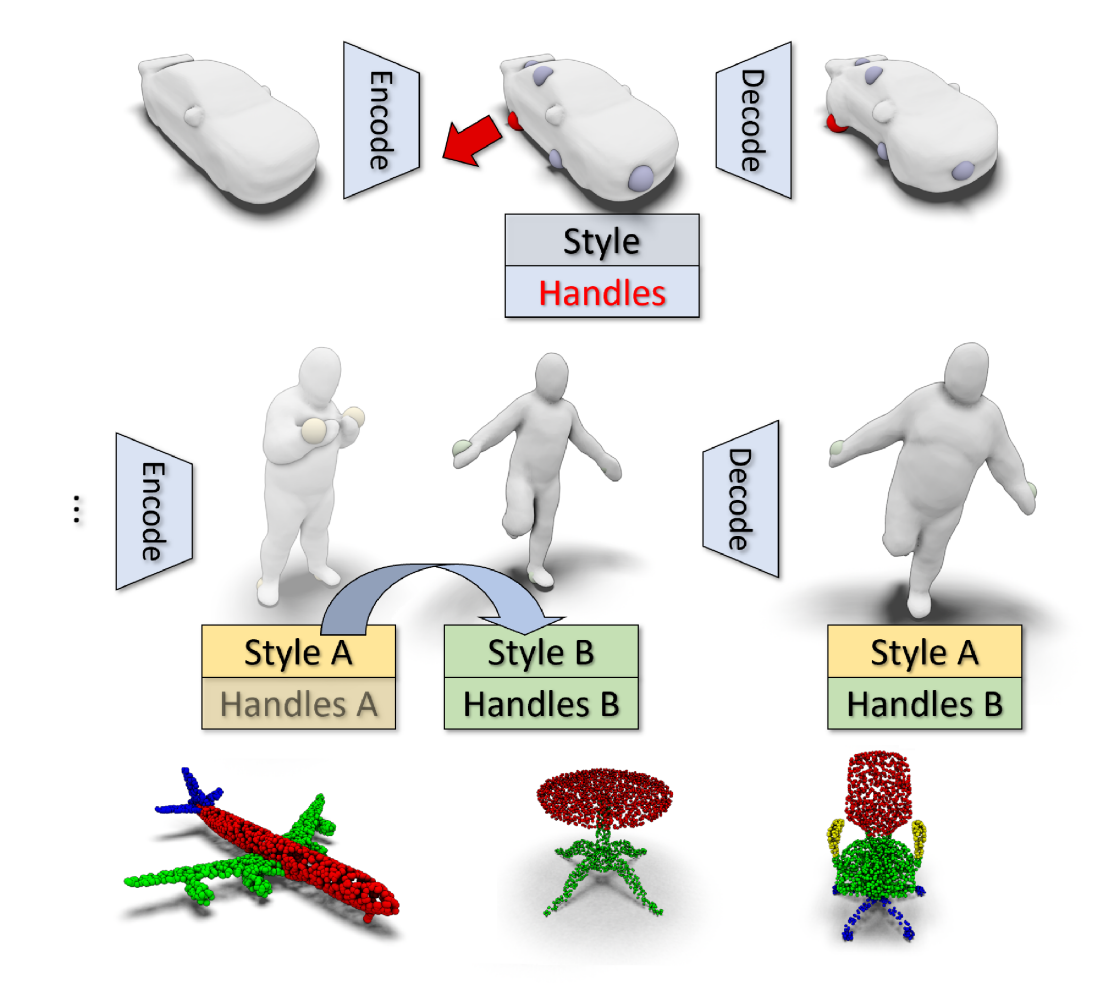

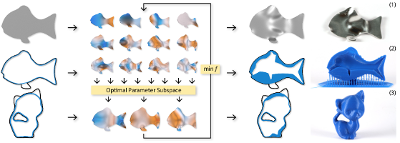

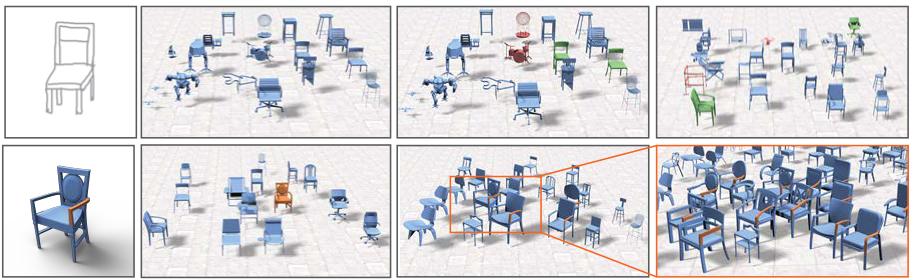

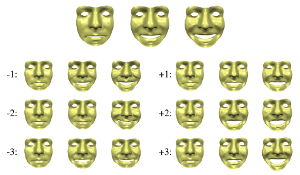

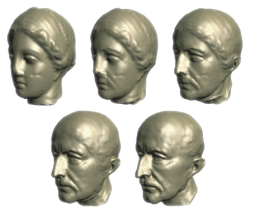

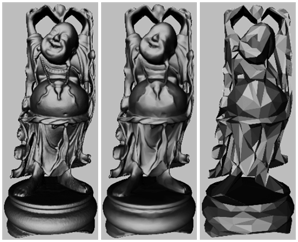

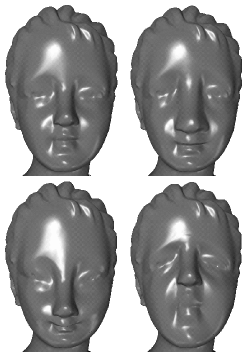

Intuitive Shape Editing in Latent Space

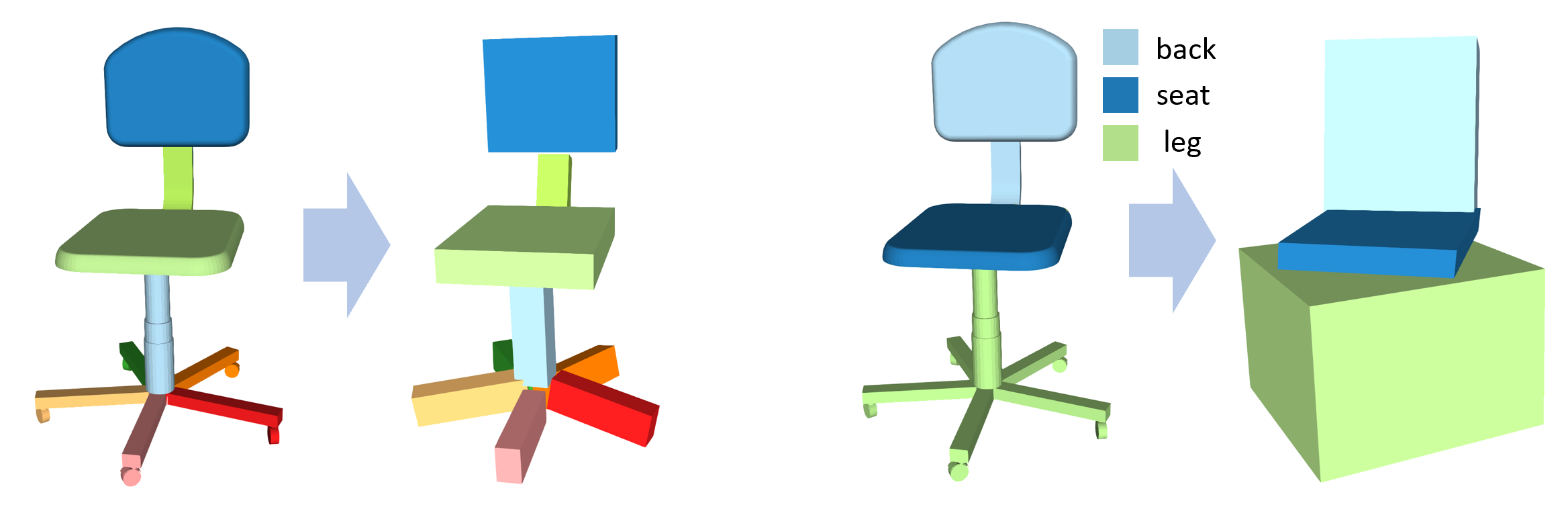

The use of autoencoders for shape editing or generation through latent space manipulation suffers from unpredictable changes in the output shape. Our autoencoder-based method enables intuitive shape editing in latent space by disentangling latent sub-spaces into style variables and control points on the surface that can be manipulated independently. The key idea is adding a Lipschitz-type constraint to the loss function, i.e. bounding the change of the output shape proportionally to the change in latent space, leading to interpretable latent space representations. The control points on the surface that are part of the latent code of an object can then be freely moved, allowing for intuitive shape editing directly in latent space. We evaluate our method by comparing to state-of-the-art data-driven shape editing methods. We further demonstrate the expressiveness of our learned latent space by leveraging it for unsupervised part segmentation.
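A Lipschitz-type constraint of this kind can be written as a penalty that is zero whenever the output changes by at most a constant times the latent change. The sketch below is one plausible formulation under that assumption; the exact loss term used in the paper may differ, and `decoder` and `bound` are placeholder names.

```python
import math

def lipschitz_penalty(decoder, z_a, z_b, bound):
    """Penalty for violating ||D(z_a) - D(z_b)|| <= bound * ||z_a - z_b||."""
    shape_change = math.dist(decoder(z_a), decoder(z_b))
    latent_change = math.dist(z_a, z_b)
    return max(0.0, shape_change - bound * latent_change)

def toy_decoder(z):
    # Stand-in for a trained decoder: scales the latent code by 3.
    return [3.0 * value for value in z]

violated = lipschitz_penalty(toy_decoder, [0.0, 0.0], [1.0, 0.0], bound=2.0)
satisfied = lipschitz_penalty(toy_decoder, [0.0, 0.0], [1.0, 0.0], bound=4.0)
```

In training, such a term would be averaged over sampled pairs of latent codes and added to the reconstruction loss.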

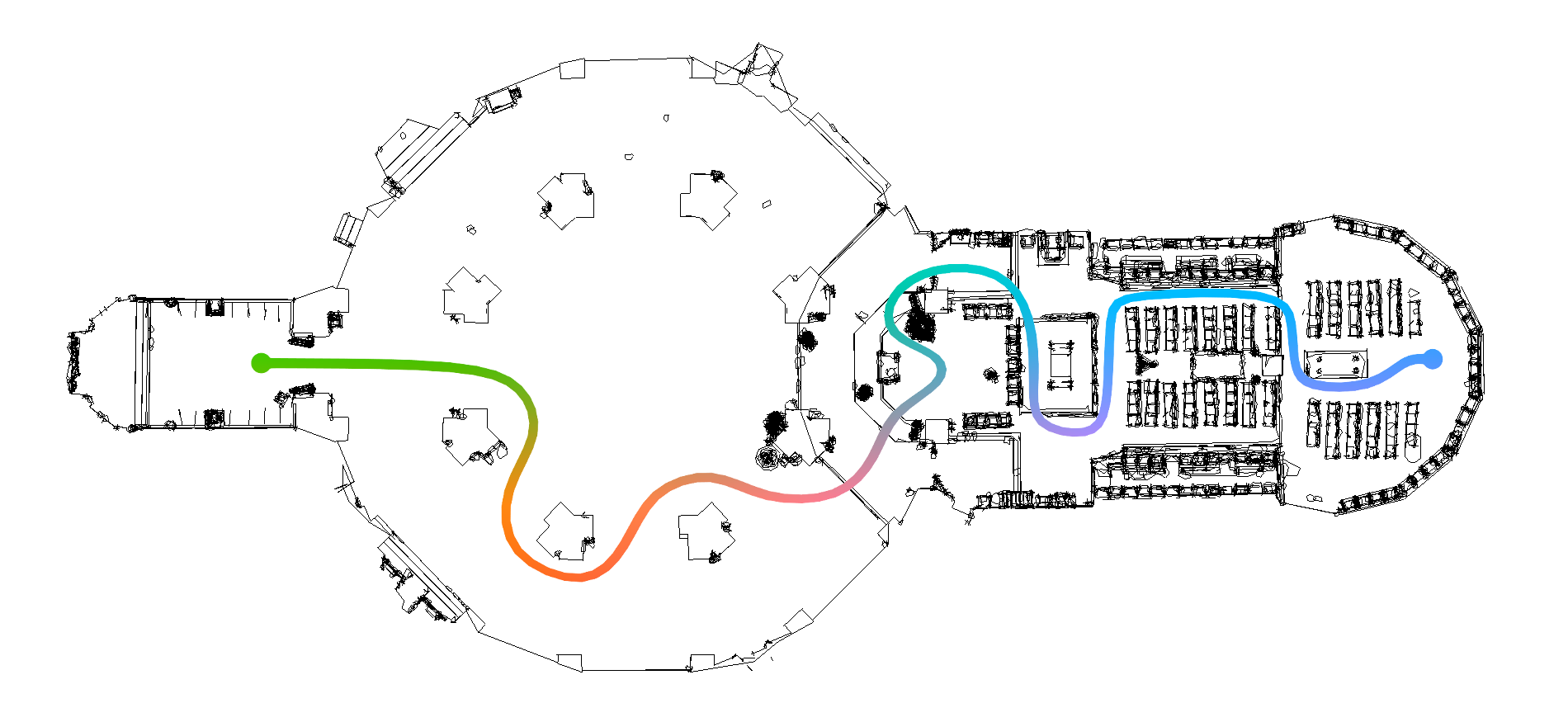

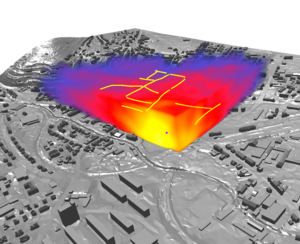

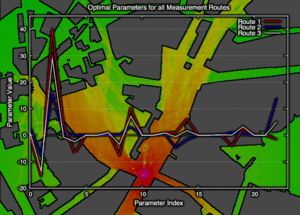

Highly accurate digital traffic recording as a basis for future mobility research: Methods and concepts of the research project HDV-Mess

The research project HDV-Mess aims at a currently missing, but very crucial component for addressing important challenges in the field of connected and automated driving on public roads. The goal is to record traffic events at various relevant locations with high accuracy and to collect real traffic data as a basis for the development and validation of current and future sensor technologies as well as automated driving functions. For this purpose, it is necessary to develop a concept for a mobile modular system of measuring stations for highly accurate traffic data acquisition, which enables a temporary installation of a sensor and communication infrastructure at different locations. Within this paper, we first discuss the project goals before we present our traffic detection concept using mobile modular intelligent transport systems stations (ITS-Ss). We then explain the approaches for data processing of sensor raw data to refined trajectories, data communication, and data validation.

@article{DBLP:journals/corr/abs-2106-04175,

author = {Laurent Kloeker and

Fabian Thomsen and

Lutz Eckstein and

Philip Trettner and

Tim Elsner and

Julius Nehring{-}Wirxel and

Kersten Schuster and

Leif Kobbelt and

Michael Hoesch},

title = {Highly accurate digital traffic recording as a basis for future mobility

research: Methods and concepts of the research project HDV-Mess},

journal = {CoRR},

volume = {abs/2106.04175},

year = {2021},

url = {https://arxiv.org/abs/2106.04175},

eprinttype = {arXiv},

eprint = {2106.04175},

timestamp = {Fri, 11 Jun 2021 11:04:16 +0200},

biburl = {https://dblp.org/rec/journals/corr/abs-2106-04175.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}

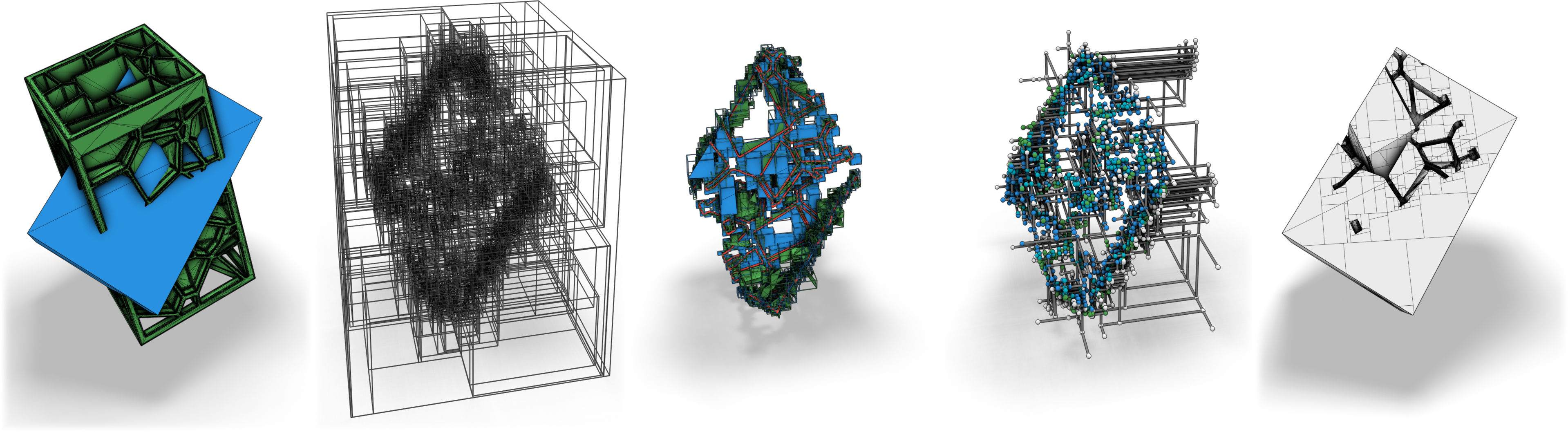

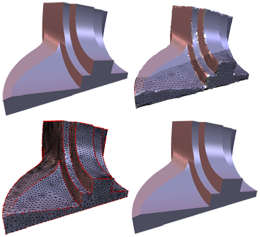

Fast Exact Booleans for Iterated CSG using Octree-Embedded BSPs

We present octree-embedded BSPs, a volumetric mesh data structure suited for performing a sequence of Boolean operations (iterated CSG) efficiently. At its core, our data structure leverages a plane-based geometry representation and integer arithmetics to guarantee unconditionally robust operations. These typically present considerable performance challenges which we overcome by using custom-tailored fixed-precision operations and an efficient algorithm for cutting a convex mesh against a plane. Consequently, BSP Booleans and mesh extraction are formulated in terms of mesh cutting. The octree is used as a global acceleration structure to keep modifications local and bound the BSP complexity. With our optimizations, we can perform up to 2.5 million mesh-plane cuts per second on a single core, which creates roughly 40-50 million output BSP nodes for CSG. We demonstrate our system in two iterated CSG settings: sweep volumes and a milling simulation.
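To illustrate why a plane-based representation with integer arithmetic is robust, the sketch below classifies a vertex, given implicitly as the intersection of three planes, against a fourth plane using only integer determinants. It relies on Python's arbitrary-precision integers instead of the custom fixed-precision operations described in the abstract, and the function names are illustrative.

```python
def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def classify_vertex(p1, p2, p3, q):
    """Sign of plane q at the intersection point of planes p1, p2, p3.

    Planes are integer tuples (a, b, c, d) meaning a*x + b*y + c*z + d = 0.
    Returns -1, 0, or +1 without ever computing the point's coordinates.
    """
    planes = (p1, p2, p3)
    normals = [list(p[:3]) for p in planes]
    denom = det3(normals)
    if denom == 0:
        raise ValueError("planes do not meet in a single point")
    # Cramer's rule: coordinate k of the point is det3(m_k) / denom,
    # so q(point) * denom equals the integer expression below.
    numer = q[3] * denom
    for axis in range(3):
        m = [row[:] for row in normals]
        for r in range(3):
            m[r][axis] = -planes[r][3]
        numer += q[axis] * det3(m)
    sign = (numer > 0) - (numer < 0)
    return sign if denom > 0 else -sign

# The planes x = 1, y = 2, z = 3 meet in the point (1, 2, 3).
PX, PY, PZ = (1, 0, 0, -1), (0, 1, 0, -2), (0, 0, 1, -3)
```

Since no division or rounding occurs, the result is exact for any integer input, which is the property that makes such predicates unconditionally robust.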

@article{NEHRINGWIRXEL2021103015,

title = {Fast Exact Booleans for Iterated CSG using Octree-Embedded BSPs},

journal = {Computer-Aided Design},

volume = {135},

pages = {103015},

year = {2021},

issn = {0010-4485},

doi = {10.1016/j.cad.2021.103015},

url = {https://www.sciencedirect.com/science/article/pii/S0010448521000269},

author = {Julius Nehring-Wirxel and Philip Trettner and Leif Kobbelt},

keywords = {Plane-based geometry, CSG, Mesh Booleans, BSP, Octree, Integer arithmetic},

}

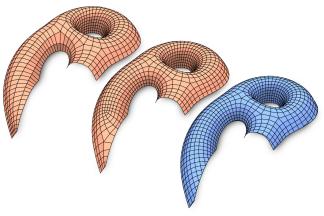

Inter-Surface Maps via Constant-Curvature Metrics

We propose a novel approach to represent maps between two discrete surfaces of the same genus and to minimize intrinsic mapping distortion. Our maps are well-defined at every surface point and are guaranteed to be continuous bijections (surface homeomorphisms). As a key feature of our approach, only the images of vertices need to be represented explicitly, since the images of all other points (on edges or in faces) are properly defined implicitly. This definition is via unique geodesics in metrics of constant Gaussian curvature. Our method is built upon the fact that such metrics exist on surfaces of arbitrary topology, without the need for any cuts or cones (as asserted by the uniformization theorem). Depending on the surfaces' genus, these metrics exhibit one of the three classical geometries: Euclidean, spherical or hyperbolic. Our formulation handles constructions in all three geometries in a unified way. In addition, by considering not only the vertex images but also the discrete metric as degrees of freedom, our formulation enables us to simultaneously optimize the images of these vertices and images of all other points.
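The relation between genus and geometry follows from the Euler characteristic of a closed orientable surface, V - E + F = 2 - 2g. A minimal sketch, with illustrative function names, shows how the applicable constant-curvature geometry can be read off a mesh's element counts:

```python
def genus_from_mesh(vertices, edges, faces):
    """Genus of a closed orientable surface mesh via V - E + F = 2 - 2g."""
    euler = vertices - edges + faces
    if euler > 2 or euler % 2 != 0:
        raise ValueError("not a closed orientable connected surface")
    return (2 - euler) // 2

def geometry_for_genus(genus):
    """Constant-curvature geometry given by the uniformization theorem."""
    if genus == 0:
        return "spherical"   # positive curvature
    if genus == 1:
        return "Euclidean"   # zero curvature
    return "hyperbolic"      # negative curvature

tetrahedron = geometry_for_genus(genus_from_mesh(4, 6, 4))
# A 3x3 grid torus with every quad split into two triangles.
torus = geometry_for_genus(genus_from_mesh(9, 27, 18))
```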

@article{schmidt2020intersurface,

author = {Schmidt, Patrick and Campen, Marcel and Born, Janis and Kobbelt, Leif},

title = {Inter-Surface Maps via Constant-Curvature Metrics},

journal = {ACM Transactions on Graphics},

issue_date = {July 2020},

volume = {39},

number = {4},

month = jul,

year = {2020},

articleno = {119},

url = {https://doi.org/10.1145/3386569.3392399},

doi = {10.1145/3386569.3392399},

publisher = {ACM},

address = {New York, NY, USA},

}

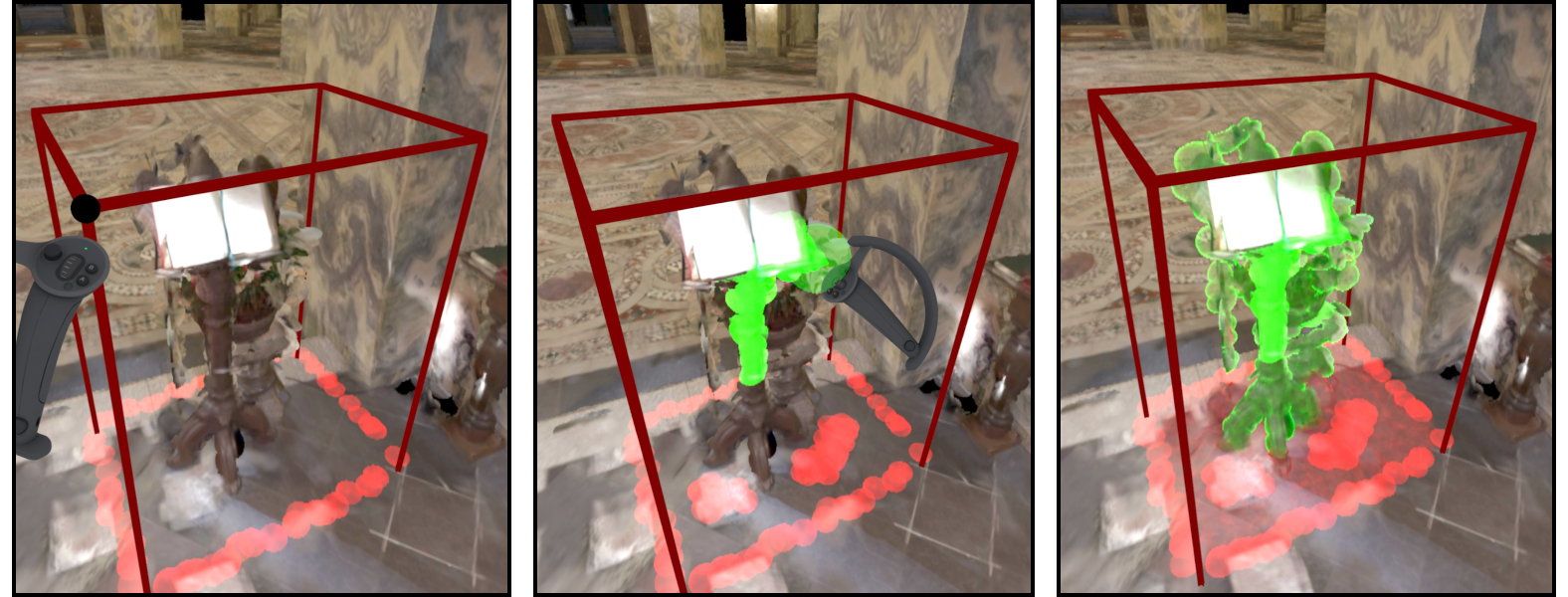

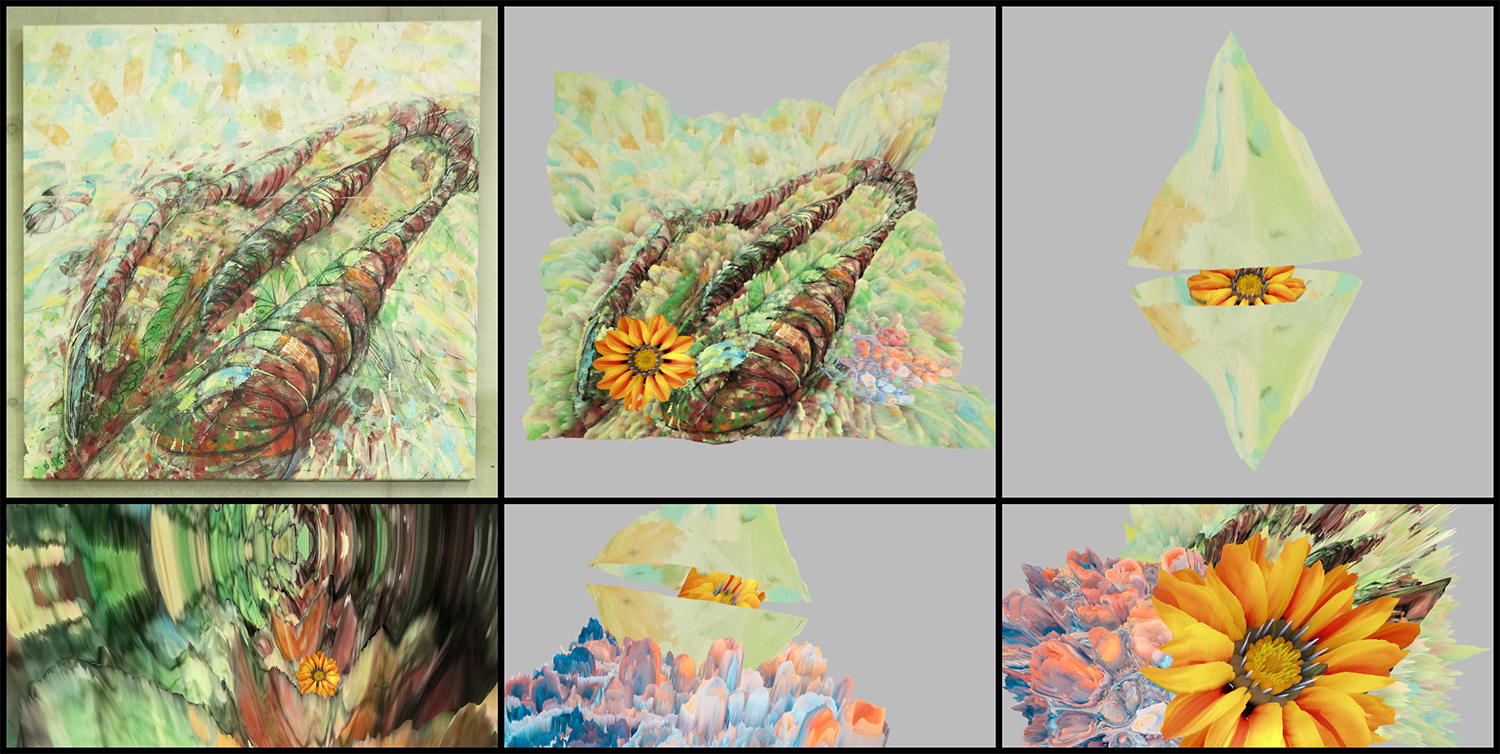

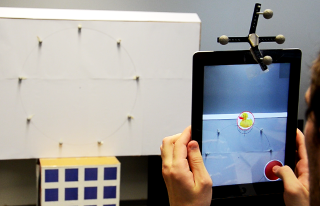

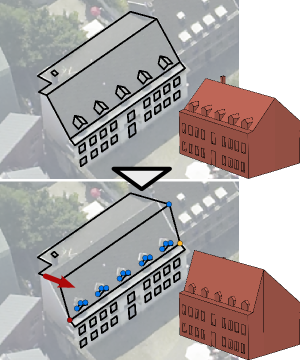

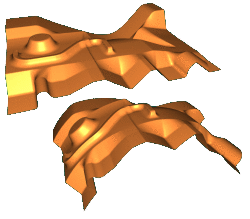

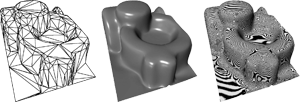

Rilievo: Artistic Scene Authoring via Interactive Height Map Extrusion in VR

The authors present a virtual authoring environment for artistic creation in VR. It enables the effortless conversion of 2D images into volumetric 3D objects. Artistic elements in the input material are extracted with a convenient VR-based segmentation tool. Relief sculpting is then performed by interactively mixing different height maps. These are automatically generated from the input image structure and appearance. A prototype of the tool is showcased in an analog-virtual artistic workflow in collaboration with a traditional painter. It combines the expressiveness of analog painting and sculpting with the creative freedom of spatial arrangement in VR.

@article{eroglu2020rilievo,

title={Rilievo: Artistic Scene Authoring via Interactive Height Map Extrusion in VR},

author={Eroglu, Sevinc and Schmitz, Patric and Martinez, Carlos Aguilera and Rusch, Jana and Kobbelt, Leif and Kuhlen, Torsten W},

journal={Leonardo},

volume={53},

number={4},

pages={438--441},

year={2020},

publisher={MIT Press}

}

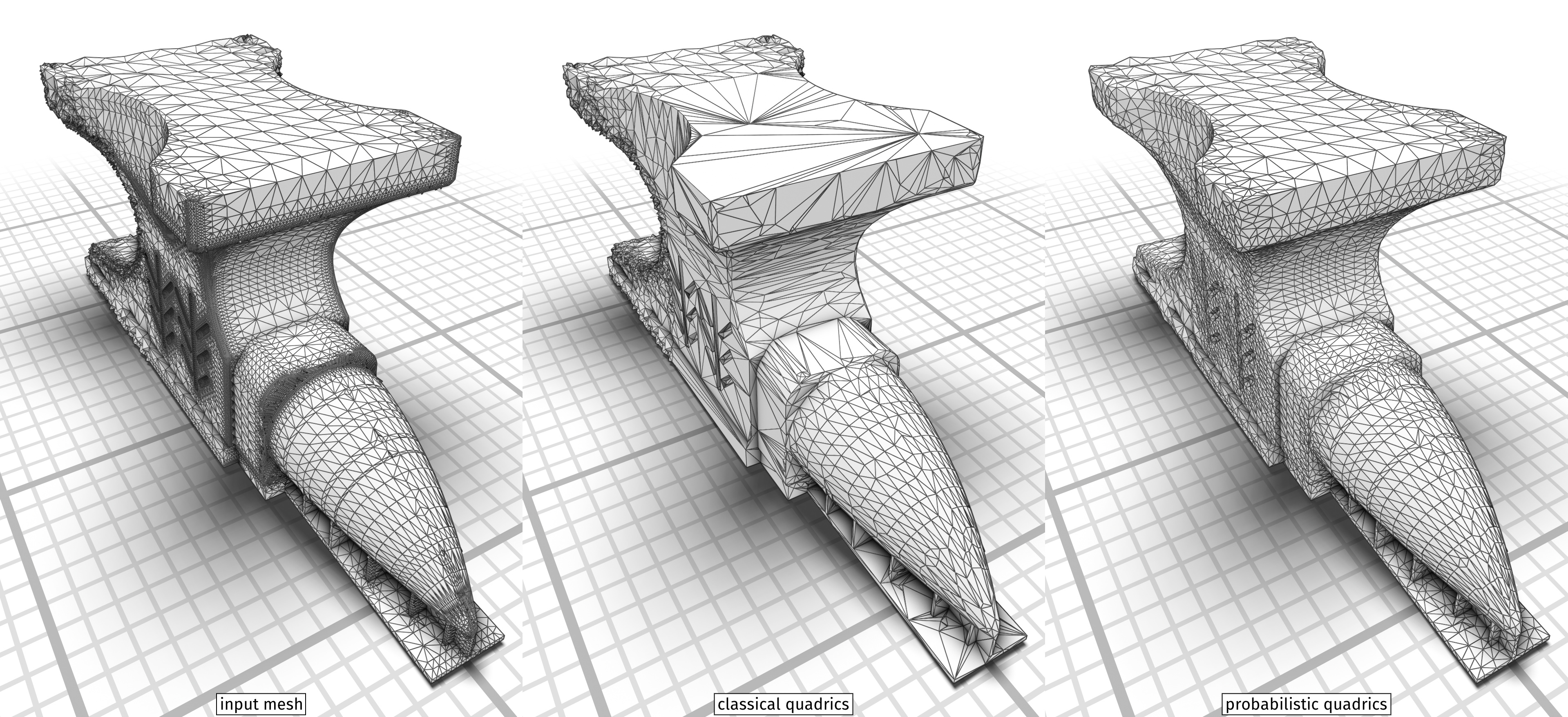

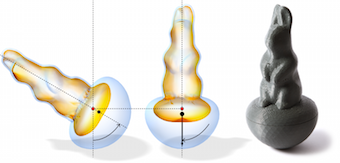

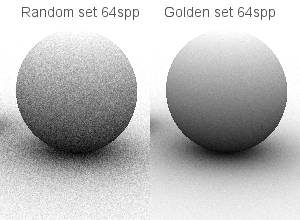

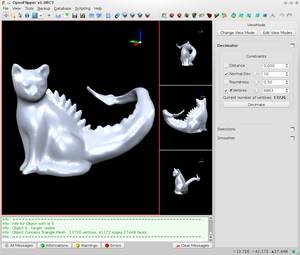

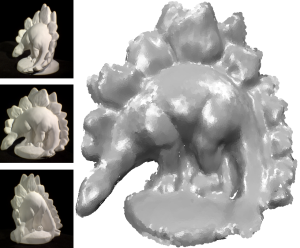

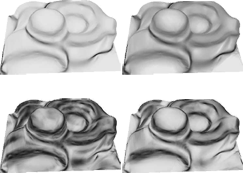

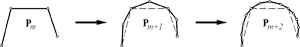

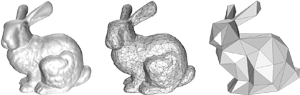

Fast and Robust QEF Minimization using Probabilistic Quadrics

Error quadrics are a fundamental and powerful building block in many geometry processing algorithms. However, finding the minimizer of a given quadric is in many cases not robust and requires a singular value decomposition or some ad-hoc regularization. While classical error quadrics measure the squared deviation from a set of ground truth planes or polygons, we treat the input data as genuinely uncertain information and embed error quadrics in a probabilistic setting ("probabilistic quadrics") where the optimal point minimizes the expected squared error. We derive closed-form solutions for the popular plane and triangle quadrics subject to (spatially varying, anisotropic) Gaussian noise. Probabilistic quadrics can be minimized robustly by solving a simple linear system, which is 50x faster than SVD. We show that probabilistic quadrics have superior properties in tasks like decimation and isosurface extraction since they favor more uniform triangulations and are more tolerant to noise while still maintaining feature sensitivity. A broad spectrum of applications can directly benefit from our new quadrics as a drop-in replacement, which we demonstrate with mesh smoothing via filtered quadrics and non-linear subdivision surfaces.
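For intuition, consider the simplest special case: a plane whose position and normal carry independent isotropic Gaussian noise with standard deviations sigma_p and sigma_n. Taking the expectation of the squared plane distance gives E(x) = x^T A x - 2 b^T x + c with A = n n^T + sigma_n^2 I and b = (n . p) n + sigma_n^2 p. The sketch below implements only this isotropic case (the anisotropic and triangle variants from the abstract are not covered), and all names are illustrative.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def det3(m):
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def plane_quadric(position, normal, sigma_p, sigma_n):
    """Expected squared plane distance as (A, b, c), isotropic noise only."""
    d = dot(normal, position)
    s_p, s_n = sigma_p ** 2, sigma_n ** 2
    A = [[normal[i] * normal[j] + (s_n if i == j else 0.0) for j in range(3)]
         for i in range(3)]
    b = [d * normal[i] + s_n * position[i] for i in range(3)]
    c = (d * d + s_n * dot(position, position)
         + s_p * dot(normal, normal) + 3.0 * s_p * s_n)
    return A, b, c

def minimizer(A, b):
    """Solve the 3x3 system A x = b with Cramer's rule."""
    det = det3(A)
    solution = []
    for axis in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][axis] = b[r]
        solution.append(det3(m) / det)
    return solution

# A single plane z = 0 sampled at (1, 2, 0): the classical quadric n n^T
# is singular here, while the probabilistic one is not.
A, b, c = plane_quadric([1.0, 2.0, 0.0], [0.0, 0.0, 1.0], sigma_p=0.05, sigma_n=0.1)
x_opt = minimizer(A, b)
```

With sigma_n > 0 the matrix A is positive definite, so the linear system always has a unique solution; for the single plane above the minimizer falls back to the sample position.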

@article {10.1111:cgf.13933,

journal = {Computer Graphics Forum},

title = {{Fast and Robust QEF Minimization using Probabilistic Quadrics}},

author = {Trettner, Philip and Kobbelt, Leif},

year = {2020},

publisher = {The Eurographics Association and John Wiley & Sons Ltd.},

ISSN = {1467-8659},

DOI = {10.1111/cgf.13933}

}

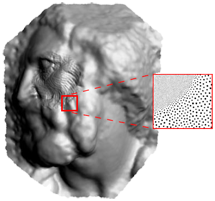

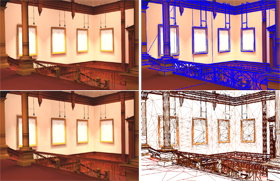

High-Fidelity Point-Based Rendering of Large-Scale 3D Scan Datasets

Digitalization of 3D objects and scenes using modern depth sensors and high-resolution RGB cameras enables the preservation of human cultural artifacts at an unprecedented level of detail. Interactive visualization of these large datasets, however, is challenging without degradation in visual fidelity. A common solution is to fit the dataset into available video memory by downsampling and compression. The achievable reproduction accuracy is thereby limited for interactive scenarios, such as immersive exploration in Virtual Reality (VR). This degradation in visual realism ultimately hinders the effective communication of human cultural knowledge. This article presents a method to render 3D scan datasets with minimal loss of visual fidelity. A point-based rendering approach visualizes scan data as a dense splat cloud. For improved surface approximation of thin and sparsely sampled objects, we propose oriented 3D ellipsoids as rendering primitives. To render massive texture datasets, we present a virtual texturing system that dynamically loads required image data. It is paired with a single-pass page prediction method that minimizes visible texturing artifacts. Our system renders a challenging dataset in the order of 70 million points and a texture size of 1.2 terabytes consistently at 90 frames per second in stereoscopic VR.

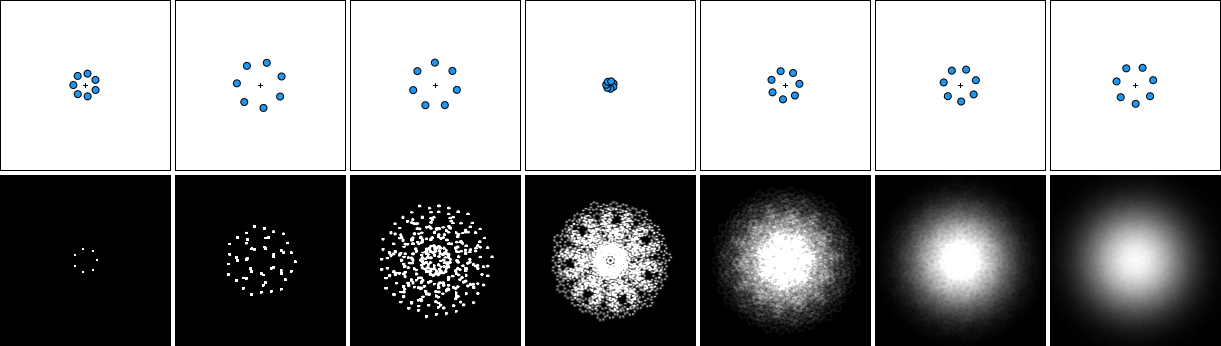

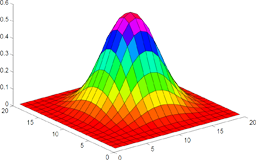

High-Performance Image Filters via Sparse Approximations

We present a numerical optimization method to find highly efficient (sparse) approximations for convolutional image filters. Using a modified parallel tempering approach, we solve a constrained optimization that maximizes approximation quality while strictly staying within a user-prescribed performance budget. The results are multi-pass filters where each pass computes a weighted sum of bilinearly interpolated sparse image samples, exploiting hardware acceleration on the GPU. We systematically decompose the target filter into a series of sparse convolutions, trying to find good trade-offs between approximation quality and performance. Since our sparse filters are linear and translation-invariant, they do not exhibit the aliasing and temporal coherence issues that often appear in filters working on image pyramids. We show several applications, ranging from simple Gaussian or box blurs to the emulation of sophisticated Bokeh effects with user-provided masks. Our filters achieve high performance as well as high quality, often providing significant speed-up at acceptable quality even for separable filters. The optimized filters can be baked into shaders and used as a drop-in replacement for filtering tasks in image processing or rendering pipelines.
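The benefit of bilinearly interpolated samples can be seen in one dimension: two adjacent taps with same-sign weights can be folded into a single interpolated fetch at a fractional position. This is the well-known linear-sampling trick, shown here only as background; it is not the paper's optimization procedure, and the function names are illustrative.

```python
import math

def sample_linear(signal, position):
    """Linearly interpolate a 1D signal, as texture hardware does per axis."""
    left = int(math.floor(position))
    frac = position - left
    return (1.0 - frac) * signal[left] + frac * signal[left + 1]

def merge_adjacent_taps(index, weight_a, weight_b):
    """Fold taps at index and index + 1 into one (position, weight) fetch.

    Assumes both weights have the same sign and a nonzero sum, so the
    fractional position stays between the two texels.
    """
    weight = weight_a + weight_b
    return index + weight_b / weight, weight

signal = [0.0, 1.0, 4.0, 9.0, 16.0, 25.0]

# Two explicit texel reads ...
two_fetches = 0.375 * signal[3] + 0.125 * signal[4]

# ... give the same result as one interpolated read.
position, weight = merge_adjacent_taps(3, 0.375, 0.125)
one_fetch = weight * sample_linear(signal, position)
```

A sparse filter built from such fetches therefore covers more texels per sample than its tap count suggests.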

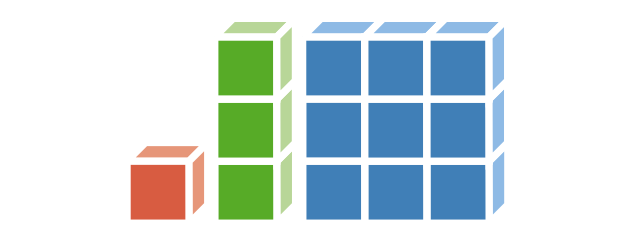

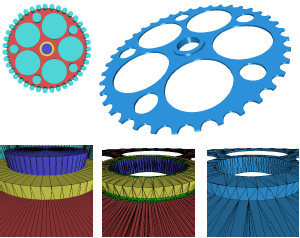

Cost Minimizing Local Anisotropic Quad Mesh Refinement

Quad meshes as a surface representation have many conceptual advantages over triangle meshes. Their edges can naturally be aligned to principal curvatures of the underlying surface and they have the flexibility to create strongly anisotropic cells without causing excessively small inner angles. While in recent years a lot of progress has been made towards generating high-quality uniform quad meshes for arbitrary shapes, their adaptive and anisotropic refinement remains difficult since a single edge split might propagate across the entire surface in order to maintain consistency. In this paper we present a novel refinement technique which finds the optimal trade-off between the number of resulting elements and inserted singularities according to a user-prescribed weighting. Our algorithm takes as input a quad mesh with those edges tagged that are prescribed to be refined. It then formulates a binary optimization problem that minimizes the number of additional edges which need to be split in order to maintain consistency. Valence 3 and 5 singularities have to be introduced in the transition region between refined and unrefined regions of the mesh. The optimization hence computes the optimal trade-off and places singularities strategically in order to minimize the number of consistency splits, or avoids singularities where this causes only a small number of additional splits. When applying the refinement scheme iteratively, we extend our binary optimization formulation such that previous splits can be undone if this prevents degenerate cells with small inner angles that otherwise might occur in anisotropic regions or in the vicinity of singularities. We demonstrate on a number of challenging examples that the algorithm performs well in practice.

@article{Lyon:2020:Cost,

title = {Cost Minimizing Local Anisotropic Quad Mesh Refinement},

author = {Lyon, Max and Bommes, David and Kobbelt, Leif},

journal = {Computer Graphics Forum},

volume = {39},

number = {5},

year = {2020},

doi = {10.1111/cgf.14076}

}

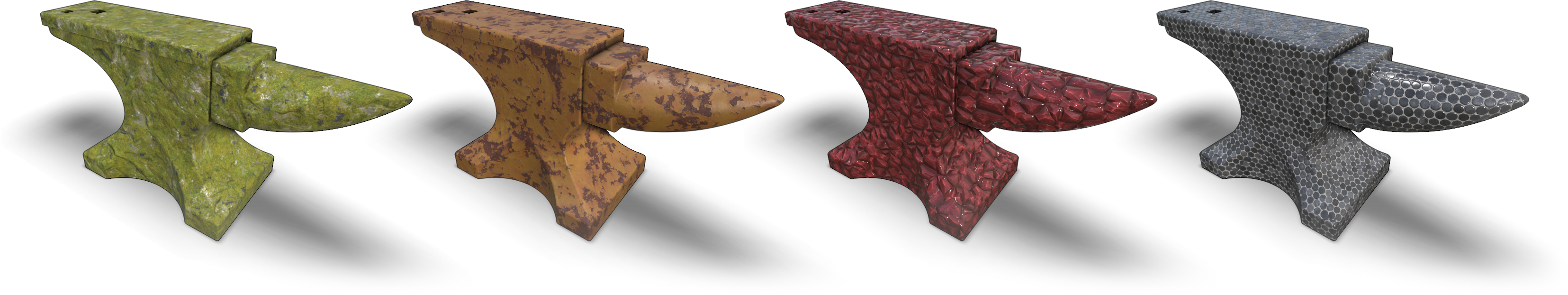

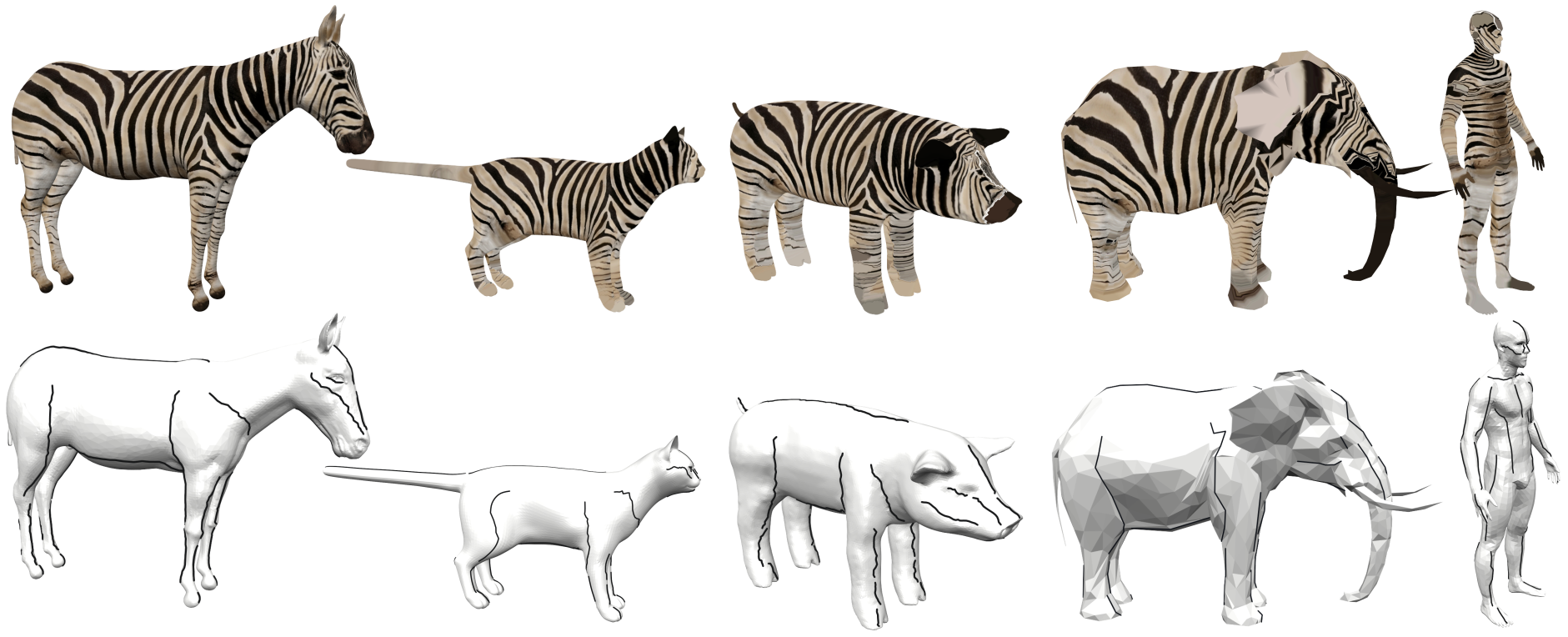

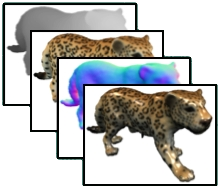

A Three-Level Approach to Texture Mapping and Synthesis on 3D Surfaces

We present a method for example-based texturing of triangular 3D meshes. Our algorithm maps a small 2D texture sample onto objects of arbitrary size in a seamless fashion, with no visible repetitions and low overall distortion. It requires minimal user interaction and can be applied to complex, multi-layered input materials that are not required to be tileable. Our framework integrates a patch-based approach with per-pixel compositing. To minimize visual artifacts, we run a three-level optimization that starts with a rigid alignment of texture patches (macro scale), then continues with non-rigid adjustments (meso scale) and finally performs pixel-level texture blending (micro scale). We demonstrate that the relevance of the three levels depends on the texture content and type (stochastic, structured, or anisotropic textures).

@article{schuster2020,

author = {Schuster, Kersten and Trettner, Philip and Schmitz, Patric and Kobbelt, Leif},

title = {A Three-Level Approach to Texture Mapping and Synthesis on 3D Surfaces},

year = {2020},

issue_date = {Apr 2020},

publisher = {Association for Computing Machinery},

address = {USA},

volume = {3},

number = {1},

url = {https://doi.org/10.1145/3384542},

doi = {10.1145/3384542},

journal = {Proc. ACM Comput. Graph. Interact. Tech.},

month = apr,

articleno = {1},

numpages = {19},

keywords = {material blending, surface texture synthesis, texture mapping}

}

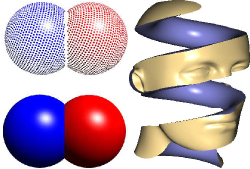

PRS-Net: Planar Reflective Symmetry Detection Net for 3D Models

In geometry processing, symmetry is a universal type of high-level structural information of 3D models and benefits many geometry processing tasks including shape segmentation, alignment, matching, and completion. Thus it is an important problem to analyze various symmetry forms of 3D shapes. Planar reflective symmetry is the most fundamental one. Traditional methods based on spatial sampling can be time-consuming and may not be able to identify all the symmetry planes. In this paper, we present a novel learning framework to automatically discover global planar reflective symmetry of a 3D shape. Our framework trains an unsupervised 3D convolutional neural network to extract global model features and then outputs possible global symmetry parameters, where input shapes are represented using voxels. We introduce a dedicated symmetry distance loss along with a regularization loss to avoid generating duplicated symmetry planes. Our network can also identify generalized cylinders by predicting their rotation axes. We further provide a method to remove invalid and duplicated planes and axes. We demonstrate that our method is able to produce reliable and accurate results. Our neural network based method is hundreds of times faster than the state-of-the-art methods, which are based on sampling. Our method is also robust even with noisy or incomplete input surfaces.
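The notion of a symmetry distance can be made concrete with a brute-force sketch on a point set: reflect every point across a candidate plane and measure how far the reflection lands from the shape. The paper works on voxelized inputs inside a network loss; the point-based nearest-neighbor search and the function names below are simplifying assumptions.

```python
import math

def reflect(point, normal, offset):
    """Reflect a point across the plane normal . x + offset = 0."""
    scale = 2.0 * (sum(n * x for n, x in zip(normal, point)) + offset)
    scale /= sum(n * n for n in normal)
    return [x - scale * n for x, n in zip(point, normal)]

def symmetry_distance(points, normal, offset):
    """Mean distance from each reflected point to its nearest shape point."""
    total = 0.0
    for point in points:
        mirrored = reflect(point, normal, offset)
        total += min(math.dist(mirrored, other) for other in points)
    return total / len(points)

# Point set that is symmetric about the plane x = 0 but not about y = 0.
points = [
    [1.0, 0.0, 0.0], [-1.0, 0.0, 0.0],
    [0.0, 2.0, 0.0], [0.0, -2.0, 0.0],
    [0.5, 1.0, 0.0], [-0.5, 1.0, 0.0],
]
good_plane = symmetry_distance(points, normal=[1.0, 0.0, 0.0], offset=0.0)
bad_plane = symmetry_distance(points, normal=[0.0, 1.0, 0.0], offset=0.0)
```

A plane with a distance near zero is a plausible symmetry plane, which is why such a quantity can serve as a training signal without labels.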

@article{abs-1910-06511,

author = {Lin Gao and

Ling{-}Xiao Zhang and

Hsien{-}Yu Meng and

Yi{-}Hui Ren and

Yu{-}Kun Lai and

Leif Kobbelt},

title = {PRS-Net: Planar Reflective Symmetry Detection Net for 3D Models},

journal = {CoRR},

volume = {abs/1910.06511},

year = {2019},

url = {http://arxiv.org/abs/1910.06511},

archivePrefix = {arXiv},

eprint = {1910.06511},

}

Unsupervised Segmentation of Indoor 3D Point Cloud: Application to Object-based Classification

Point cloud data of indoor scenes is primarily composed of planar-dominant elements. Automatic shape segmentation is thus valuable to avoid labour-intensive labelling. This paper provides a fully unsupervised region-growing segmentation approach for efficient clustering of massive 3D point clouds. Our contribution targets a low-level grouping beneficial to object-based classification. We argue that the use of relevant segments for object-based classification has the potential to improve recognition accuracy and computing time and to lower the manual labelling time needed. However, fully unsupervised approaches are rare due to a lack of proper generalisation of user-defined parameters. We propose a self-learning heuristic process to define optimal parameters, and we validate our method on a large and richly annotated dataset (S3DIS), yielding an 88.1% average F1-score for object-based classification. It makes it possible to automatically segment indoor point clouds with no prior knowledge at commercially viable performance and is the foundation for efficient indoor 3D modelling in cluttered point clouds.

@Article{poux2020b,

author = {Poux, F. and Mattes, C. and Kobbelt, L.},

title = {Unsupervised Segmentation of Indoor 3D Point Cloud: Application to Object-based Classification},

journal = {ISPRS - International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences},

volume = {XLIV-4/W1-2020},

year = {2020},

pages = {111--118},

url = {https://www.int-arch-photogramm-remote-sens-spatial-inf-sci.net/XLIV-4-W1-2020/111/2020/},

doi = {10.5194/isprs-archives-XLIV-4-W1-2020-111-2020}

}

Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism

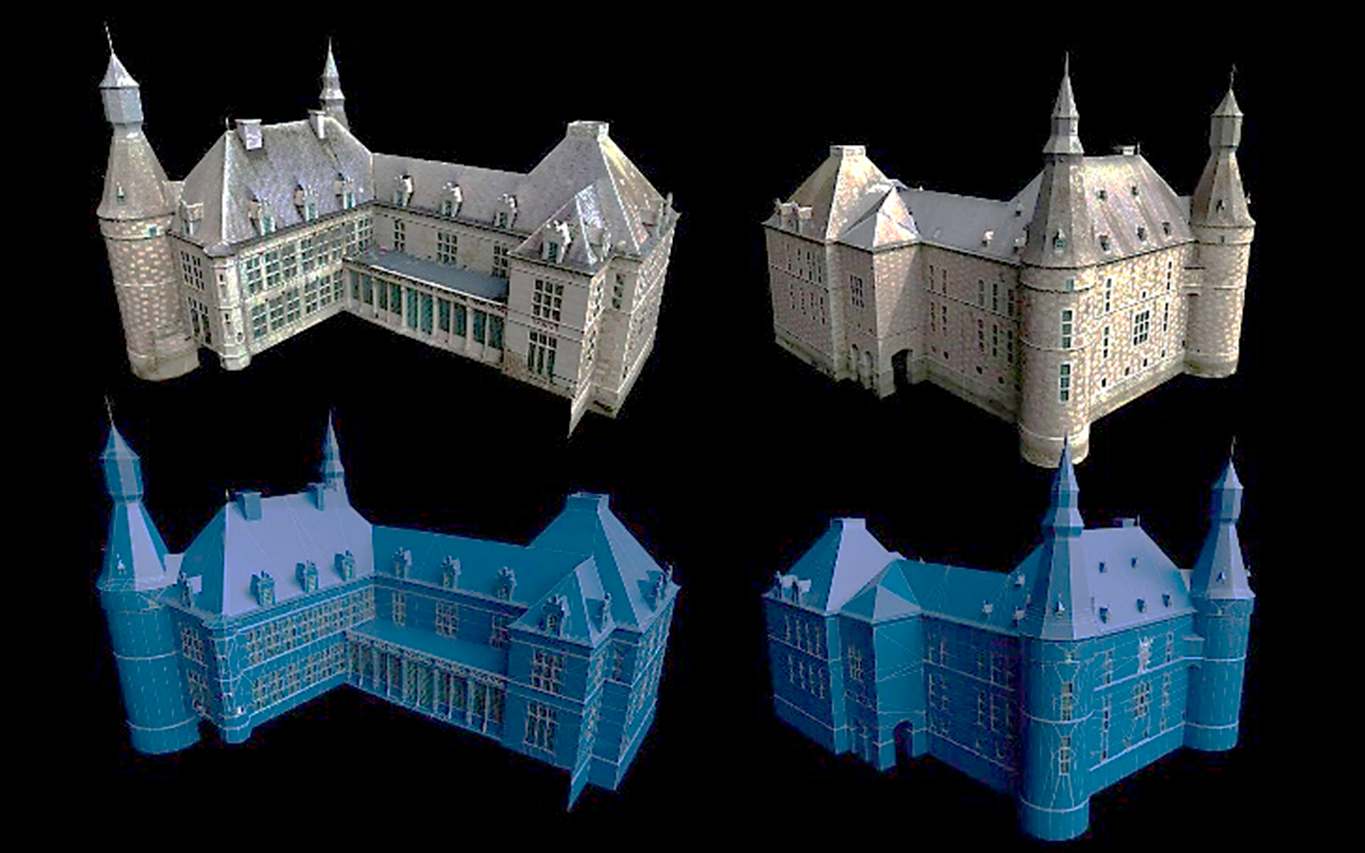

Reality capture allows for the reconstruction, with a high accuracy, of the physical reality of cultural heritage sites. Obtained 3D models are often used for various applications such as promotional content creation, virtual tours, and immersive experiences. In this paper, we study new ways to interact with these high-quality 3D reconstructions in a real-world scenario. We propose a user-centric product design to create a virtual reality (VR) application specifically intended for multi-modal purposes. It is applied to the castle of Jehay (Belgium), which is under renovation, to permit multi-user digital immersive experiences. The article proposes a high-level view of multi-disciplinary processes, from a needs analysis to the 3D reality capture workflow and the creation of a VR environment incorporated into an immersive application. We provide several relevant VR parameters for the scene optimization, the locomotion system, and the multi-user environment definition that were tested in a heritage tourism context.

@article{poux2020a,

title={Initial User-Centered Design of a Virtual Reality Heritage System: Applications for Digital Tourism},

volume={12},

ISSN={2072-4292},

url={http://dx.doi.org/10.3390/rs12162583},

DOI={10.3390/rs12162583},

number={16},

journal={Remote Sensing},

publisher={MDPI AG},

author={Poux, Florent and Valembois, Quentin and Mattes, Christian and Kobbelt, Leif and Billen, Roland},

year={2020},

month={Aug},

pages={2583}

}

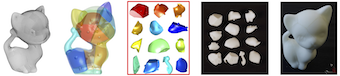

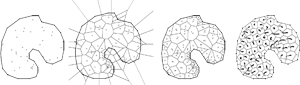

SEG-MAT: 3D Shape Segmentation Using Medial Axis Transform

Segmenting arbitrary 3D objects into constituent parts that are structurally meaningful is a fundamental problem encountered in a wide range of computer graphics applications. Existing methods for 3D shape segmentation suffer from complex geometry processing and heavy computation caused by using low-level features and fragmented segmentation results due to the lack of global consideration. We present an efficient method, called SEG-MAT, based on the medial axis transform (MAT) of the input shape. Specifically, with the rich geometrical and structural information encoded in the MAT, we are able to develop a simple and principled approach to effectively identify the various types of junctions between different parts of a 3D shape. Extensive evaluations and comparisons show that our method outperforms the state-of-the-art methods in terms of segmentation quality and is also one order of magnitude faster.

@ARTICLE{9234096,

author={C. {Lin} and L. {Liu} and C. {Li} and L. {Kobbelt} and B. {Wang} and S. {Xin} and W. {Wang}},

journal={IEEE Transactions on Visualization and Computer Graphics},

title={SEG-MAT: 3D Shape Segmentation Using Medial Axis Transform},

year={2020},

volume={},

number={},

pages={1-1},

doi={10.1109/TVCG.2020.3032566}}

Parametrization Quantization with Free Boundaries for Trimmed Quad Meshing

The generation of quad meshes based on surface parametrization techniques has proven to be a versatile approach. These techniques quantize an initial seamless parametrization so as to obtain an integer grid map implying a pure quad mesh. State-of-the-art methods following this approach have to assume that the surface to be meshed either has no boundary, or has a boundary which the resulting mesh is supposed to be aligned to. In a variety of applications this is not desirable and non-boundary-aligned meshes or grid-parametrizations are preferred. We thus present a technique to robustly generate integer grid maps which are either boundary-aligned, non-boundary-aligned, or partially boundary-aligned, just as required by different applications. We thereby generalize previous work to this broader setting. This enables the reliable generation of trimmed quad meshes with partial elements along the boundary, preferable in various scenarios, from tiled texturing over design and modeling to fabrication and architecture, due to fewer constraints and hence higher overall mesh quality and other benefits in terms of aesthetics and flexibility.

@article{Lyon:2019:TrimmedQuadMeshing,

author = "Lyon, Max and Campen, Marcel and Bommes, David and Kobbelt, Leif",

title = "Parametrization Quantization with Free Boundaries for Trimmed Quad Meshing",

journal = "ACM Transactions on Graphics",

volume = 38,

number = 4,

year = 2019

}

Distortion-Minimizing Injective Maps Between Surfaces

The problem of discrete surface parametrization, i.e. mapping a mesh to a planar domain, has been investigated extensively. We address the more general problem of mapping between surfaces. In particular, we provide a formulation that yields a map between two disk-topology meshes, which is continuous and injective by construction and which locally minimizes intrinsic distortion. A common approach is to express such a map as the composition of two maps via a simple intermediate domain such as the plane, and to independently optimize the individual maps. However, even if both individual maps are of minimal distortion, there is potentially high distortion in the composed map. In contrast to many previous works, we minimize distortion in an end-to-end manner, directly optimizing the quality of the composed map. This setting poses additional challenges due to the discrete nature of both the source and the target domain. We propose a formulation that, despite the combinatorial aspects of the problem, allows for a purely continuous optimization. Further, our approach addresses the non-smooth nature of discrete distortion measures in this context which hinders straightforward application of off-the-shelf optimization techniques. We demonstrate that, despite the challenges inherent to the more involved setting, discrete surface-to-surface maps can be optimized effectively.

@article{schmidt2019distortion,

author = {Schmidt, Patrick and Born, Janis and Campen, Marcel and Kobbelt, Leif},

title = {Distortion-Minimizing Injective Maps Between Surfaces},

journal = {ACM Transactions on Graphics},

issue_date = {November 2019},

volume = {38},

number = {6},

month = nov,

year = {2019},

articleno = {156},

url = {https://doi.org/10.1145/3355089.3356519},

doi = {10.1145/3355089.3356519},

publisher = {ACM},

address = {New York, NY, USA},

}

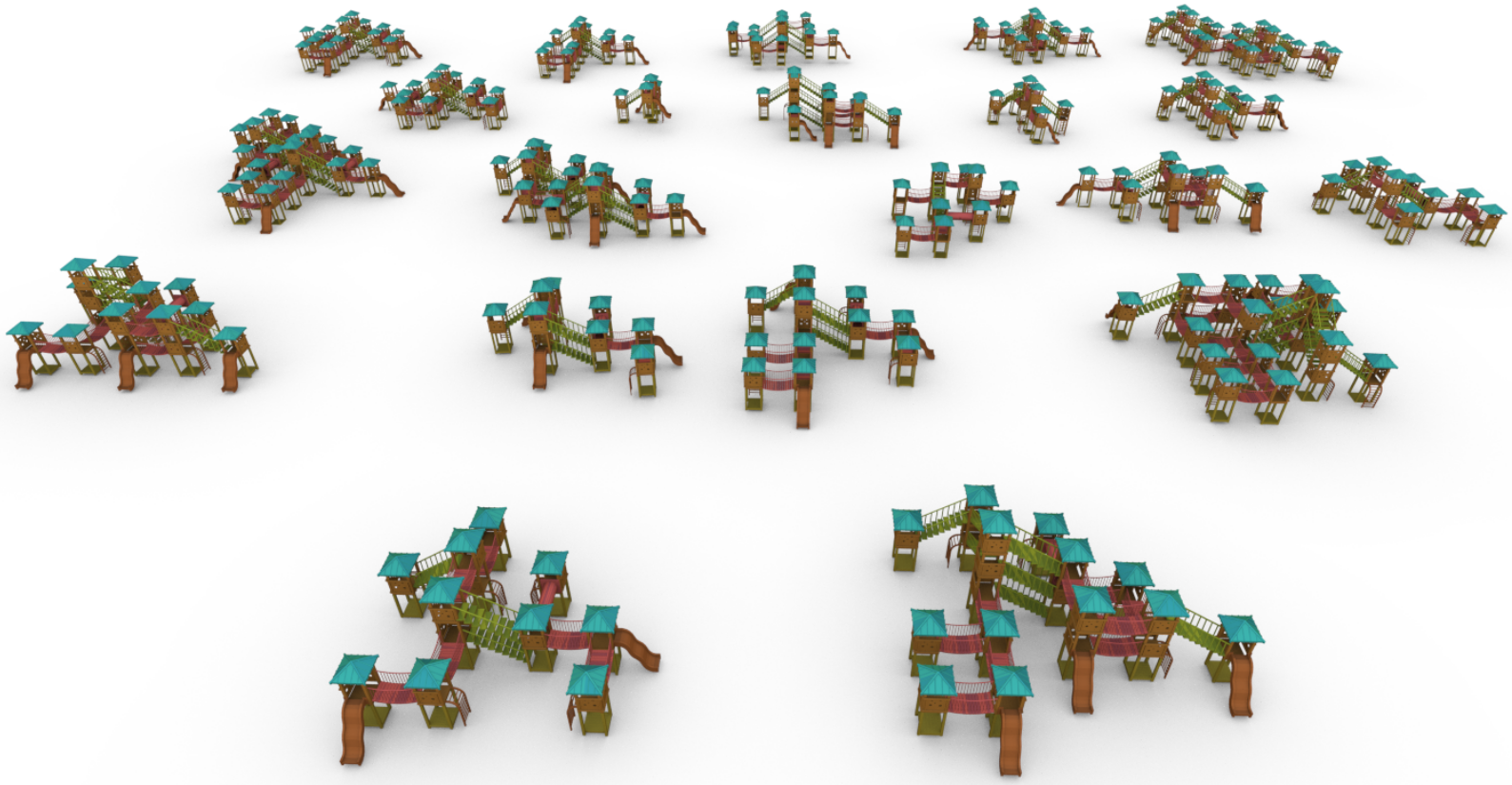

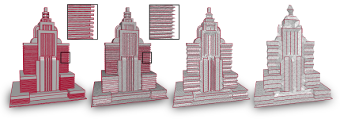

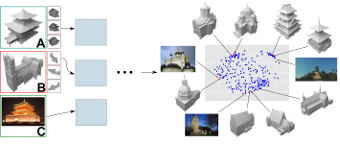

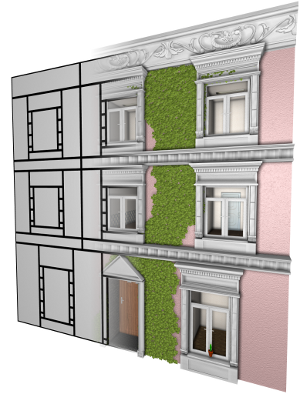

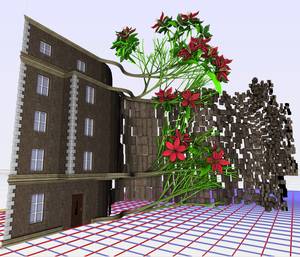

String-Based Synthesis of Structured Shapes

We propose a novel method to synthesize geometric models from a given class of context-aware structured shapes such as buildings and other man-made objects. Our central idea is to leverage powerful machine learning methods from the area of natural language processing for this task. To this end, we propose a technique that maps shapes to strings and vice versa, through an intermediate shape graph representation. We then convert procedurally generated shape repositories into text databases that in turn can be used to train a variational autoencoder, which enables higher-level shape manipulation and synthesis, such as interpolation and sampling, via its continuous latent space.

@article{Kalojanov2019,

journal = {Computer Graphics Forum},

title = {{String-Based Synthesis of Structured Shapes}},

author = {Javor Kalojanov and Isaak Lim and Niloy Mitra and Leif Kobbelt},

pages = {027-036},

volume= {38},

number= {2},

year = {2019},

note = {\URL{https://diglib.eg.org/bitstream/handle/10.1111/cgf13616/v38i2pp027-036.pdf}},

DOI = {10.1111/cgf.13616},

}

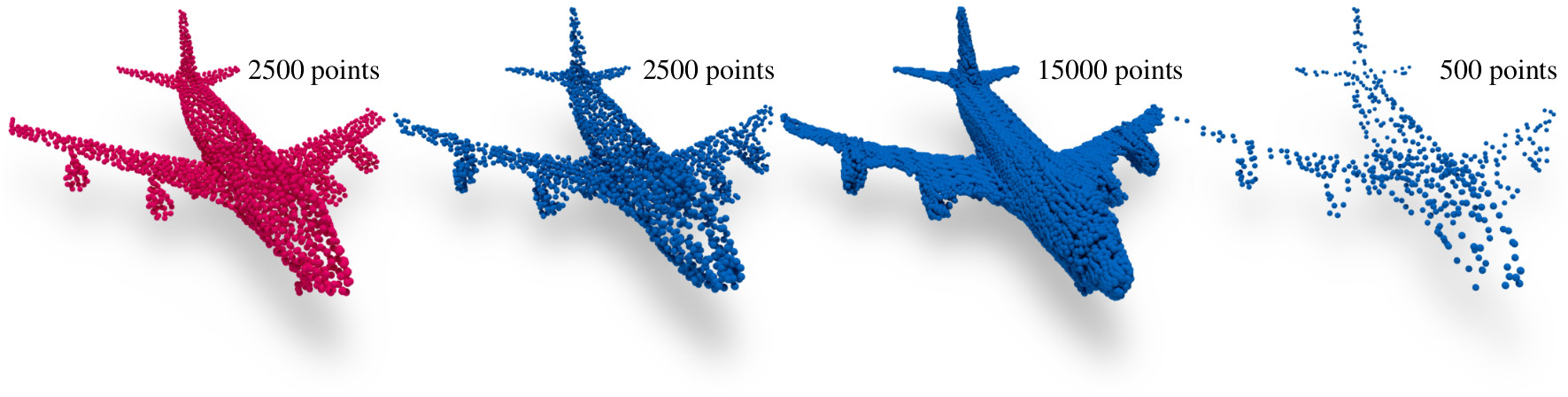

A Convolutional Decoder for Point Clouds using Adaptive Instance Normalization

Automatic synthesis of high quality 3D shapes is an ongoing and challenging area of research. While several data-driven methods have been proposed that make use of neural networks to generate 3D shapes, none of them reach the level of quality that deep learning synthesis approaches for images provide. In this work we present a method for a convolutional point cloud decoder/generator that makes use of recent advances in the domain of image synthesis. Namely, we use Adaptive Instance Normalization and offer an intuition on why it can improve training. Furthermore, we propose extensions to the minimization of the commonly used Chamfer distance for auto-encoding point clouds. In addition, we show that careful sampling is important both for the input geometry and in our point cloud generation process to improve results. The results are evaluated in an auto-encoding setup to offer both qualitative and quantitative analysis. The proposed decoder is validated by an extensive ablation study and is able to outperform current state of the art results in a number of experiments. We show the applicability of our method in the fields of point cloud upsampling, single view reconstruction, and shape synthesis.
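For reference, the Chamfer distance mentioned above compares two point clouds through nearest-neighbor distances in both directions. The sketch below uses one common convention (mean of squared distances, summed over both directions); other conventions exist, and the extensions proposed in the paper are not reproduced here.

```python
def squared_distance(p, q):
    return sum((a - b) ** 2 for a, b in zip(p, q))

def chamfer_distance(cloud_a, cloud_b):
    """Symmetric Chamfer distance with mean squared nearest-neighbor terms."""
    def one_sided(source, target):
        return sum(min(squared_distance(p, q) for q in target)
                   for p in source) / len(source)
    return one_sided(cloud_a, cloud_b) + one_sided(cloud_b, cloud_a)

cloud_a = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
cloud_b = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [3.0, 0.0, 0.0]]
distance = chamfer_distance(cloud_a, cloud_b)
```

Here every point of `cloud_a` has an exact match in `cloud_b`, so only the extra point at x = 3 contributes, giving 4/3.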

@article{Lim:2019:ConvolutionalDecoder,

author = "Lim, Isaak and Ibing, Moritz and Kobbelt, Leif",

title = "A Convolutional Decoder for Point Clouds using Adaptive Instance Normalization",

journal = "Computer Graphics Forum",

volume = 38,

number = 5,

year = 2019

}

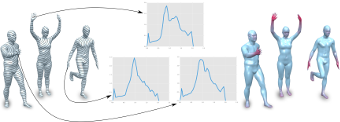

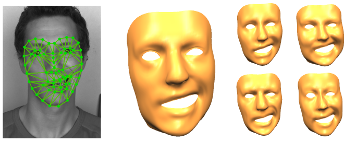

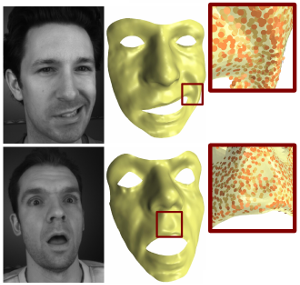

ACAP: Sparse Data Driven Mesh Deformation

Example-based mesh deformation methods are powerful tools for realistic shape editing. However, existing techniques typically combine all the example deformation modes, which can lead to overfitting, i.e. using an overly complicated model to explain the user-specified deformation. This leads to implausible or unstable deformation results, including unexpected global changes outside the region of interest. To address this fundamental limitation, we propose a sparse blending method that automatically selects a smaller number of deformation modes to compactly describe the desired deformation. This along with a suitably chosen deformation basis including spatially localized deformation modes leads to significant advantages, including more meaningful, reliable, and efficient deformations because fewer and localized deformation modes are applied. To cope with large rotations, we develop a simple but effective representation based on polar decomposition of deformation gradients, which resolves the ambiguity of large global rotations using an as-consistent-as-possible global optimization. This simple representation has a closed form solution for derivatives, making it efficient for our sparse localized representation and thus ensuring interactive performance. Experimental results show that our method outperforms state-of-the-art data-driven mesh deformation methods, for both quality of results and efficiency.
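The polar decomposition mentioned in the abstract splits a deformation gradient F into a rotation R and a symmetric stretch S with F = R S. The sketch below shows this for the 2D case only, using a closed-form rotation angle; it assumes det(F) > 0 and is not the paper's 3D representation, nor does it include the as-consistent-as-possible global optimization. The function name `polar_decompose_2d` is hypothetical.

```python
import math


def polar_decompose_2d(F):
    """Polar decomposition F = R S of a 2x2 matrix with positive determinant.

    R is the rotation closest to F and S = R^T F is the symmetric stretch.
    Illustrative 2D sketch only; matrices are nested lists [[a, b], [c, d]].
    """
    (a, b), (c, d) = F
    # Closed-form angle of the closest rotation to a 2x2 matrix.
    theta = math.atan2(c - b, a + d)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    R = [[cos_t, -sin_t], [sin_t, cos_t]]
    # S = R^T F, which is symmetric by construction of theta.
    S = [
        [cos_t * a + sin_t * c, cos_t * b + sin_t * d],
        [-sin_t * a + cos_t * c, -sin_t * b + cos_t * d],
    ]
    return R, S


if __name__ == "__main__":
    # Build F as a 30 degree rotation applied after an axis-aligned stretch.
    angle = math.radians(30.0)
    F = [[2.0 * math.cos(angle), -0.5 * math.sin(angle)],
         [2.0 * math.sin(angle), 0.5 * math.cos(angle)]]
    R, S = polar_decompose_2d(F)
    print(R)
    print(S)
```

Separating rotation from stretch in this way is what allows the two parts to be blended independently, although a single decomposition cannot distinguish rotations that differ by full turns, which is the ambiguity the abstract refers to.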

@article{gao2019sparse,

title={Sparse data driven mesh deformation},

author={Gao, Lin and Lai, Yu-Kun and Yang, Jie and Zhang, Ling-Xiao and Xia, Shihong and Kobbelt, Leif},

journal={IEEE transactions on visualization and computer graphics},

year={2019},

publisher={IEEE}

}

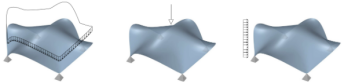

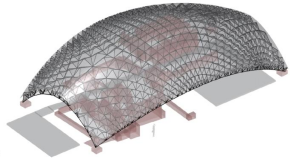

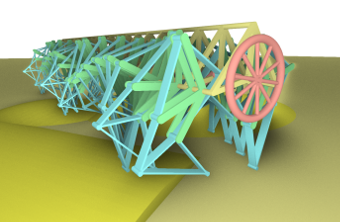

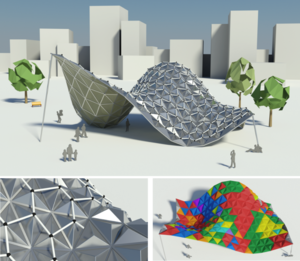

Form Finding of Stress Adapted Folding as a Lightweight Structure Under Different Load Cases

In steel construction, the use of folds is limited to longitudinal folds (e.g. trapezoidal sheets). The efficiency of creases can be increased by aligning the folding pattern to the principal stresses or to their directions. This paper presents a form-finding approach that uses the material as homogeneously as possible. In addition to the purely geometric alignment according to the stress directions, it also allows the stress intensity to be taken into account during form-finding. A trajectory mesh of the principal stresses is generated, and the structure is derived from it. The relationships between the spacing of the stress lines, their progression, and the stress intensity are discussed and implemented in the form-finding approaches. Building on this, the paper additionally addresses the question of which load case is the most effective basis for designing the crease pattern when several load cases can act simultaneously.

@article {Musto:2019:2518-6582:1,

title = "Form finding of stress adapted folding as a lightweight structure under different load cases",

journal = "Proceedings of IASS Annual Symposia",

parent_itemid = "infobike://iass/piass",

publishercode ="iass",

year = "2019",

volume = "2019",

number = "13",

publication date ="2019-10-07T00:00:00",

pages = "1-8",

itemtype = "ARTICLE",

issn = "2518-6582",

eissn = "2518-6582",

url = "https://www.ingentaconnect.com/content/iass/piass/2019/00002019/00000013/art00006",

keyword = "lightweight-construction, folding, Mixed-Integer Quadrangulation, principle stress lines",

author = "Musto, Juan and Lyon, Max and Trautz, Martin and Kobbelt, Leif",

abstract = "In steel construction, the use of folds is limited to longitudinal folds (e.g. trapezoidal sheets). The efficiency of creases can be increased by aligning the folding pattern to the principal stresses or to their directions. This paper presents a form-finding approach to use the material as homogeneously as possible. In addition to the purely geometric alignment according to the stress directions, it also allows the stress intensity to be taken into account during form-finding. A trajectory mesh of the principle stresses is generated on the basis of which the structure is derived. The relationships between the stress lines distance, progression and stress intensity are discussed and implemented in the approaches of form-finding. Building on this, this paper additionally deals with the question of which load case is the most effective basis for designing the crease pattern when several load cases can act simultaneously.",

}

Beanspruchungsoptimierte Faltungen aus Stahl für selbsttragende Raumfaltwerke

In construction, the use of folds is limited to longitudinal folds (trapezoidal sheets) and regular folds. Spatial folded-plate structures and lightweight folded panels, i.e. spatially curved and three-dimensional surface structures, are desiderata of lightweight construction with sheet steel. Spatial folded-plate structures consist predominantly of regular folding patterns based on tessellation with primitive faces (triangles and quadrilaterals). To improve the efficiency of these lightweight structures, it is natural to apply folding patterns that follow the stresses or the stress distribution instead of regular folding patterns based on geometric principles. To this end, a form-finding process must be developed that is based on generating a trajectory mesh derived from the governing load case (the form-giving load case). Comparing the material usage and load-bearing capacity of folds generated on a geometric basis with those of a fold developed from the trajectory mesh shows the change in efficiency.

@article{https://doi.org/10.1002/bate.201900024,

author = {Musto, Juan and Lyon, Max and Trautz, Martin and Kobbelt, Leif},

title = {Beanspruchungsoptimierte Faltungen aus Stahl für selbsttragende Raumfaltwerke},

journal = {Bautechnik},

volume = {96},

number = {12},

pages = {902-911},

keywords = {Leichtbau, Faltungen, Hauptspannungstrajektorien, Mixed-Integer Quadrangulation, lightweight-construction, foldings, principle stress trajectories, mixed-integer quadrangulation, Stahlbau, Leichtbau, Steel construction, lightweight construction},

doi = {https://doi.org/10.1002/bate.201900024},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1002/bate.201900024},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1002/bate.201900024},

abstract = {Abstract Der Einsatz von Faltungen beschränkt sich im Bauwesen auf Longitudinalfaltungen (Trapezbleche) und regelmäßige Faltungen. Raumfaltwerke und Faltleichtbauplatten, räumlich gekrümmte und dreidimensionale Flächentragwerke sind Desiderate eines Leichtbaus mit Stahlblechen. Raumfaltwerke bestehen vorwiegend aus regelmäßigen Faltmustern, die auf Tesselierung mit Primitivflächen (Drei- und Vierecke) basieren. Um die Effizienz dieser Leichtbaustrukturen zu verbessern, liegt es nahe, statt regelmäßige und auf geometrischen Prinzipien basierende Faltmuster umzusetzen, Faltmuster nach Maßgabe der Beanspruchungen bzw. der Beanspruchungsverteilung anzuwenden. Hierzu ist ein Formfindungsprozess zu entwickeln, der auf der Generierung eines Trajektoriennetzes basiert, das aus dem maßgeblichen Lastfall (formgebenden Lastfall) abgeleitet wird. Der Vergleich des Masseneinsatzes und der Traglast der Faltungen, die auf geometrischer Basis erzeugt wurden, mit einer auf Basis des Trajektoriennetzes entwickelten Faltung zeigt die Veränderung der Effizienz.},

year = {2019}

}

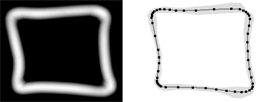

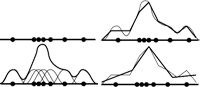

Structured Discrete Shape Approximation: Theoretical Complexity and Practical Algorithm

We consider the problem of approximating a two-dimensional shape contour (or curve segment) using discrete assembly systems, which allow building geometric structures based on limited sets of node and edge types subject to edge length and orientation restrictions. We show that already deciding feasibility of such approximation problems is NP-hard, and remains intractable even for very simple setups. We then devise an algorithmic framework that combines shape sampling with exact cardinality minimization to obtain good approximations using few components. As a particular application and showcase example, we discuss approximating shape contours using the classical Zometool construction kit and provide promising computational results, demonstrating that our algorithm is capable of obtaining good shape representations within reasonable time, in spite of the problem's general intractability. We conclude the paper with an outlook on possible extensions of the developed methodology, in particular regarding 3D shape approximation tasks.

Code available upon request.

@article{TILLMANN2021101795,

title = {Structured discrete shape approximation: Theoretical complexity and practical algorithm},

journal = {Computational Geometry},

volume = {99},

pages = {101795},

year = {2021},

issn = {0925-7721},

doi = {https://doi.org/10.1016/j.comgeo.2021.101795},

url = {https://www.sciencedirect.com/science/article/pii/S0925772121000511},

author = {Andreas M. Tillmann and Leif Kobbelt},

keywords = {Shape approximation, Discrete assembly systems, Computational complexity, Mixed-integer programming, Zometool},

abstract = {We consider the problem of approximating a two-dimensional shape contour (or curve segment) using discrete assembly systems, which allow to build geometric structures based on limited sets of node and edge types subject to edge length and orientation restrictions. We show that already deciding feasibility of such approximation problems is NP-hard, and remains intractable even for very simple setups. We then devise an algorithmic framework that combines shape sampling with exact cardinality minimization to obtain good approximations using few components. As a particular application and showcase example, we discuss approximating shape contours using the classical Zometool construction kit and provide promising computational results, demonstrating that our algorithm is capable of obtaining good shape representations within reasonable time, in spite of the problem's general intractability. We conclude the paper with an outlook on possible extensions of the developed methodology, in particular regarding 3D shape approximation tasks.}

}

Feature Curve Co-Completion in Noisy Data

Feature curves on 3D shapes provide important hints about significant parts of the geometry and reveal their underlying structure. However, when we process real-world data, automatically detected feature curves are affected by measurement uncertainty, missing data, and sampling resolution, leading to noisy, fragmented, and incomplete feature curve networks. These artifacts make further processing unreliable. In this paper we analyze the global co-occurrence information in noisy feature curve networks to fill in missing data and suppress weakly supported feature curves. For this we propose an unsupervised approach to find meaningful structure within the incomplete data by detecting multiple occurrences of feature curve configurations (co-occurrence analysis). We cluster and merge these into feature curve templates, which we leverage to identify strongly supported feature curve segments as well as to complete missing data in the feature curve network. In the presence of significant noise, previous approaches had to resort to user input, while our method performs fully automatic feature curve co-completion. Finding feature reoccurrences, however, is challenging since naive feature curve comparison fails in this setting due to fragmentation and partial overlaps of curve segments. To tackle this problem we propose a robust method for partial curve matching. This provides us with the means to apply symmetry detection methods to identify co-occurring configurations. Finally, Bayesian model selection enables us to detect and group reoccurrences that describe the data well and with low redundancy.

@inproceedings{gehre2018feature,

title={Feature Curve Co-Completion in Noisy Data},

author={Gehre, Anne and Lim, Isaak and Kobbelt, Leif},

booktitle={Computer Graphics Forum},

volume={37},

number={2},

year={2018},

organization={Wiley Online Library}

}

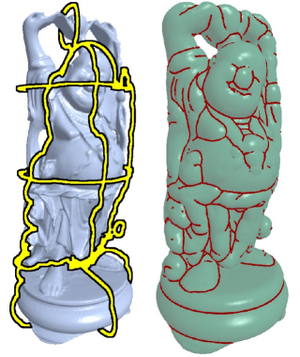

Interactive Curve Constrained Functional Maps

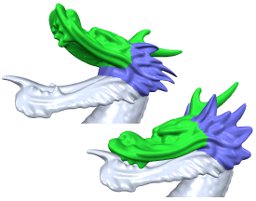

Functional maps have gained popularity as a versatile framework for representing intrinsic correspondence between 3D shapes using algebraic machinery. A key ingredient for this framework is the ability to find pairs of corresponding functions (typically, feature descriptors) across the shapes. This is a challenging problem on its own, and when the shapes are strongly non-isometric, nearly impossible to solve automatically. In this paper, we use feature curve correspondences to provide flexible abstractions of semantically similar parts of non-isometric shapes. We design a user interface implementing an interactive process for constructing shape correspondence, allowing the user to update the functional map at interactive rates by introducing feature curve correspondences. We add feature curve preservation constraints to the functional map framework and propose an efficient numerical method to optimize the map with immediate feedback. Experimental results show that our approach establishes correspondences between geometrically diverse shapes with just a few clicks.

@article{Gehre:2018:InteractiveFunctionalMaps,

author = "Gehre, Anne and Bronstein, Michael and Kobbelt, Leif and Solomon, Justin",

title = "Interactive Curve Constrained Functional Maps",

journal = "Computer Graphics Forum",

volume = 37,

number = 5,

year = 2018

}

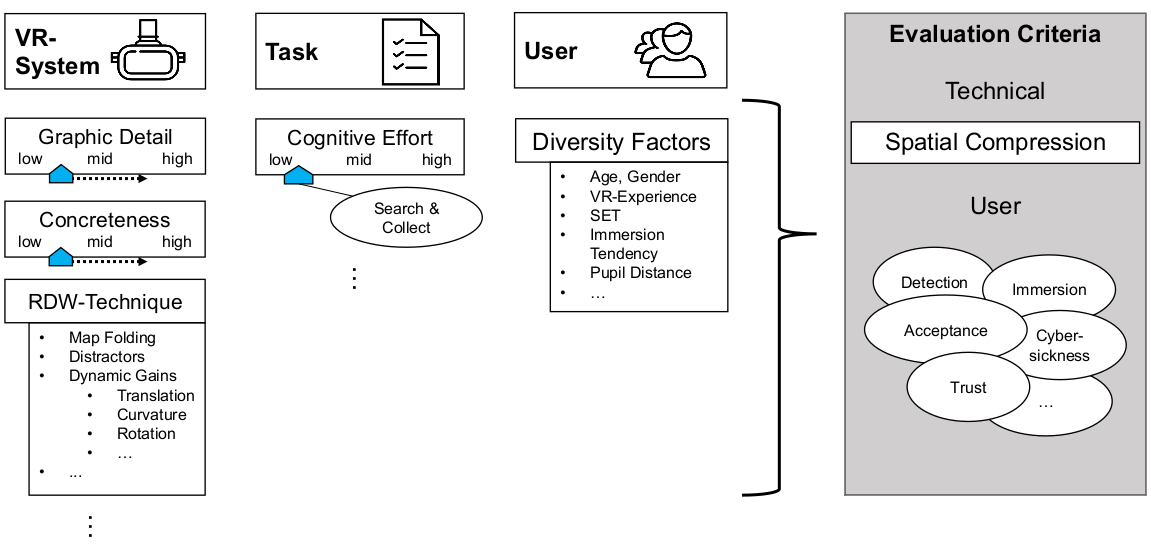

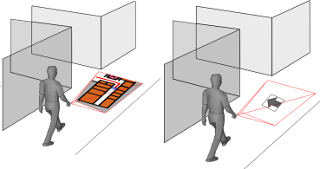

You Spin my Head Right Round: Threshold of Limited Immersion for Rotation Gains in Redirected Walking

In virtual environments, the space that can be explored by real walking is limited by the size of the tracked area. To enable unimpeded walking through large virtual spaces in small real-world surroundings, redirection techniques are used. These unnoticeably manipulate the user’s virtual walking trajectory. It is important to know how strongly such techniques can be applied without the user noticing the manipulation or getting cybersick. Previously, this was estimated by measuring a detection threshold (DT) in highly controlled psychophysical studies, which experimentally isolate the effect but do not aim for perceived immersion in the context of VR applications. While these studies suggest that only relatively low degrees of manipulation are tolerable, we claim that, besides establishing detection thresholds, it is important to know when the user’s immersion breaks. We hypothesize that the degree of unnoticed manipulation is significantly different from the detection threshold when the user is immersed in a task. We conducted three studies: a) to devise an experimental paradigm to measure the threshold of limited immersion (TLI), b) to measure the TLI for slowly decreasing and increasing rotation gains, and c) to establish a baseline of cybersickness for our experimental setup. For rotation gains greater than 1.0, we found that immersion breaks quite late after the gain is detectable. However, for gains less than 1.0, some users reported a break of immersion even before established detection thresholds were reached. Apparently, the developed metric measures an additional quality of user experience. This article contributes to the development of effective spatial compression methods by utilizing the break of immersion as a benchmark for redirection techniques.
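As a small illustration of what a rotation gain does, the sketch below assumes the usual convention from the redirected walking literature, in which the gain is the ratio of virtual to real head rotation. The abstract itself does not spell out this definition, and the function names are hypothetical.

```python
def apply_rotation_gain(real_turn_deg, gain):
    """Virtual rotation shown to the user for a given real head rotation.

    Assumes gain = virtual rotation / real rotation, so a gain of 1.0
    leaves the rotation unchanged.
    """
    return gain * real_turn_deg


def real_turn_needed(virtual_turn_deg, gain):
    """Real rotation the user must perform to achieve a virtual rotation."""
    return virtual_turn_deg / gain


if __name__ == "__main__":
    # With a gain above 1.0 the virtual scene turns further than the user does.
    print(apply_rotation_gain(90.0, 1.5))
    # Conversely, a half turn in the virtual scene needs less real rotation.
    print(real_turn_needed(180.0, 1.5))
```

Under this convention, gains above 1.0 compress the real rotation needed for a virtual turn, while gains below 1.0 require the user to physically turn further than the virtual scene does.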

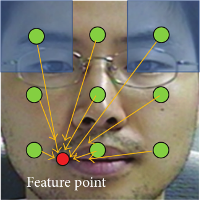

A Simple Approach to Intrinsic Correspondence Learning on Unstructured 3D Meshes